The 4-D approach to dynamic vision and its applications in cognitive robotics have gained more general importance through the attention that neurologists, psychologists and philosophers have recently given to the ‘predictive mind’ as a general basis for cognition (see refs. [7.1] to [7.7] below). Feedback of prediction errors allows building an internal representation of the actual environment with minimal time delay and, according to these references, with minimal free energy (in the terminology used there).

In engineering terms, the following steps have proven to be efficient and essential:

- Install a spatiotemporal representation of the environment around the point ‘here and now’ (4-D approach) by exploiting predefined 4-D models.

- Minimize delay times by feedback of prediction errors (from whatever types of features measured and/or observed).

- The spatiotemporal models describe motion processes of objects (including other subjects, i.e. agents) in the real world. Image features are only intermediate elements for adapting the top-down 4-D models for real-time perception to the bottom-up 2-D features of objects measured in the actual image. By feeding back prediction errors (exploiting feedback matrices derived from the linearized spatiotemporal models for the process under scrutiny at the 4-D point ‘here and now’), both the hypothesized models and the actual state of the variables involved may be improved. This has to be done by each individual subject for each object observed (including other subjects).

- The corresponding model-base has to deal with individual subjects (sensing and acting agents) and their perception processes, as well as with their mutual communication in order to improve the generality and applicability of the models jointly developed and the state of objects estimated.

- The 4-D approach is structured as a hierarchy of overlapping pairs of bottom-up and top-down processing levels.

- There will be no final truth about objects and subjects and their movements perceived in the real world, but only best-fitting hypotheses.

- Similar objects may be grouped into classes with certain properties, like stationary obstacles, cars, trucks, houses, trees etc. For each class typical parameters for shape and size form a specific knowledge base.

- Subjects may also be grouped into classes; however, class members must have similar capabilities in perception, behavior decision, and motion control depending on the situation encountered.

- Typical capabilities in perception and motion control are additional knowledge elements for subjects. Certain time histories of control output result in typical maneuvers like ‘lane changing’, ‘turn-off onto a cross-roads’, ‘avoiding an obstacle by steering and/or braking’ etc.

- The most critical point is always to come up with proper 4-D object hypotheses for groups of features observed moving in conjunction. This initialization step can be made efficient by defining classes of applications with the typical objects and subjects usually encountered. Typical examples are traffic on a highway, traffic scenes in an urban environment, or traffic on cross-country state (or minor) roads.
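The feedback of prediction errors listed above can be illustrated with a minimal sketch. It is not the UniBwM implementation: it uses a standard linear Kalman filter for a single object moving along one axis with a constant-velocity model. The function names `predict`, `update` and `run_demo`, the noise values `q` and `r`, the cycle time of 0.04 s and the simplified process noise (added to the diagonal only) are all assumptions made for illustration.

```python
def predict(x, P, dt, q):
    """Predict state [position, velocity] one cycle ahead (constant velocity)."""
    pos, vel = x
    x_pred = [pos + dt * vel, vel]
    # P_pred = F P F^T + Q with F = [[1, dt], [0, 1]]; Q = diag(q, q) (assumed)
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    P_pred = [
        [p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11],
        [p10 + dt * p11, p11 + q],
    ]
    return x_pred, P_pred


def update(x_pred, P_pred, z, r):
    """Feed the prediction error of a position measurement z back into the state."""
    error = z - x_pred[0]               # prediction error (measured - predicted)
    s = P_pred[0][0] + r                # innovation variance
    k0 = P_pred[0][0] / s               # feedback gain for position
    k1 = P_pred[1][0] / s               # feedback gain for velocity
    x = [x_pred[0] + k0 * error, x_pred[1] + k1 * error]
    P = [
        [(1 - k0) * P_pred[0][0], (1 - k0) * P_pred[0][1]],
        [P_pred[1][0] - k1 * P_pred[0][0], P_pred[1][1] - k1 * P_pred[0][1]],
    ]
    return x, P, error


def run_demo(steps=50, dt=0.04, true_velocity=2.0):
    """Track an object moving at constant speed from noise-free position data."""
    x = [0.0, 0.0]                      # initial hypothesis: at rest at the origin
    P = [[10.0, 0.0], [0.0, 10.0]]      # large initial uncertainty
    for k in range(1, steps + 1):
        x, P = predict(x, P, dt, q=1e-4)
        z = true_velocity * k * dt      # simulated measurement
        x, P, _ = update(x, P, z, r=0.01)
    return x
```

Although only positions are measured, the velocity component is recovered through the dynamical model; in this toy case `run_demo()` should return an estimate close to position 4.0 and velocity 2.0.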

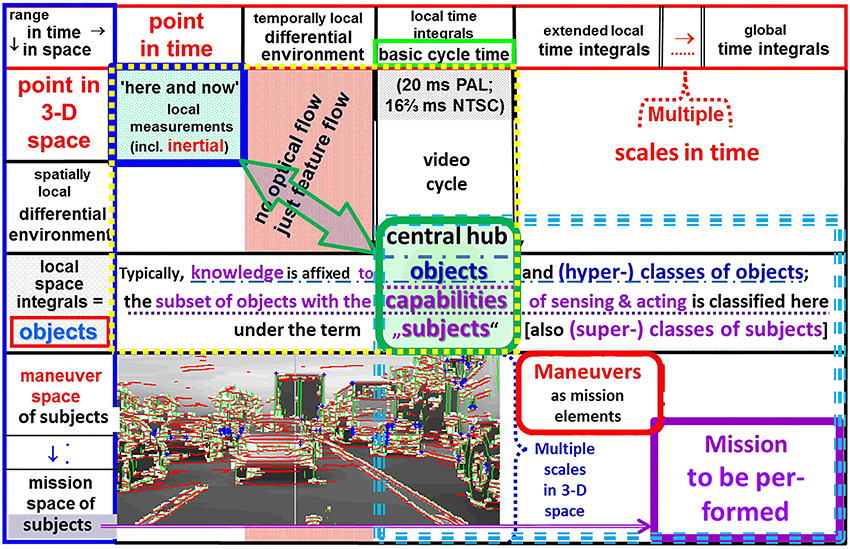

- Three layers of data and knowledge processing result in both space and time

The complexity of the three layers for efficient visual perception is sketched in the following matrix:

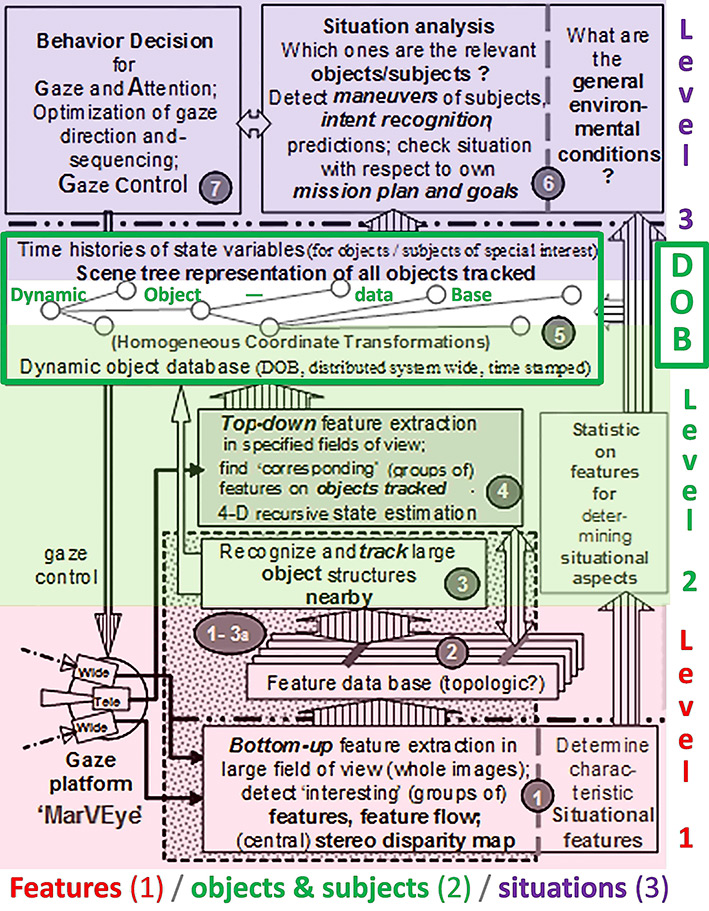

The parallel use of this structure in visual dynamic machine perception, as developed at UniBwM over the last two decades of the last century, is indicated in the two figures below, showing a) the three levels of data and knowledge processing, and b) the multiple feedback loops within and crossing the three levels.

a) Three parallel levels in processing, encompassing different time scales and knowledge bases.

At the bottom is the feature level (shown in pink) with two basically different components. The first is exclusively bottom-up: it starts from the pixel data and looks for a set of general image features as a base for all further processing (e.g. the ones shown in the figure above in the lower left corner). The second component is guided top-down by the higher-level perception processes: here, additional special features of hypothesized objects are sought in specific image areas. This step may take active gaze control into account, including object tracking and saccadic jumps in gaze direction. During saccades all features, including those from the (bottom-up) first component, are disregarded, since the fast turn rates of the ‘eye’ lead to image smear. During these very short periods of time the system relies entirely on predicted feature values.
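How a tracker can bridge a saccade by relying on predictions alone may be sketched as follows. The example swaps in a simple alpha-beta filter for one image-feature coordinate; the function name `track`, the gains `alpha` and `beta`, and the per-cycle saccade flag are assumptions for illustration, not details taken from the system described here.

```python
def track(measurements, in_saccade, pos, vel, dt, alpha=0.5, beta=0.1):
    """Track one feature coordinate; ignore measurements during saccades."""
    history = []
    for z, saccade in zip(measurements, in_saccade):
        pos_pred = pos + dt * vel           # prediction from the motion model
        if saccade:
            # Image smear: discard the measurement, keep the predicted value.
            pos = pos_pred
        else:
            error = z - pos_pred            # prediction error
            pos = pos_pred + alpha * error  # correct position
            vel = vel + (beta / dt) * error # correct velocity
        history.append(pos)
    return history


# Cycles 3 and 4 fall into a saccade; their (smeared) measurements are unusable.
estimates = track(
    measurements=[1.0, 2.0, 999.0, 999.0, 5.0, 6.0],
    in_saccade=[False, False, True, True, False, False],
    pos=0.0, vel=1.0, dt=1.0,
)
```

Because the gap is filled with predicted values, the estimate is already near the right place when valid measurements return, so tracking can resume without re-initialization.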

The goal of looking at combinations of features over time is to detect objects (including other subjects) and their movements in 3-D space (not in the 2-D images). This difference is very important, since otherwise a series of complications due to occlusion and ego-motion may occur. Checking an object hypothesis usually takes a few video cycles before the object is announced to the higher-level perception system. This is done by writing the current best estimates of model parameters and state variables into the ‘Dynamic Object dataBase’ (DOB, in green). The DOB is realized as a ‘scene tree’ that contains, in a special form (quaternions), all characteristic data on the object type and its state (including motion parameters) relative to the perceiving agent (the ego-subject). The transition to state variables of objects reduces the data volume by 2 to 3 orders of magnitude compared to the feature data describing the same object. This level 2 is shown in green in the figure.
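The idea of a scene tree holding relative states can be made concrete with a small sketch. The layout below is hypothetical and not the actual DOB format: each node stores a translation and a unit quaternion relative to its parent, and `pose_in_root` chains these to obtain the pose relative to the ego-subject. The names `Node`, `qmul`, `rotate` and `pose_in_root` are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def qmul(a: Quat, b: Quat) -> Quat:
    """Hamilton product of two quaternions."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )


def rotate(q: Quat, v: Vec) -> Vec:
    """Rotate vector v by unit quaternion q (computes q * v * conj(q))."""
    conj = (q[0], -q[1], -q[2], -q[3])
    _, x, y, z = qmul(qmul(q, (0.0, v[0], v[1], v[2])), conj)
    return (x, y, z)


@dataclass
class Node:
    name: str
    t: Vec = (0.0, 0.0, 0.0)        # position in the parent frame
    q: Quat = (1.0, 0.0, 0.0, 0.0)  # orientation relative to the parent
    parent: Optional["Node"] = None


def pose_in_root(node: Node) -> Tuple[Vec, Quat]:
    """Chain relative poses up the tree to express node in the root frame."""
    t, q = node.t, node.q
    parent = node.parent
    while parent is not None:
        r = rotate(parent.q, t)
        t = (r[0] + parent.t[0], r[1] + parent.t[1], r[2] + parent.t[2])
        q = qmul(parent.q, q)
        parent = parent.parent
    return t, q


# Ego vehicle -> camera (yawed 90 degrees to the left) -> observed car.
h = math.sqrt(0.5)
ego = Node("ego")
camera = Node("camera", (1.0, 0.0, 1.5), (h, 0.0, 0.0, h), ego)
car = Node("car", (10.0, 0.0, 0.0), parent=camera)
car_position, car_orientation = pose_in_root(car)
```

A car seen 10 m straight ahead of the sideways-looking camera thus appears about 10 m to the left of the ego vehicle, offset by the camera mounting position.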

The top level 3 in visual perception and motion control (magenta in the figure) is the situation level, where mission performance has to be achieved taking the specific task given and the actual (unknown) environment encountered into account. On this level the exchange with humans may take place. More details may be found in the main section of this website and the references and dissertations given there, as well as in the book [Dickmanns 2007].

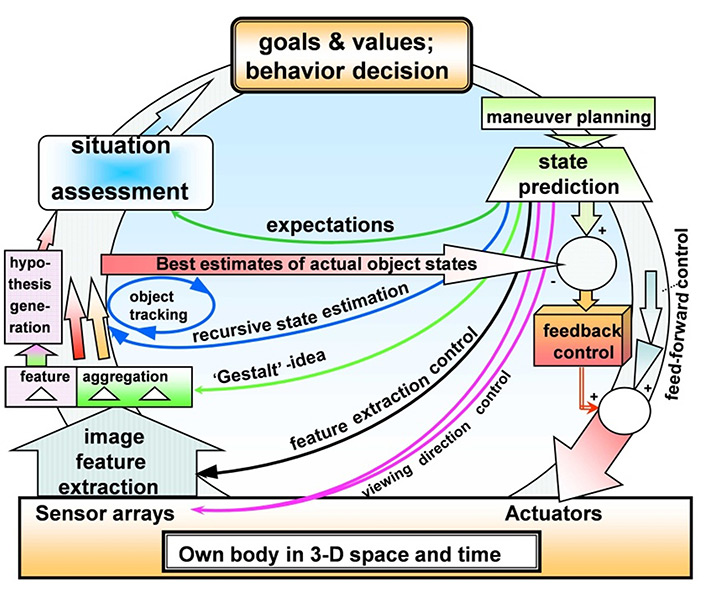

b) The multiple feedback loops within and crossing the three levels.

Since perception during action is the main task for a subject in real life, representing knowledge in parallel on all levels of perception, situation understanding, decision making and control output makes a system easily adaptable and efficient. The following figure gives a coarse understanding of the multiple feedback loops required for an efficiently acting subject. As the top guideline for behavior decisions, the pair ‘goals and values’ has been selected here. A goal may be the safe performance of a mission (no damage to the subject's own body); a value may be that no other subject must be hurt during this mission, and that no other valuable object must be damaged or destroyed. Achieving the mission in minimal time, with minimal energy or at minimal cost are further typical values.

About half a dozen feedback loops are shown in the figure, the outer one concerning the mission as a whole. The double-lined pink arrow indicates gaze control for keeping the most interesting part of the world in the viewing range. The black arrow serves to make image feature extraction most efficient. The light green arrow supports hypothesis generation, taking the actual mission and situation into account. The blue arrows facilitate object tracking and recursive estimation of the objects' relative states. The uppermost arrow in dark green supports situation assessment, taking all information on objects and subjects actually observed into account.

A somewhat more detailed representation of this steadily running process, emphasizing the two separate worlds, is shown in the next figure. The real world is shown at left, and the world as internally represented by the perceiving subject at right. The figure emphasizes the different capabilities and activities such as detection, tracking, and learning (on levels 1 to 3) for image features (lower center), individual objects (center), and the situation involving many objects (including other subjects) as well as the task to be performed.

Feature assignment to objects is a critical step; all features not assigned to recognized objects (1 to n above the straight green line) have to be examined specifically in order to generate new object hypotheses (upward left diagonal, shaded in pink). A new object is added to the list only after a few image cycles with small prediction errors (increasing the number of objects from n to n + 1). The tracking loop at right leads to the best estimates for the state and shape parameters of each object; thanks to the spatiotemporal models, even estimates for the velocity components may be achievable.
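The confirmation of a new object hypothesis over a few cycles can be sketched as a simple counter. The threshold `max_error`, the count `cycles_needed` and the names `Hypothesis` and `update_hypotheses` are assumptions for illustration; the text above only states that a few image cycles with small prediction errors are required.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    label: str
    good_cycles: int = 0   # consecutive cycles with small prediction error


def update_hypotheses(candidates, tracked, errors, max_error=1.0, cycles_needed=3):
    """Promote candidates to the tracked list after enough good cycles.

    errors maps a hypothesis label to its prediction error in this cycle;
    a missing entry means the hypothesis could not be matched at all.
    """
    remaining = []
    for hyp in candidates:
        err = errors.get(hyp.label)
        if err is not None and abs(err) <= max_error:
            hyp.good_cycles += 1
        else:
            hyp.good_cycles = 0          # start over after a poor cycle
        if hyp.good_cycles >= cycles_needed:
            tracked.append(hyp)          # number of tracked objects grows by one
        else:
            remaining.append(hyp)
    return remaining


candidates = [Hypothesis("car")]
tracked = []
counts = []
for cycle_errors in ({"car": 0.2}, {"car": 0.3}, {"car": 0.1}):
    candidates = update_hypotheses(candidates, tracked, cycle_errors)
    counts.append(len(tracked))
```

Resetting the counter after a poor cycle is one possible design choice; a more tolerant scheme could allow occasional outliers before discarding a hypothesis.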

By comparing the predicted object parameters with the newly estimated ones, the object model itself may also be improved (the learning loop in the upper center). The great benefit of the 4-D models for objects is that they may be used for properly setting the elements of the feedback matrix for the prediction errors in the estimation process. This even allows a) recognizing model parameters that cannot be improved by the actual measurement results, or b) eliminating features that do not contribute to improving the estimation process for some model parameters of an object [Dickmanns 2007]. Only the 4-D approach has this property. If all prediction errors are small, the internally represented world (at the right-hand side) will allow behavior decisions that fit the actual situation. The resulting temporal control output is then applied to the real-world components (gaze and/or vehicle control, red curve at the top). For an example realized as a result of the ‘AutoNav’ project (2001 and 2003) see the video at the end of R7.
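Points a) and b) can be illustrated with a crude heuristic on the Jacobian of the measurement model, where row i holds the sensitivity of feature i to each model parameter. This is only a sketch under stated assumptions: the source does not specify how the check is done, and a (near-)zero column or row is merely the simplest indicator. The function name `weak_parameters_and_features` and the tolerance `tol` are hypothetical.

```python
import math


def weak_parameters_and_features(jacobian, tol=1e-6):
    """Return indices of parameters and features with negligible sensitivity.

    jacobian[i][j] = d(feature i) / d(parameter j) at the current estimate.
    A near-zero column means no feature reacts to that parameter, so the
    current measurements cannot improve it. A near-zero row means that
    feature carries no information about any parameter.
    """
    n_rows = len(jacobian)
    n_cols = len(jacobian[0])
    col_norms = [
        math.sqrt(sum(jacobian[i][j] ** 2 for i in range(n_rows)))
        for j in range(n_cols)
    ]
    row_norms = [math.sqrt(sum(v * v for v in row)) for row in jacobian]
    weak_params = [j for j, n in enumerate(col_norms) if n < tol]
    weak_features = [i for i, n in enumerate(row_norms) if n < tol]
    return weak_params, weak_features


# Three features, three parameters: parameter 1 is invisible, feature 1 is useless.
J = [
    [1.0, 0.0, 0.5],
    [0.0, 0.0, 0.0],
    [2.0, 0.0, 1.0],
]
weak_params, weak_features = weak_parameters_and_features(J)
```

A parameter flagged this way could be held fixed in the current update, and a flagged feature could be dropped from the measurement vector to save computation.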

This approach to vision for autonomous vehicles is basically different from the ones developed in the USA for the ‘Autonomous Land Vehicle’ (ALV) in the framework of the DARPA project ‘Strategic Computing’ from 1983 to 1989 [7.8] and for the ‘Grand’ and ‘Urban Challenges’ in the first decade of this century. The latter, ‘confirmation-type’ vision relies on known information about the environment in which the mission is to be performed. Our ‘scout-type’ vision system is designed to recognize the environment, including the roadways and their actual parameters, all by itself. The differences between the two approaches are sketched in [7.9]. The confirmation-type approach needs recent information on the environment, which makes it less practical for application in unknown areas, after harsh weather conditions, or after major damage due to explosions or earthquakes.

Recently, following the successes of deep-learning algorithms with the computing power now available, this technology has also been applied to the recognition of traffic scenes. However, safety aspects may yield strong arguments against its general application. In the long run, the 4-D approach may still be a good candidate for enabling complex and versatile vision systems for a wide variety of vehicles. Most likely it will be coupled with modern radar systems for better performance under all weather conditions. Developing combined vision/radar perception systems of this type that surpass the performance level of human individuals may take decades or even the full century.

References

[7.1] Friston, K. J. and K. Stephan (2007). Free energy and the brain. Synthese 159(3): 417-458.

[7.2] Hohwy, J. (2009). The hypothesis testing brain: some philosophical applications. In ASCS09: Proc. of the 9th Conf. of the Australasian Society for Cognitive Science. Edited by Wayne Christensen, Elizabeth Schier, and John Sutton. Sydney: Macquarie Centre for Cognitive Science.

[7.3] Friston, K. (2010). The free-energy principle: A unified brain theory? Nature Reviews Neuroscience, 11(2), 127–138.

[7.4] Hohwy, J. (2013). The predictive mind. Oxford: Oxford University Press.

[7.5] Menary, R. (2015b). What? Now: Predictive Coding and Enculturation. In T. Metzinger & J. M. Windt (Eds.), Open MIND. Frankfurt am Main: MIND Group.

[7.6] Menary, R. (2016). Pragmatism and the pragmatic turn in cognitive science. In A. K. Engel, K. Friston, & D. Kragic (Eds.), Where is the action? The pragmatic turn in cognitive science (pp. 219–237). Cambridge, Mass: MIT Press.

[7.7] Fabry, R. E. (2017). Predictive processing and cognitive development. In T. Metzinger & W. Wiese (Eds.) Philosophy and predictive processing.

[7.8] Roland A. and Shiman P. (2002). Strategic Computing: DARPA and the Quest for Machine Intelligence, 1983-1993 (History of Computing). MIT Press.

[7.9] Dickmanns E. D. (2017). Developing the Sense of Vision for Autonomous Road Vehicles at the UniBwM. IEEE Computer, Dec. 2017, Vol. 50, no. 12, Special Issue: SELF-DRIVING CARS, pp. 24–31.