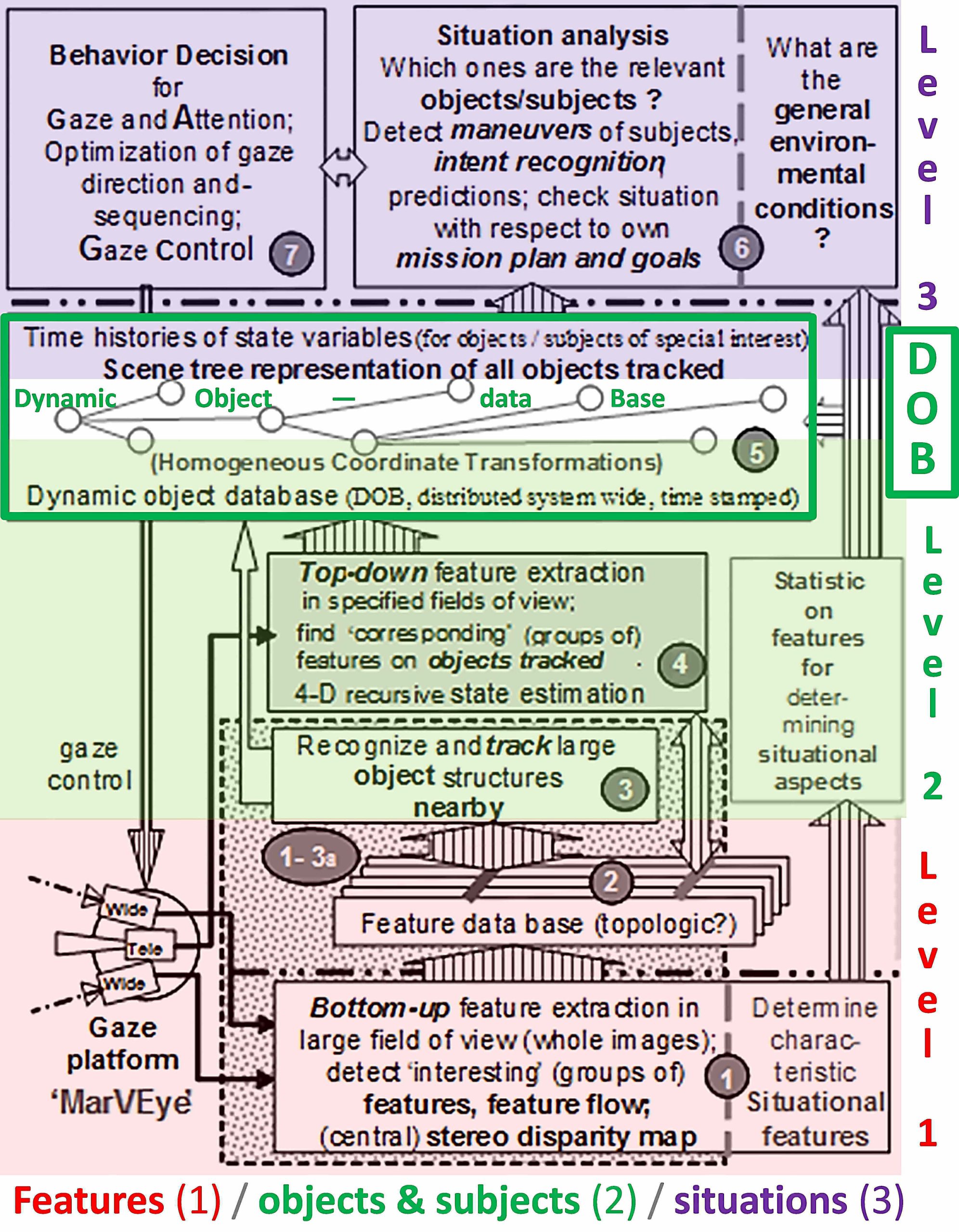

As a first coarse structuring, processing is divided into three levels, taking into account the overall task (mission context) and the specific hardware of the vision system:

Level 1.

Bottom-up feature extraction in predefined image areas with standard algorithms; some grouping of features may already be done on this level. This level profits most from parallel processing with high data rates; word length is not critical at this stage, but high communication bandwidth is important. Special subsystems or boards may constitute a good solution for this task.
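As a minimal illustration of bottom-up extraction restricted to predefined image areas, the sketch below scans a few rectangular windows for grey-value steps. The window layout `(row0, col0, height, width)`, the function name `extract_edges`, and the threshold value are assumptions made for this example; the text does not specify which standard algorithms are used. Each window is processed independently, which is why this level lends itself to parallel hardware.

```python
from typing import List, Tuple

Image = List[List[int]]               # grey values, row-major
Window = Tuple[int, int, int, int]    # (row0, col0, height, width), hypothetical layout


def extract_edges(image: Image, window: Window, threshold: int = 50) -> List[Tuple[int, int]]:
    """Return (row, col) positions inside `window` where the grey-value step
    to the right-hand neighbour is at least `threshold`."""
    r0, c0, h, w = window
    edges = []
    for r in range(r0, r0 + h):
        # Stop one column early: each pixel is compared with its right neighbour.
        for c in range(c0, c0 + w - 1):
            if abs(image[r][c + 1] - image[r][c]) >= threshold:
                edges.append((r, c))
    return edges


# Synthetic 4x6 image with a vertical dark-to-bright edge between columns 2 and 3.
image = [[10, 10, 10, 200, 200, 200] for _ in range(4)]

# Two predefined search windows; the second one does not straddle the edge.
windows = [(0, 0, 2, 6), (2, 0, 2, 3)]

# Windows are independent, so this loop could be distributed over processors.
features = [extract_edges(image, w) for w in windows]
```

Only the first window reports edge features here, since the second one covers a uniformly dark region.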

Level 2.

Top-down feature extraction, with feedback from an object knowledge base for intelligent control of the process, has to be geared to classes of objects and their potential visual appearance in images under a variety of conditions. This knowledge is domain dependent with respect to shape and likely aspect conditions as well as to likely and potential motion over time. On this level, visual interpretation is not confined to the current image (as on level 1) but takes spatio-temporal sequences of images, motion processes, and noise statistics into account. Knowledge about 3-D shape, characteristic motion capabilities, and perspective mapping is exploited to understand shape and motion simultaneously by fitting stored generic models for both shape and motion to the motion process of a single observed object. If tracking errors are sufficiently small, the objects are published to the rest of the system in the “Dynamic Object data-Base (DOB)”. Time histories of state variables may also be accumulated here.
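The gating step described above, publishing an object only while its tracking error stays small, can be sketched as follows. This is an illustration under stated assumptions, not the estimator used in the system: a simple one-dimensional alpha-beta filter with a constant-velocity motion model stands in for the model fit, and the names `Track`, `DynamicObjectDatabase`, `process`, and the threshold `max_error` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Track:
    """Hypothetical 1-D track: position and velocity along one axis."""
    object_id: str
    position: float
    velocity: float
    residuals: List[float] = field(default_factory=list)

    def update(self, measurement: float, dt: float,
               alpha: float = 0.5, beta: float = 0.3) -> None:
        # Predict with the (assumed) constant-velocity motion model.
        predicted = self.position + self.velocity * dt
        # The prediction error drives the correction of both state variables.
        residual = measurement - predicted
        self.position = predicted + alpha * residual
        self.velocity += beta * residual / dt
        self.residuals.append(abs(residual))

    def mean_error(self, last_n: int = 3) -> float:
        recent = self.residuals[-last_n:]
        return sum(recent) / len(recent) if recent else float("inf")


class DynamicObjectDatabase:
    """Stand-in for the DOB: keeps a time history of positions per object."""

    def __init__(self) -> None:
        self.histories: Dict[str, List[float]] = {}

    def publish(self, track: Track) -> None:
        self.histories.setdefault(track.object_id, []).append(track.position)


def process(track: Track, measurements: List[float], dob: DynamicObjectDatabase,
            dt: float = 0.1, max_error: float = 0.5) -> None:
    for z in measurements:
        track.update(z, dt)
        # Publish only while the model explains the measurements well.
        if track.mean_error() <= max_error:
            dob.publish(track)


dob = DynamicObjectDatabase()
# Measurements consistent with the motion model: published every cycle.
process(Track("car", position=0.0, velocity=10.0), [1.0, 2.0, 3.0], dob)
# Measurements the model cannot explain: never published.
process(Track("erratic", position=0.0, velocity=0.0), [5.0, -5.0], dob)
```

After both runs, the database holds a three-entry time history for `car` and no entry for `erratic`.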

Level 3.

On the third level, only object data are treated; this reduces the data rate per perceived object by two to three orders of magnitude, so that handling many objects in parallel becomes possible. Depending on the kind of objects and their relative state, the objects most relevant for mission performance are selected. The own mission goal and planned trajectory, together with the expected motion or behavior of the relevant objects, determine the own behavior decision: whether to continue the behavioral mode currently running or to switch to another behavior.
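A minimal sketch of this selection and decision step is given below. The relevance criterion (time to contact for objects on the own planned path), the mode names, and the threshold `min_ttc_s` are all assumptions chosen for illustration; the text does not prescribe a particular criterion.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ObjectState:
    """Hypothetical object-level record, as level 3 might read it from the DOB."""
    name: str
    distance_m: float          # distance ahead along the own planned trajectory
    closing_speed_mps: float   # positive when the gap is shrinking
    in_own_path: bool


def time_to_contact(obj: ObjectState) -> float:
    if obj.closing_speed_mps <= 0:
        return float("inf")    # gap is constant or growing
    return obj.distance_m / obj.closing_speed_mps


def select_relevant(objects: List[ObjectState], max_objects: int = 2) -> List[ObjectState]:
    """Keep the objects on the own path, most urgent first."""
    candidates = [o for o in objects if o.in_own_path]
    return sorted(candidates, key=time_to_contact)[:max_objects]


def decide(current_mode: str, relevant: List[ObjectState], min_ttc_s: float = 4.0) -> str:
    """Continue the running mode unless the most urgent object forces a switch."""
    if relevant and time_to_contact(relevant[0]) < min_ttc_s:
        return "brake"         # hypothetical alternative behavioral mode
    return current_mode


objects = [
    ObjectState("car", distance_m=80.0, closing_speed_mps=5.0, in_own_path=True),
    ObjectState("truck", distance_m=30.0, closing_speed_mps=10.0, in_own_path=True),
    ObjectState("sign", distance_m=20.0, closing_speed_mps=15.0, in_own_path=False),
]

relevant = select_relevant(objects)
mode_with_truck = decide("lane_following", relevant)
mode_without_truck = decide("lane_following", select_relevant(objects[:1]))
```

Because only a handful of state variables per object are compared, the cost per object is small, which is what makes handling many objects in parallel feasible at this level.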