For the first two decades of dynamic vision (the 1980s and 1990s), efficient extraction of oriented edges together with the gray values of the adjacent regions was the predominant method. The idea of [Kuhnert 1988], based on the Prewitt operator for gradient computation, was developed by [Mysliwetz 1990] into very efficient real-time code written in FORTRAN. Through translations into the transputer language ‘Occam’ (dubbed ‘KRONOS’) [Dickmanns D 1997] and, by S. Fuerst, into the programming language ‘C’ (dubbed ‘CRONOS’), each with some generalizations, this mature code became the standard workhorse of the 4-D approach to dynamic vision using prediction error feedback.

With the increase in computing power around the turn of the century, region-based features were also used [Hofmann 2004]; this in turn paved the way for a new corner feature extractor [Dickmanns, Wuensche 2006; Dickmanns 2008].

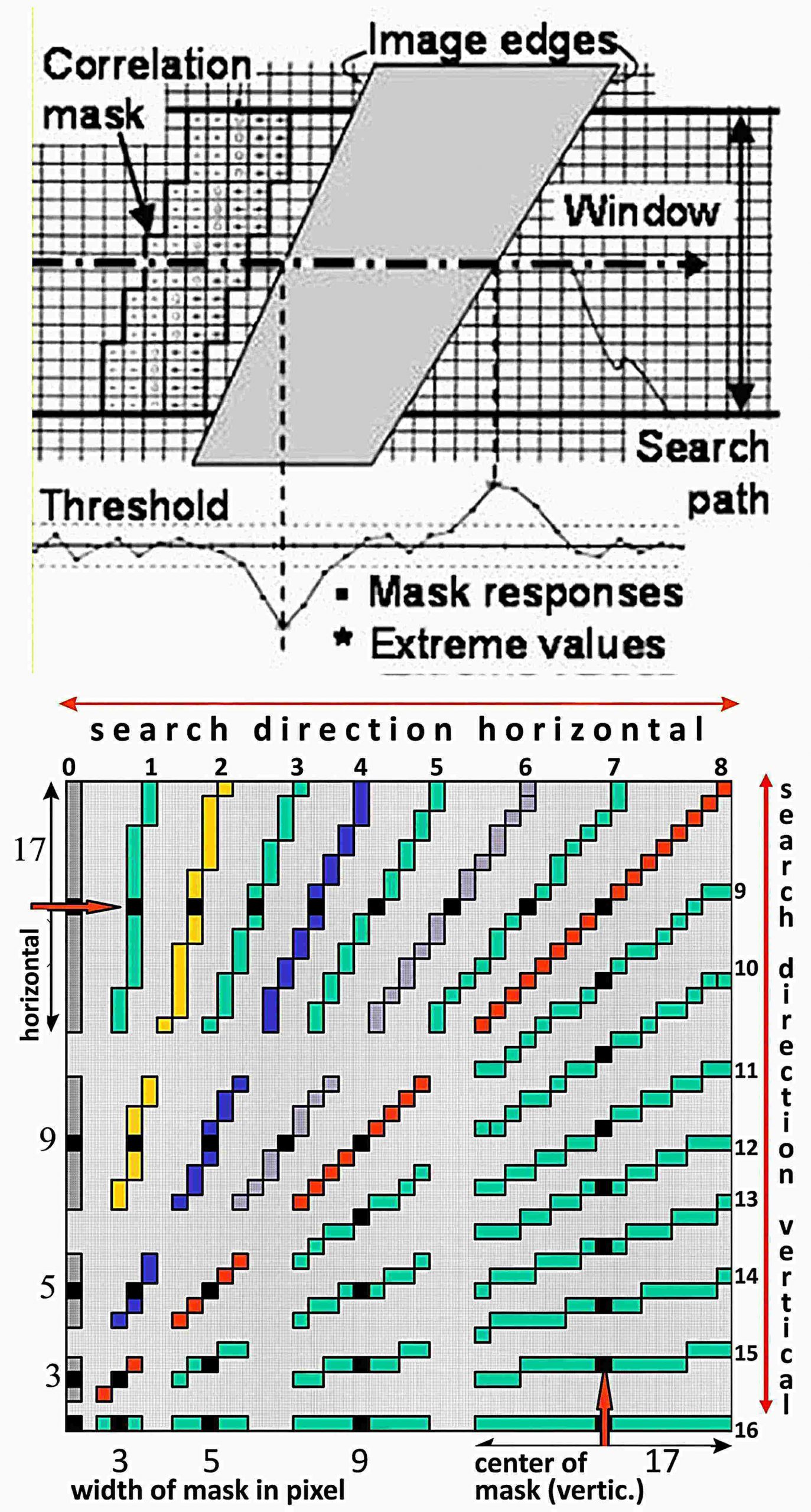

Edge extraction with oriented masks

- The ‘+’ and ‘–’ signs within the mask (left) denote the weights, +1 or -1, with which the pixels of each subfield (nw ∙ nd) are summed.

- First summing, for every possible location in the search range, all pixels that form the mask orientation at that pixel location (see the gray pixels in the lower figure for mask widths from 3 to 17) reduces mask evaluation to a vector operation instead of a matrix operation, independent of mask width (central black value). This corresponds to low-pass filtering in the expected edge direction.

- The four mask parameters (window width nw, width nd of the ‘+’ and ‘–’ subfields, width n0 of the central zero region, and angular orientation) as well as the search range can be changed within one video cycle.

- Parabolic interpolation around an extremal mask response yields the edge location to subpixel accuracy (graph at top left, below the upper pixel grid).

- The average intensity value in one subfield (nw ∙ nd) is the second output.

- The parameterization of the mask and of the search range makes the method ideally suited for expectation-based image sequence evaluation at multiple locations in parallel.

- Nonetheless, appropriately defined masks may also be used for standard feature extraction over larger image regions or the entire image. Vertical or horizontal edges are obtained by summing pixels in just one column or row.

- For orientations around 45 degrees, the search direction is switched from horizontal to vertical (or vice versa), with hysteresis.
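The mask operations listed above can be illustrated with a short sketch. It is not the original FORTRAN, Occam or C code. It assumes a grayscale image stored as a list of rows, a horizontal search along a band of nw rows (near-vertical edges), and an orientation given as a hypothetical `slope` in columns per row. The function names, the mask layout `[-1]*nd + [0]*n0 + [+1]*nd`, the angle convention and the hysteresis band in `search_direction` are illustrative assumptions.

```python
# Sketch of 1-D edge extraction with an oriented ternary mask.
# Assumptions (not from the original text): image = list of rows of gray
# values, horizontal search along a band of nw rows, orientation given as a
# slope in columns per row; all names are hypothetical.

def oriented_sums(img, row0, nw, col_start, col_end, slope=0.0):
    """Sum nw pixels along the expected edge direction for every start column.

    This low-pass filters along the edge and reduces the 2-D mask to a 1-D
    vector, whatever the mask width nw is.
    """
    return [
        sum(img[row0 + r][c + round(r * slope)] for r in range(nw))
        for c in range(col_start, col_end)
    ]


def mask_responses(v, nd, n0):
    """Correlate the vector with the mask [-1]*nd + [0]*n0 + [+1]*nd."""
    length = 2 * nd + n0
    out = []
    for k in range(len(v) - length + 1):
        minus = sum(v[k:k + nd])
        plus = sum(v[k + nd + n0:k + length])
        out.append(plus - minus)
    return out


def extract_edge(img, row0, nw, nd, n0, col_start, col_end, slope=0.0):
    """Return (subpixel edge column, normalized response, subfield mean)."""
    v = oriented_sums(img, row0, nw, col_start, col_end, slope)
    resp = mask_responses(v, nd, n0)
    k = max(range(len(resp)), key=lambda i: abs(resp[i]))  # extremal response
    # Parabolic interpolation through the extremum and its two neighbours.
    offset = 0.0
    if 0 < k < len(resp) - 1:
        rm, r0, rp = resp[k - 1], resp[k], resp[k + 1]
        denom = rm - 2 * r0 + rp
        if denom != 0:
            offset = 0.5 * (rm - rp) / denom
    center = col_start + k + (2 * nd + n0 - 1) / 2  # mask centre in columns
    norm = nw * nd  # number of pixels per subfield
    mean_minus = sum(v[k:k + nd]) / norm  # second output: subfield average
    return center + offset, resp[k] / norm, mean_minus


def search_direction(angle_from_vertical_deg, current, hysteresis_deg=5.0):
    """Switch between row ('horizontal') and column ('vertical') search near
    45 degrees, with a hysteresis band to avoid toggling."""
    a = abs(angle_from_vertical_deg)
    if current == "horizontal" and a > 45.0 + hysteresis_deg:
        return "vertical"
    if current == "vertical" and a < 45.0 - hysteresis_deg:
        return "horizontal"
    return current
```

For a vertical step edge between columns 4 and 5 (gray values 10 and 50, five rows), `extract_edge(img, 0, 3, 2, 1, 0, 10)` places the edge at column 4.5, with a normalized response of 40 and a subfield mean of 10. Summing along the orientation first is what keeps the inner correlation one-dimensional.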

References

Mysliwetz B, Dickmanns ED (1986). A Vision System with Active Gaze Control for real-time Interpretation of Well Structured Dynamic Scenes. In: Hertzberger LO (ed) (1986) Proceedings of the First Conference on Intelligent Autonomous Systems (IAS-1), Amsterdam, pp 477–483

Kuhnert KD (1988). Zur Echtzeit-Bildfolgenanalyse mit Vorwissen. Dissertation, UniBwM / LRT

Mysliwetz B (1990). Parallelrechner-basierte Bildfolgen-Interpretation zur autonomen Fahrzeugsteuerung. Dissertation, UniBwM / LRT

Dickmanns D (1997). Rahmensystem für visuelle Wahrnehmung veränderlicher Szenen durch Computer. Dissertation, UniBwM, INF. Also: Shaker Verlag, Aachen, 1998

Dickmanns ED (2007). Dynamic Vision for Perception and Control of Motion. Springer, London (Section 5.2)

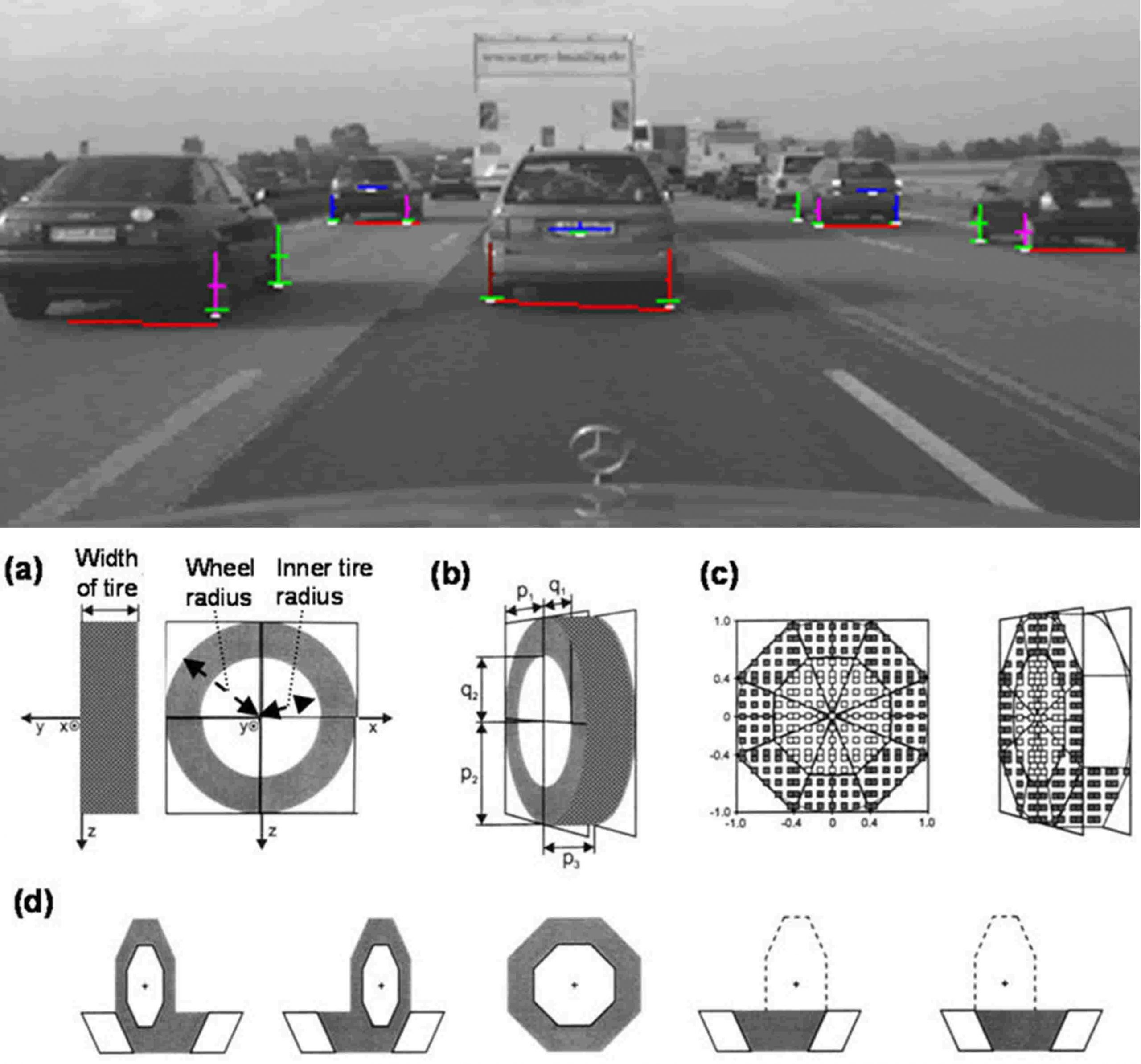

Region-based features

Check the planarity of the intensity distribution in a rectangular mask by differencing the diagonal and counter-diagonal sums of the mask elements (mels), which yields ε:

|ε| > threshold ⇒ nonplanar
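A minimal sketch of the planarity test, assuming (this is not stated above) a mask of 2 × 2 mels, each mel being the mean of a rectangular block of `mel_h` × `mel_w` pixels; `mel_means`, `nonplanarity`, `is_nonplanar` and `thresh` are hypothetical names. For an intensity surface that is linear in row and column, the two diagonal sums are equal, so ε vanishes.

```python
# Sketch of the nonplanarity check on a 2 x 2 mask of mels (an assumption).

def mel_means(img, row0, col0, mel_h, mel_w):
    """Mean gray values (m11, m12, m21, m22) of the four mels of the mask."""
    def mean(r, c):
        block = [img[r + i][c + j] for i in range(mel_h) for j in range(mel_w)]
        return sum(block) / len(block)
    return (mean(row0, col0), mean(row0, col0 + mel_w),
            mean(row0 + mel_h, col0), mean(row0 + mel_h, col0 + mel_w))


def nonplanarity(m11, m12, m21, m22):
    """epsilon: diagonal sum minus counter-diagonal sum of the four mels."""
    return (m11 + m22) - (m12 + m21)


def is_nonplanar(img, row0, col0, mel_h, mel_w, thresh):
    """True if |epsilon| exceeds the threshold."""
    return abs(nonplanarity(*mel_means(img, row0, col0, mel_h, mel_w))) > thresh
```

A linear ramp such as 10 + 2·row + 3·column gives ε = 0, whereas a patch with only its lower-right quadrant bright (a corner-like pattern) gives a large |ε|.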

- Determine averaged horizontal and vertical intensity gradients from grouped mels as shown in the top part of the figure; the optimal mel size depends on the problem to be solved. Determine gradient magnitude and direction, and merge areas with similar parameters.

- Shift the mask in the row direction with an (adaptable) step size δy; points with extreme gradient values yield edge elements (green edges in the figure; red for vertical search).

- After finishing the row, shift the mask in the column direction by one mel width and repeat the procedure.
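The gradient and scanning steps above can be sketched as follows, again assuming 2 × 2 mels of `mel_h` × `mel_w` pixels. The gradient formulas (difference of mel-column or mel-row means divided by the mel spacing), the local-maximum test and the `min_grad` threshold are illustrative assumptions; region merging and the column (vertical) search are left out.

```python
import math

# Sketch of mel-based gradients and row scanning (assumed 2 x 2 mels).


def mel_means(img, row0, col0, mel_h, mel_w):
    """Mean gray values (m11, m12, m21, m22) of a 2 x 2 mask of mels."""
    def mean(r, c):
        block = [img[r + i][c + j] for i in range(mel_h) for j in range(mel_w)]
        return sum(block) / len(block)
    return (mean(row0, col0), mean(row0, col0 + mel_w),
            mean(row0 + mel_h, col0), mean(row0 + mel_h, col0 + mel_w))


def mel_gradients(img, row0, col0, mel_h, mel_w):
    """Averaged gradients gy (along a row), gz (down a column),
    gradient magnitude and direction (radians)."""
    m11, m12, m21, m22 = mel_means(img, row0, col0, mel_h, mel_w)
    gy = ((m12 + m22) - (m11 + m21)) / (2 * mel_w)
    gz = ((m21 + m22) - (m11 + m12)) / (2 * mel_h)
    return gy, gz, math.hypot(gy, gz), math.atan2(gz, gy)


def row_scan(img, row0, mel_h, mel_w, step, min_grad):
    """Shift the mask along one row by `step` pixels (delta y) and return the
    column positions of local maxima of |gy| that reach min_grad."""
    width = len(img[0])
    centers, mags = [], []
    for col0 in range(0, width - 2 * mel_w + 1, step):
        gy = mel_gradients(img, row0, col0, mel_h, mel_w)[0]
        centers.append(col0 + mel_w - 0.5)  # centre of the mask in pixels
        mags.append(abs(gy))
    return [centers[i] for i in range(1, len(mags) - 1)
            if mags[i] >= min_grad
            and mags[i] > mags[i - 1] and mags[i] >= mags[i + 1]]


def scan_image(img, mel_h, mel_w, step, min_grad):
    """Repeat the row scan, shifting the mask down by one mel each time."""
    edges = []
    for row0 in range(0, len(img) - 2 * mel_h + 1, mel_h):
        for col in row_scan(img, row0, mel_h, mel_w, step, min_grad):
            edges.append((row0 + mel_h - 0.5, col))
    return edges
```

On a 4 × 12 image with a vertical step between columns 5 and 6, `scan_image(img, 2, 2, 1, 1.0)` returns a single edge element at (row 1.5, column 5.5).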

The lower picture on the right has been reconstructed purely from the extracted feature data (no original image data are shown):

- linearly shaded intensity regions (in gray),

- regions with nonplanar intensity distribution (white),

- edges detected in row search (green),

- edges detected in column search (red),

- corners determined in nonplanar regions (blue crosses).
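Rendering a linearly shaded region from feature data alone can be sketched with a planar intensity model. The sketch assumes, hypothetically, that each region is stored as its mean intensity, its centre, and the averaged gradients gy (per column) and gz (per row); the data layout actually used for the figure is not given here.

```python
# Sketch: rebuild a linearly shaded patch from assumed planar-region features.

def render_planar_patch(mean, center_row, center_col, gy, gz, rows, cols):
    """Return I(r, c) = mean + gy * (c - center_col) + gz * (r - center_row)
    for every pixel in the given row and column index ranges."""
    return [
        [mean + gy * (c - center_col) + gz * (r - center_row) for c in cols]
        for r in rows
    ]
```

With mean 17.5, centre (1.5, 1.5), gy = 3 and gz = 2 over a 4 × 4 patch, this reproduces the ramp 10 + 2·row + 3·column.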

Wheel recognition at oblique angles with special masks has been investigated by [Hofmann 2004] (see section A.3.6.2c).

References

Hofmann U (2004). Zur visuellen Umfeldwahrnehmung autonomer Fahrzeuge. Dissertation, UniBwM / LRT

Dickmanns ED, Wuensche HJ (2006). Nonplanarity and efficient multiple feature extraction. Proc. Int. Conf. on Vision and Applications (Visapp), Setubal (8 pages)

Dickmanns ED (2007). Dynamic Vision for Perception and Control of Motion. Springer, London (Section 5.3)

Dickmanns ED (2008). Corner Detection with Minimal Effort on Multiple Scales. Proc. Int. Conf. on Vision and Applications (Visapp), Madeira (8 pages)