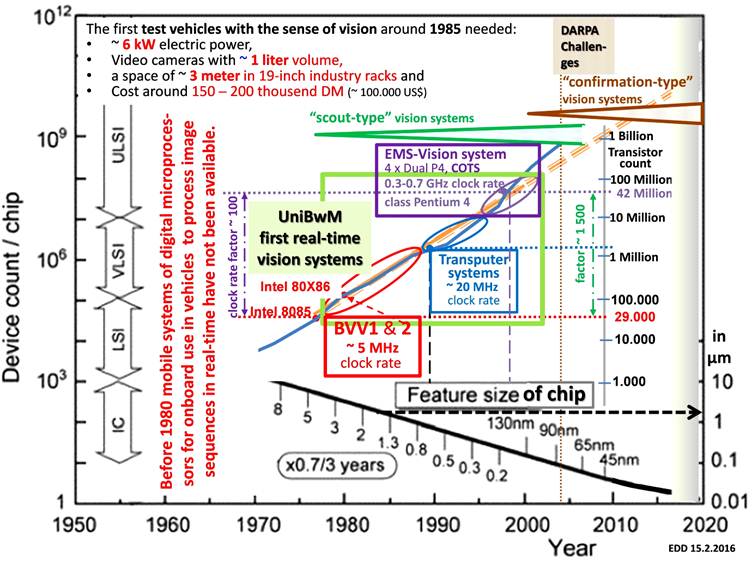

The following figure gives background information on the state of development of digital microprocessor hardware towards the end of the last century. Before 1980, mobile digital real-time vision systems were simply impossible. Our custom-designed “window system BVV 1” of 1981, working on half-frames (video fields) of 360 x 120 pixels at ~10 Hz, had a clock rate of ~5 MHz. The second-generation systems of the first half of the 1990s, based on ‘transputers’ with four direct links to neighboring processors, worked with a clock rate of around 20 MHz. Towards the end of the 1990s, our third-generation system based on Pentium-4, dubbed the “Expectation-based, Multi-focal, Saccadic” (EMS) vision system, approached a clock rate of 1 GHz (see the center of the following figure, within the green rectangle).

In the same time span of two decades, the transistor count per microprocessor increased by a factor of ~1500 (center right in the figure). The feature size on chips decreased by about two orders of magnitude (see lower right corner). As a result, the physical size of vision systems (characterized by the four bullets in the upper left corner) could be shrunk to fit into even small passenger cars.
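To put these growth factors into perspective, the following minimal sketch converts them into approximate doubling (or halving) times. It assumes steady exponential growth over exactly 20 years, which is a simplification; the function name `doubling_time_years` is hypothetical, and the input numbers are the rounded values quoted in the text (clock rate from ~5 MHz to ~1 GHz, transistor count times ~1500, feature size divided by ~100).

```python
import math


def doubling_time_years(growth_factor: float, span_years: float) -> float:
    """Years needed to double, assuming steady exponential growth."""
    return span_years / math.log2(growth_factor)


# Assumption: "two decades" is taken as exactly 20 years.
SPAN_YEARS = 20.0

# Rounded figures quoted in the text.
clock_factor = 1e9 / 5e6        # ~5 MHz -> ~1 GHz, i.e. a factor of 200
transistor_factor = 1500.0      # transistor count per microprocessor
feature_factor = 100.0          # feature size shrank by ~2 orders of magnitude

clock_doubling = doubling_time_years(clock_factor, SPAN_YEARS)
transistor_doubling = doubling_time_years(transistor_factor, SPAN_YEARS)
feature_halving = doubling_time_years(feature_factor, SPAN_YEARS)

print(f"clock rate doubles about every {clock_doubling:.1f} years")
print(f"transistor count doubles about every {transistor_doubling:.1f} years")
print(f"feature size halves about every {feature_halving:.1f} years")
```

Under these assumptions, the transistor count doubles roughly every two years, while the clock rate doubles somewhat more slowly.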

Our vision systems did not need precise localization by other means or highly precise maps. By perceiving the environment through differential-geometry models, they were able to recognize objects in the environment with sufficient precision by feedback of prediction errors (dubbed “scout-type vision” here, see the green angle in the upper center of the figure).

A new type of vision system developed with the DARPA Challenges from 2002 to 2007 (see the brown angle in the upper right corner of the figure): each Challenge consisted of performing maintenance missions in well explored and prepared environments. The main sensor used was a revolving laser range finder on top of the vehicle that provided (non-synchronized) distance images for the near range (~60 m) ten times per second. After synchronization and error correction, nice 3-D displays could be generated. For easy interpretation, both highly precise maps with stationary objects and precise localization of the vehicle's own position by GPS sensors (supported by inertial and odometer signals) were required. Some groups demonstrated mission performance without any video signal interpretation. Because of the heavy dependence on previously collected information on the environment, this approach is dubbed “confirmation-type vision” here (brown angle). The Google systems developed later and several other approaches world-wide followed this track for more than a decade, though the need for more conventional vision with cameras was soon appreciated, and work in this direction is under way.

Finally, the computing power and sensors available in the not too distant future will allow a merger of both approaches for flexible and efficient use in various environments. On rough ground and for special missions (e.g. exploratory, cross-country rallies, military), active gaze control with a high-resolution central field of view may become necessary. Vision systems allowing the complexity required have been discussed in [79 content chapter 12; 84; 85 pdf; 88c) pdf].

At UniBw Munich, towards the end of the 1990s, a new branch of advanced studies alongside Aero-Space Engineering (Luft- und Raumfahrttechnik, LRT) was proposed in the framework of increasing the number of options for students in the second half of their studies: “Technology of Autonomous Systems” (Technik Autonomer Systeme, TAS), see page 123 of [63 Cover and content]. An institute of the same name was founded early in the first decade of the new century; the head of these activities was appointed in 2006: the former PhD student Hans-Joachim Wuensche [D3 Kurzfassung], who had gained long industrial experience on all levels in the meantime.

He has built up the institute TAS and continues top-level research in autonomous driving for ground vehicles. Details may be found on the Website