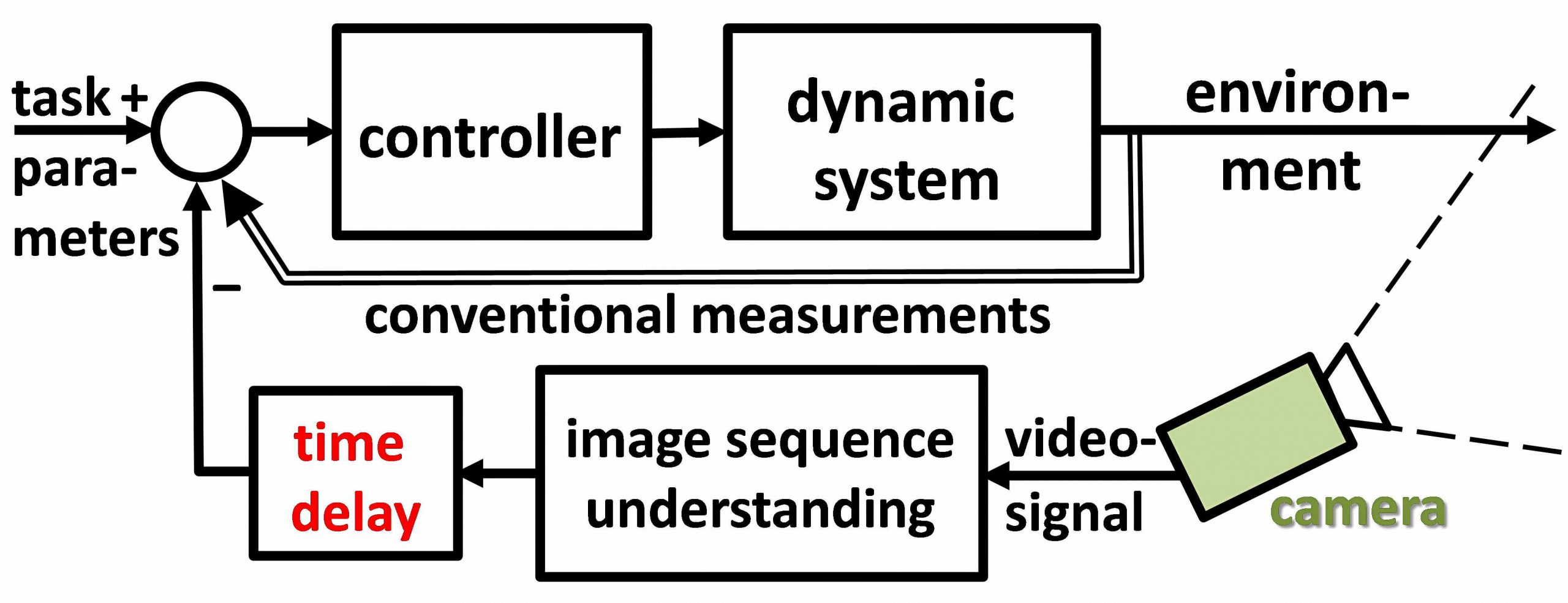

M.2.1. State feedback control (‘fb’)

Besides conventional feedback signals proven in the task domain, signals derived from vision are also used to compute the overall feedback control signals; the time delay in the visual path has to be taken into account. The 4-D approach to dynamic vision directly provides the state variables needed for state feedback. Typical applications: road running, distance keeping.

Integral terms may be included in the controller to improve steady-state accuracy (e.g. of the lateral position in the lane).
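The following is a minimal sketch of such a control law for lateral guidance, not the controller used in the vehicles. It assumes a two-element state (lateral offset and heading error), a small-angle kinematic model to predict the vision-derived state forward over the visual delay, and hypothetical gains `k_y`, `k_psi`, and `k_i`; all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class LateralState:
    y: float    # lateral offset from the lane center [m]
    psi: float  # heading error relative to the lane [rad]


def predict_over_delay(state, speed, delay):
    """Advance a delayed vision estimate to 'now' (assumed model: y_dot = speed * psi)."""
    return LateralState(y=state.y + speed * state.psi * delay, psi=state.psi)


class StateFeedback:
    """Linear state feedback with an integral term on the lateral offset."""

    def __init__(self, k_y, k_psi, k_i, dt):
        self.k_y, self.k_psi, self.k_i, self.dt = k_y, k_psi, k_i, dt
        self.integral = 0.0  # accumulated lateral offset [m*s]

    def step(self, measured, speed, delay):
        s = predict_over_delay(measured, speed, delay)  # compensate the visual delay
        self.integral += s.y * self.dt                  # integral term removes static offset
        return -(self.k_y * s.y + self.k_psi * s.psi + self.k_i * self.integral)
```

A call such as `StateFeedback(0.1, 0.5, 0.01, dt=0.04).step(LateralState(0.0, 0.1), speed=20.0, delay=0.1)` first shifts the estimated offset by `speed * psi * delay` before applying the gains, so the command reflects where the vehicle is now rather than where it was when the image was taken.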

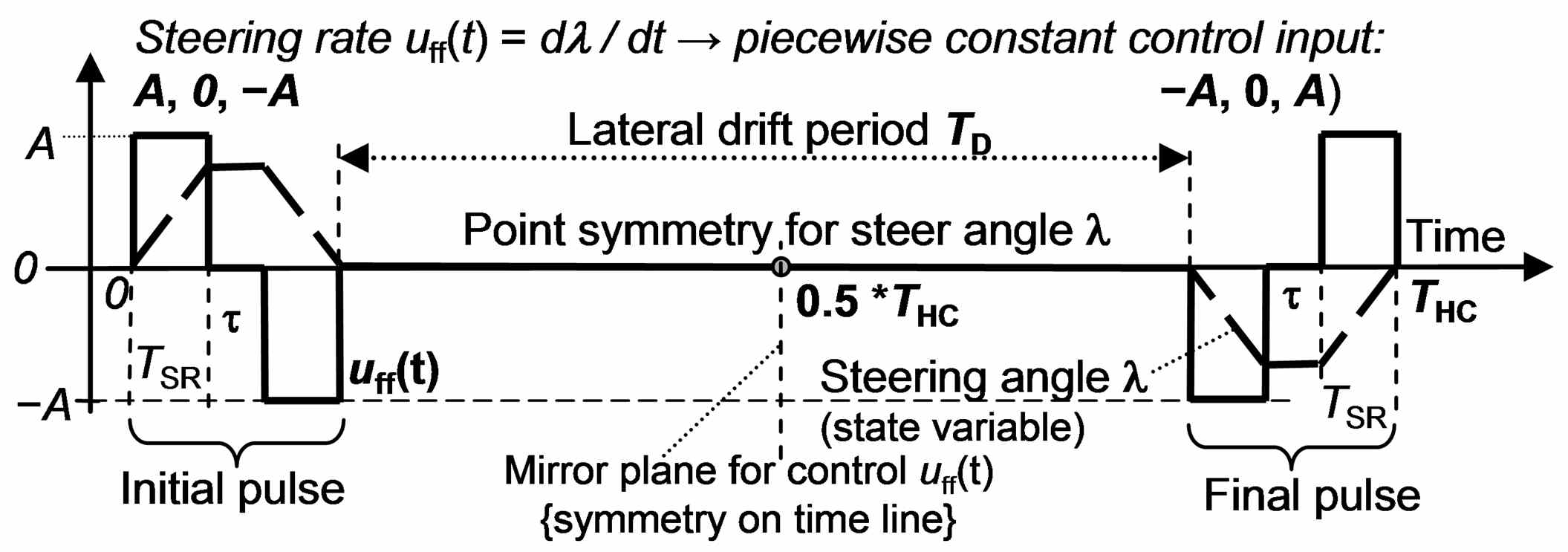

M.2.2. Feed-forward control for ‘maneuvers’ (‘ff’)

When trigger conditions are met, a maneuver is initiated by starting the output of a parameterized control time history that is known to transfer the system from its estimated initial state into a desired final state. (No path planning is done for the individual case; the optimal maneuver is ‘known’ as a skill.)

Typical: lane change, curve driving, turn-off, sudden stop; the parameters have to be adapted to the given situation (lane width, speed, etc.).
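As an illustration of a parameterized control time history, the sketch below uses a single-period sinusoidal lateral-acceleration profile for a lane change. This profile is an assumption chosen for simplicity, not the maneuver representation of the original system. Its amplitude follows from the lane width `w` and duration `T` as `A = 2*pi*w / T**2`, so that integrating twice yields a lateral shift of exactly `w` with zero final lateral velocity.

```python
import math


def duration_for_max_accel(lane_width, a_max):
    """Adapt the maneuver duration so the peak lateral acceleration equals a_max."""
    return math.sqrt(2.0 * math.pi * lane_width / a_max)


def lane_change_profile(lane_width, duration):
    """Return a_y(t), a stored control time history scaled to the situation."""
    amplitude = 2.0 * math.pi * lane_width / duration ** 2

    def a_y(t):
        if t < 0.0 or t > duration:
            return 0.0  # outside the maneuver there is no feed-forward output
        return amplitude * math.sin(2.0 * math.pi * t / duration)

    return a_y


def simulate_lateral_shift(a_y, duration, dt=0.001):
    """Integrate the profile twice; returns (final offset, final lateral velocity)."""
    y = v = 0.0
    for k in range(int(round(duration / dt))):
        v += a_y(k * dt) * dt
        y += v * dt
    return y, v
```

For a lane width of 3.5 m and a duration of 4 s, `simulate_lateral_shift(lane_change_profile(3.5, 4.0), 4.0)` ends very close to a 3.5 m offset with near-zero lateral velocity; no path is planned, only the two parameters change from one situation to the next.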

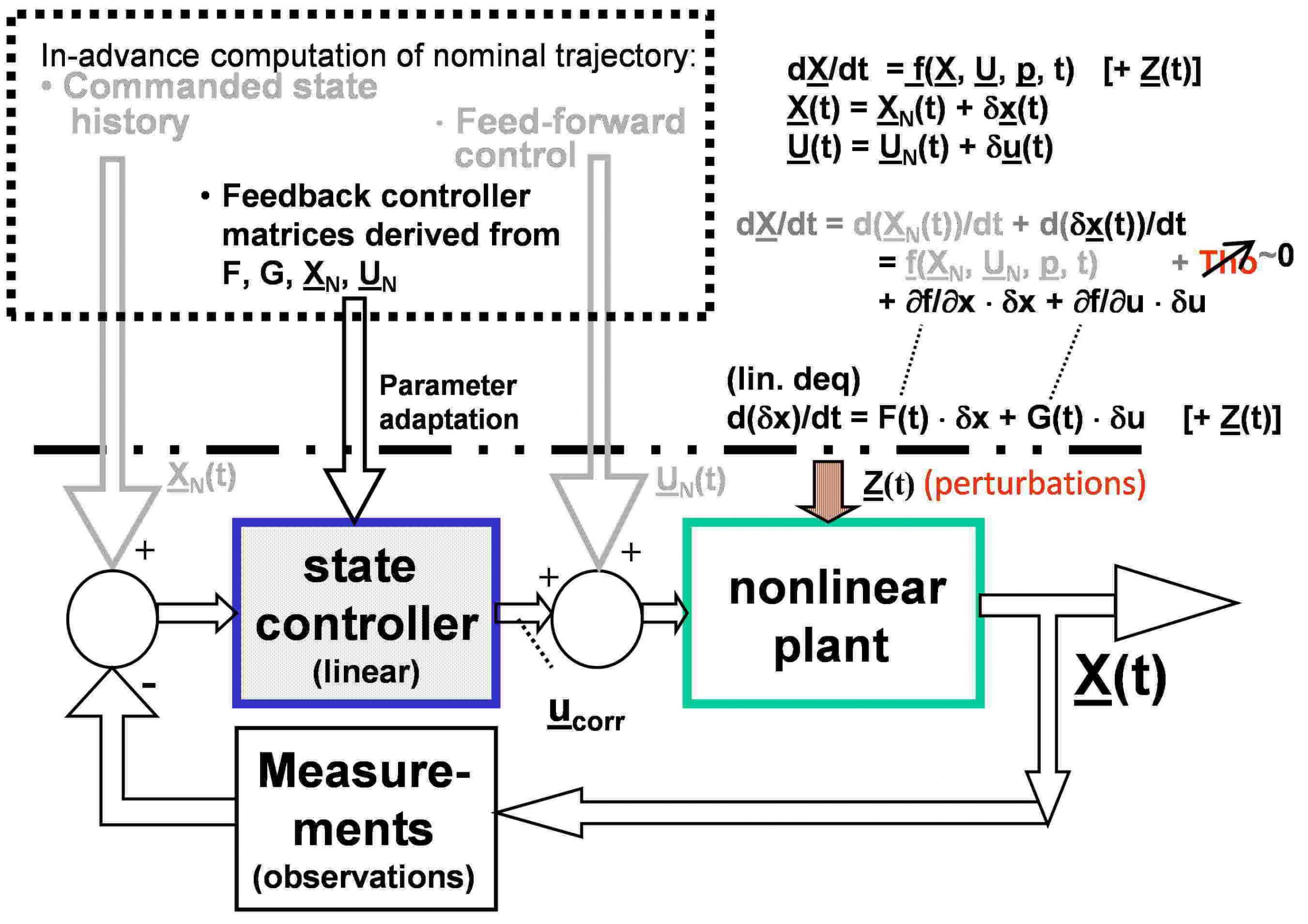

M.2.3. Superposition of feed-forward and feedback for real-world situations:

- Control of a nonlinear system is decomposed into two parts. The first is a nominal nonlinear feed-forward control task, for which optimal solutions can be pre-computed by optimal control theory (calculus of variations).

- To handle deviations from the nominal model caused by perturbations during the actual maneuver, the parameters of a (time-varying) linear system are also computed; these allow linear feedback control theory to be applied to counteract the deviations in the actual case.

- It depends on the task at hand whether the feedback component is applied right from the beginning or phased in towards the end of a maneuver.
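The superposition can be sketched on a deliberately simple plant. The example below assumes a double integrator for lateral motion, a sinusoidal nominal lane change, a constant disturbance acceleration, and constant feedback gains `k_y` and `k_v` (the text speaks of a time-varying linear system; constant gains are a simplification). The `start_fraction` parameter is hypothetical: `None` applies feedback from the beginning, a value between 0 and 1 ramps it in linearly from that fraction of the maneuver to its end.

```python
import math


def nominal(t, width, T):
    """Nominal maneuver for 0 <= t <= T: returns (u_ff, y_nom, v_nom)."""
    A = 2.0 * math.pi * width / T ** 2
    w = 2.0 * math.pi / T
    u_ff = A * math.sin(w * t)
    v_nom = A / w * (1.0 - math.cos(w * t))
    y_nom = A / w * (t - math.sin(w * t) / w)
    return u_ff, y_nom, v_nom


def feedback_weight(t, T, start_fraction):
    """1.0 throughout if start_fraction is None, else a linear ramp up to 1.0 at T."""
    if start_fraction is None:
        return 1.0
    t0 = start_fraction * T
    return 0.0 if t <= t0 else min(1.0, (t - t0) / (T - t0))


def final_error(width=3.5, T=4.0, disturbance=0.2, k_y=4.0, k_v=4.0,
                start_fraction=None, use_feedback=True, dt=0.001):
    """Simulate u = u_ff + weight * u_fb and return the final lateral error [m]."""
    y = v = 0.0
    for k in range(int(round(T / dt))):
        t = k * dt
        u_ff, y_nom, v_nom = nominal(t, width, T)
        u_fb = -(k_y * (y - y_nom) + k_v * (v - v_nom)) if use_feedback else 0.0
        u = u_ff + feedback_weight(t, T, start_fraction) * u_fb
        v += (u + disturbance) * dt  # the disturbance is unknown to the controller
        y += v * dt
    return y - width
```

With these assumed numbers, pure feed-forward drifts by about 1.6 m under the disturbance, while the superposed feedback keeps the final error to a few centimeters; phasing the feedback in at mid-maneuver lies between the two.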

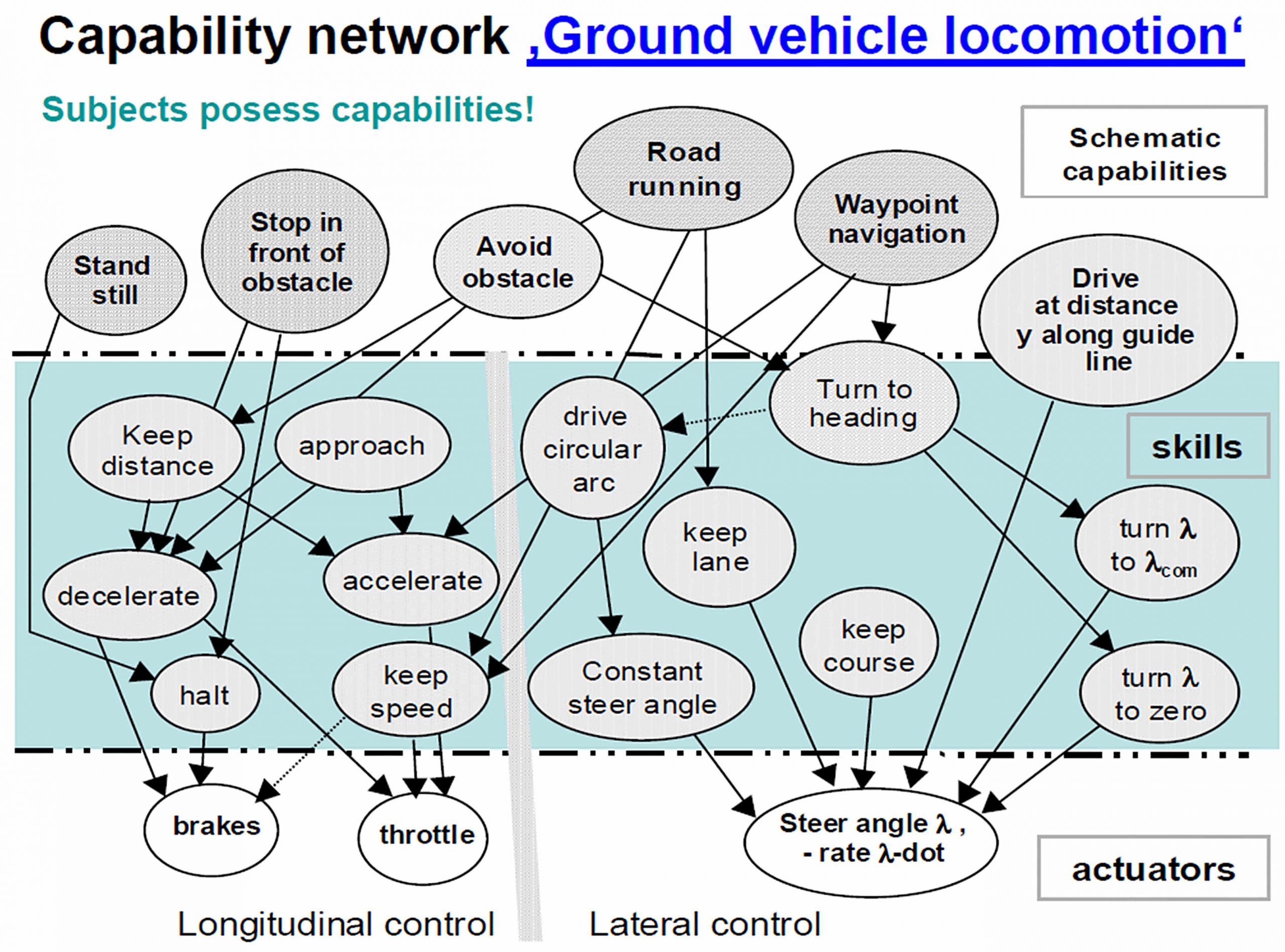

M.2.4. Capability network for locomotion

- All schematic capabilities (top level) are realized via skills (medium level) that need actuators for physical realization (bottom level).

- When a schematic capability is intended for application, the actual availability of all lower capabilities needed is checked; this includes proper functioning of the actuators.

- Realizing such a network in a real vehicle is rather involved; in particular, capabilities that need both longitudinal control (left part) and lateral control (right part) require careful timing of trigger points, gain balancing, and monitoring of temporal progress against the nominal case.
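The availability check described above can be sketched as a recursive walk over a dependency graph. The node names and the dictionary layout below are hypothetical and much smaller than the real network; the sketch also assumes the graph is acyclic.

```python
# Hypothetical three-level network: schematic capability -> skills -> actuators.
REQUIRES = {
    "lane_change": ["lateral_guidance", "speed_control"],  # schematic capability
    "lateral_guidance": ["steering"],                      # skill
    "speed_control": ["throttle", "brake"],                # skill
}


def is_available(name, requires, actuator_ok):
    """A node is available if all lower-level nodes it needs are available."""
    if name in actuator_ok:           # bottom level: actuator self-check result
        return actuator_ok[name]
    needed = requires.get(name)
    if not needed:                    # unknown node: treat as unavailable
        return False
    return all(is_available(n, requires, actuator_ok) for n in needed)


actuators = {"steering": True, "throttle": True, "brake": False}
print(is_available("lane_change", REQUIRES, actuators))       # False: brake has failed
print(is_available("lateral_guidance", REQUIRES, actuators))  # True
```

In this toy network a failed brake actuator disables the lane change, which needs both control branches, while pure lateral guidance remains available.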

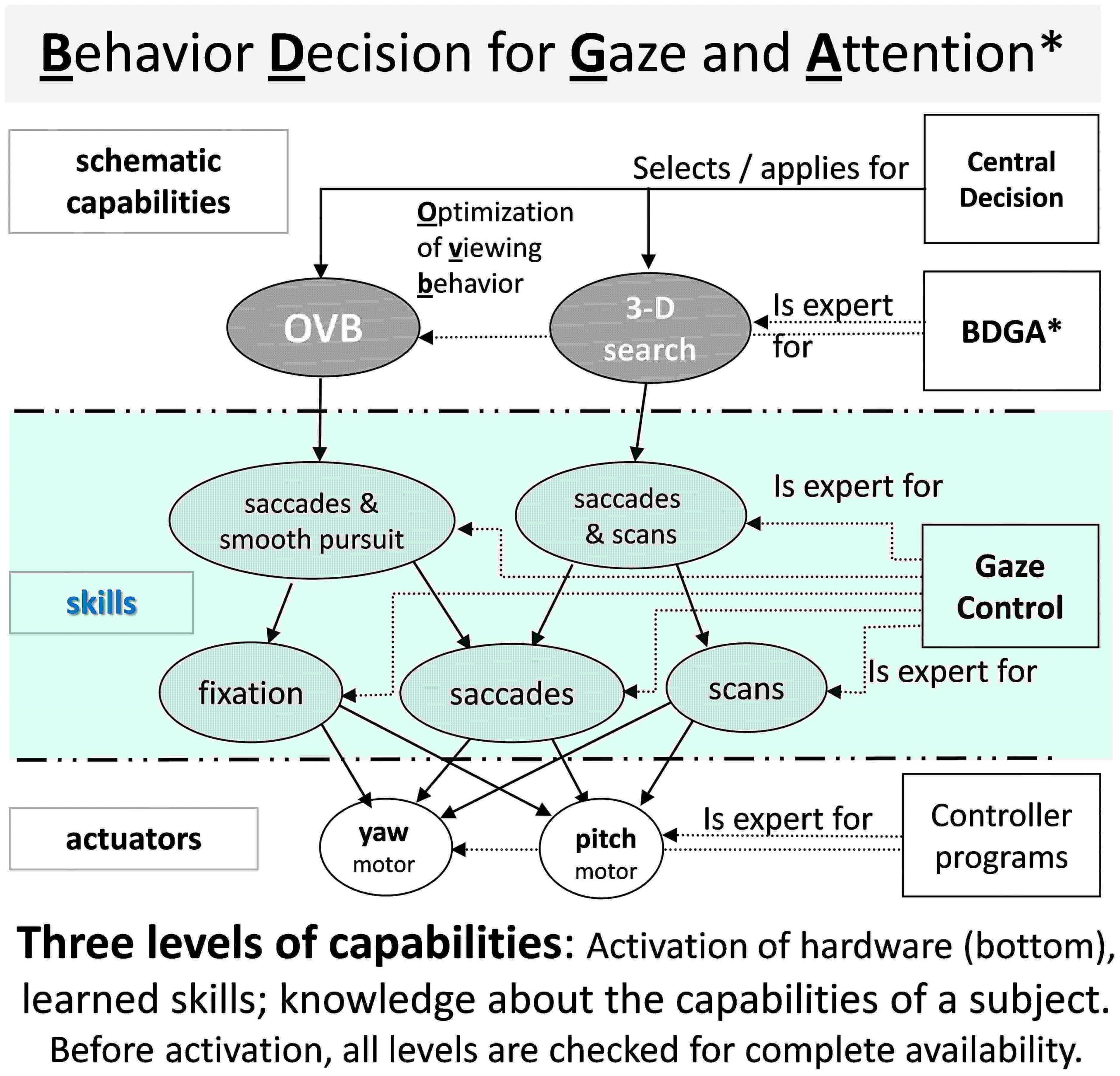

M.2.5. Capability network for gaze and attention

All behavioral patterns boil down to temporal activation of two actuators, the yaw (pan) and the pitch (tilt) motor for gaze control.

There are three basic skills:

- scans (change gaze direction at constant speed),

- fast saccades for quick change of the gaze direction,

- and fixation on sets of visual features (objects).

All other skills and schematic capabilities are built from these basic skills through proper triggering and transitions between them.
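A minimal single-axis sketch of the three basic skills and one transition between them follows. The rate limits, the fixation gain, and the tolerance that triggers the switch from saccade to fixation are assumed values, and the proportional fixation law is a stand-in for whatever feature-tracking law the real platform uses.

```python
import math
from enum import Enum


class GazeSkill(Enum):
    SCAN = "scan"
    SACCADE = "saccade"
    FIXATION = "fixation"


SCAN_RATE = 0.2      # rad/s, assumed constant scan speed
SACCADE_RATE = 5.0   # rad/s, assumed maximum platform rate
FIXATION_GAIN = 4.0  # 1/s, assumed proportional gain
FIXATION_TOL = 0.01  # rad, assumed trigger threshold for saccade -> fixation


def rate_command(skill, error, dt):
    """Angular rate command for one axis (pan or tilt) under the active skill."""
    if skill is GazeSkill.SCAN:
        return SCAN_RATE                              # constant-speed sweep
    if skill is GazeSkill.SACCADE:
        rate = min(SACCADE_RATE, abs(error) / dt)     # do not overshoot in one step
        return math.copysign(rate, error)
    return FIXATION_GAIN * error                      # hold gaze on the feature set


def next_skill(skill, error):
    """Transition: a saccade hands over to fixation once the target is reached."""
    if skill is GazeSkill.SACCADE and abs(error) < FIXATION_TOL:
        return GazeSkill.FIXATION
    return skill


def saccade_then_fixate(angle, target, steps, dt=0.01):
    """Composite behavior built from two basic skills and one trigger."""
    skill = GazeSkill.SACCADE
    for _ in range(steps):
        error = target - angle
        skill = next_skill(skill, error)
        angle += rate_command(skill, error, dt) * dt
    return angle, skill
```

Calling `saccade_then_fixate(0.0, 0.5, 50)` drives the axis to the target at the saccade rate and then hands over to fixation, which is the kind of triggered composition the text describes.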
