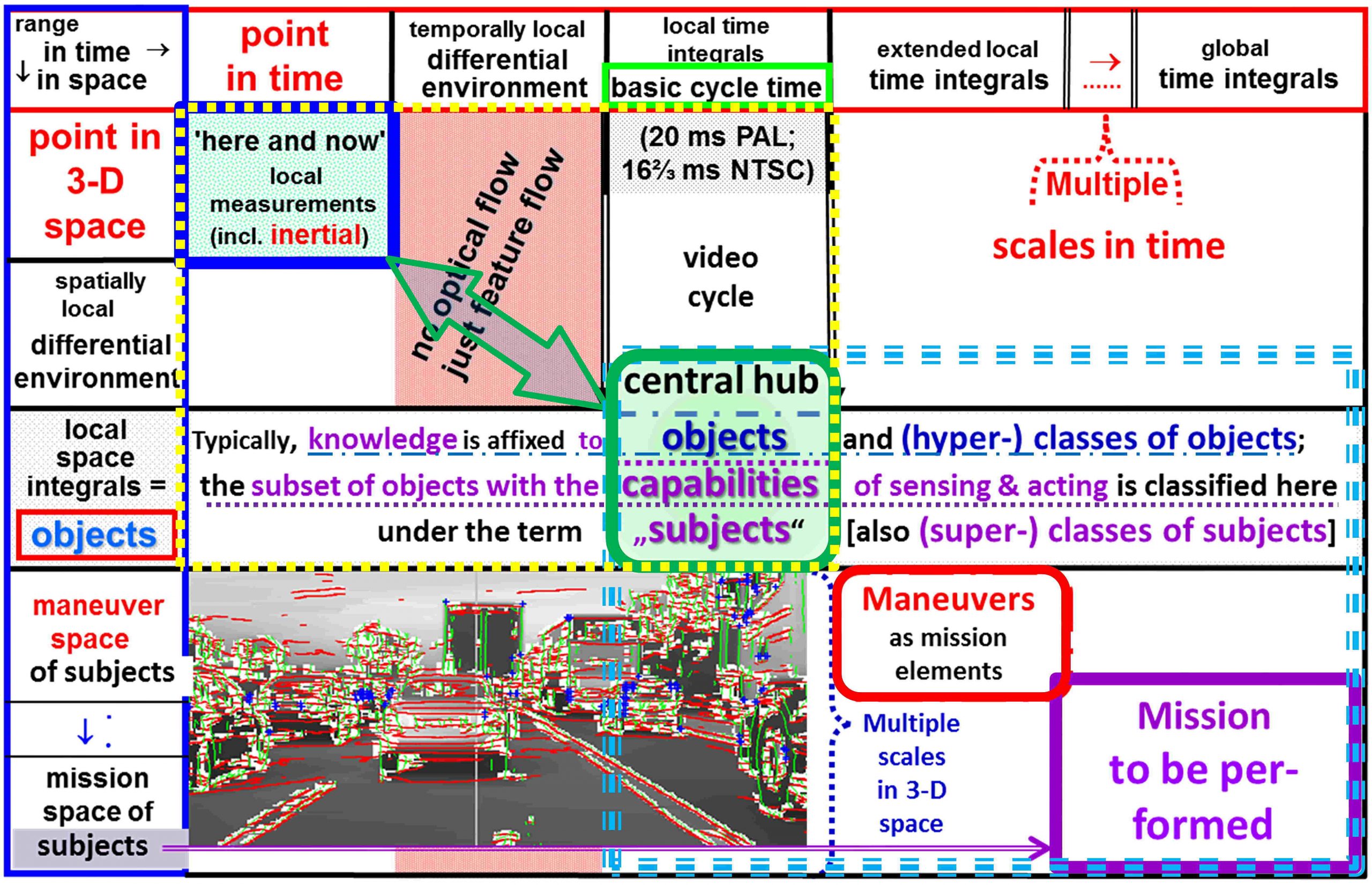

The table visualizes the dimensions for cognitive dynamic scene understanding:

Time runs horizontally, from the point ‘now’ (second column from the left) through the local time integral (one video cycle, column 4) to the longer time spans set by the overall mission or task (right).

3-D space is scaled similarly in the vertical direction, from the point ‘here’ (second row from the top) through its differential environment (row 3) and the spatial extent of a single object (row 4) to the larger spaces set by the overall mission or task (bottom right):

- Time derivatives (column 3) are deliberately avoided in the 4-D approach (as in recursive estimation in general), since differentiation amplifies noise at higher frequencies: d/dt (A ∙ sin(ωt)) = A ∙ ω ∙ cos(ωt), so the amplitude of the derivative grows in proportion to ω. Instead, ‘expectations’ are computed by predicting states and measurements for the next point in time (through smoothing integration of the cognitive dynamic model). The errors between predicted and measured values are then computed, and the predicted object states are incremented in the direction of the measured features (via ‘Jacobian elements’ [Dickmanns, Wuensche 1999; Dickmanns 2007]). This avoids noise amplification and yields a least-squares fit according to the model; for system elements of higher order, the derivatives are reconstructed automatically by the recursive fit.

- In the two image dimensions, differential changes of edge directions (row 3) can be measured directly and are important elements for interpreting the 3-D shape (curvature) of objects. Shape description in differential terms is more compact than in Cartesian coordinates (e.g. roads described by clothoid elements do not need the integration constants for pose).

- Combining shape and motion interpretation (row 4, yellow rectangle, with column 4) allows spatio-temporal (4-D) reconstruction of n moving objects in parallel [‘central hub’, element (4, 4)]. These objects form the basis for situation assessment and decision making for mission accomplishment (blue rectangle, lower right).

- Taken together, the scene, including the depth dimension that is lost in the perspective projection of a single image, can be perceived over time (motion stereo). Combined with the system's own intentions, this yields the situation to be taken into account for intelligent decision making.

- The mission to be performed determines the total temporal and spatial scales to be taken into account.
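
The predict-and-correct idea from the first bullet can be illustrated with a small sketch. It is not the 4-D approach itself: it uses a standard linear Kalman filter with a constant-velocity model as the simplest recursive estimator, and every name and number in it (`track_position`, `accel_psd`, the 40 ms cycle time, the noise level) is an assumption chosen for illustration. Velocity is never obtained by differentiating the measurements; it is reconstructed by the recursive fit and compared with a finite-difference estimate.

```python
import math
import random


def track_position(measurements, dt, meas_sigma, accel_psd=0.01):
    """Recursively estimate (position, velocity) from noisy position measurements.

    Illustrative constant-velocity Kalman filter; accel_psd is an assumed
    process-noise intensity, not a value taken from the source.
    """
    p, v = measurements[0], 0.0            # initial state guess
    a, b, c = meas_sigma ** 2, 0.0, 10.0   # covariance [[a, b], [b, c]]
    r = meas_sigma ** 2                    # measurement noise variance
    estimates = []
    for z in measurements[1:]:
        # Prediction: integrate the motion model over one cycle.
        p_pred = p + dt * v
        v_pred = v
        a_pred = a + 2 * dt * b + dt * dt * c + accel_psd * dt ** 3 / 3
        b_pred = b + dt * c + accel_psd * dt ** 2 / 2
        c_pred = c + accel_psd * dt
        # Prediction error between measured and expected value.
        residual = z - p_pred
        s = a_pred + r
        k_p, k_v = a_pred / s, b_pred / s  # gains (measurement Jacobian is [1, 0])
        # Correction: move the predicted state toward the measurement.
        p = p_pred + k_p * residual
        v = v_pred + k_v * residual
        a = (1 - k_p) * a_pred
        b = (1 - k_p) * b_pred
        c = c_pred - k_v * b_pred
        estimates.append((p, v))
    return estimates


def finite_difference_velocity(measurements, dt):
    """Velocity by direct differentiation of the measurements (for comparison)."""
    return [(z1 - z0) / dt for z0, z1 in zip(measurements, measurements[1:])]


def rms(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def demo(seed=0, steps=300, dt=0.04, true_velocity=2.0, meas_sigma=0.05):
    """Return (RMS velocity error of the filter, RMS error of differencing)."""
    rng = random.Random(seed)
    measurements = [true_velocity * k * dt + rng.gauss(0.0, meas_sigma)
                    for k in range(steps)]
    filtered = track_position(measurements, dt, meas_sigma)
    differenced = finite_difference_velocity(measurements, dt)
    tail = steps // 2  # skip the start-up transient of the filter
    rms_filter = rms([v - true_velocity for _, v in filtered[tail:]])
    rms_diff = rms([v - true_velocity for v in differenced[tail:]])
    return rms_filter, rms_diff


if __name__ == "__main__":
    rms_filter, rms_diff = demo()
    print(f"recursive fit: {rms_filter:.3f}  finite difference: {rms_diff:.3f}")
```

Under these assumptions, differencing divides the measurement noise by the short cycle time and so inflates it, while the recursive fit recovers the velocity with a much smaller error once the start-up transient has decayed.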

References

Dickmanns ED (1997). Vehicles Capable of Dynamic Vision. Proc. 15th International Joint Conference on Artificial Intelligence (IJCAI-97), Vol. 2, Nagoya, Japan, pp 1577-1592.

Dickmanns ED, Wuensche HJ (1999). Dynamic Vision for Perception and Control of Motion. In: B. Jaehne, H. Haußecker and P. Geißler (eds.) Handbook of Computer Vision and Applications, Vol. 3, Academic Press, pp 569-620.

Dickmanns ED (2007). Dynamic Vision for Perception and Control of Motion. Springer-Verlag, London.