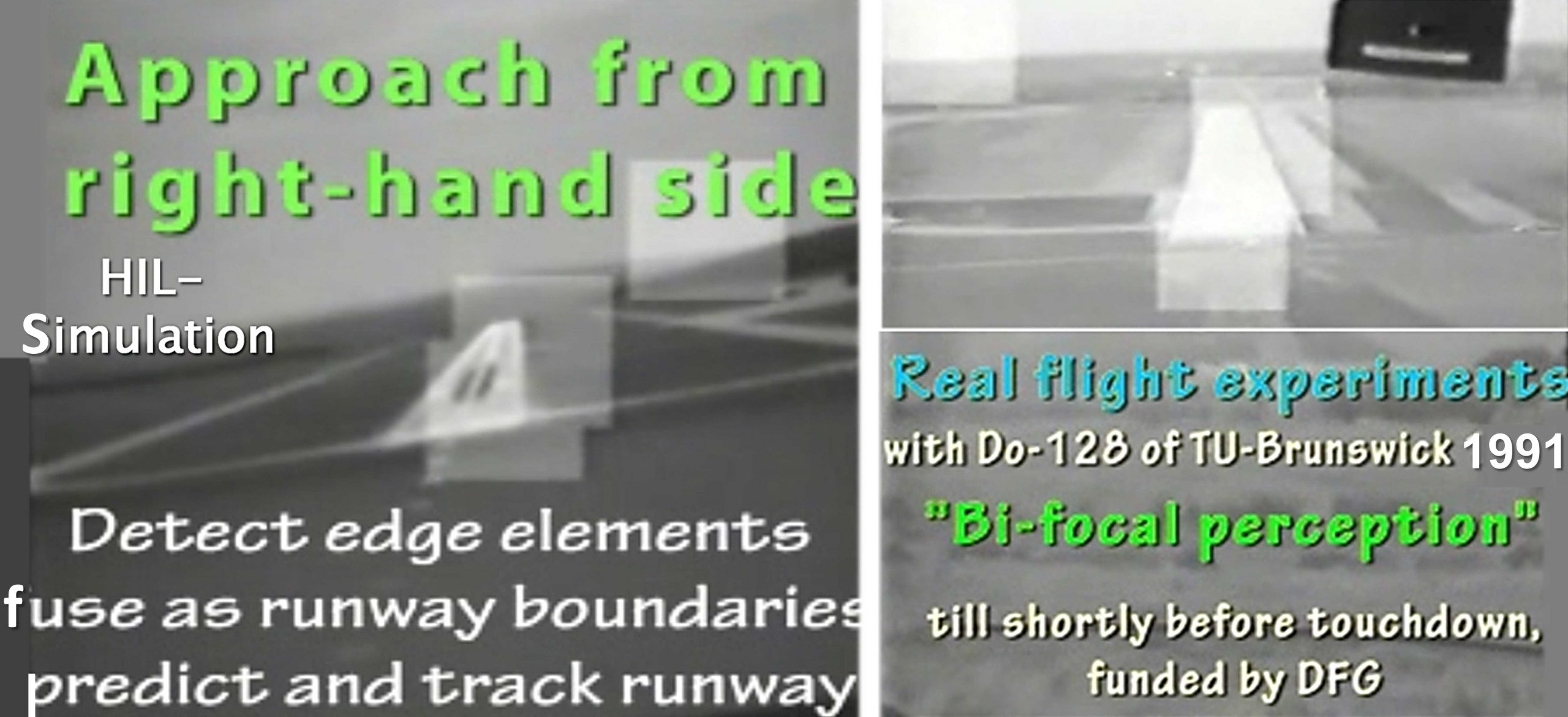

Since this task is very demanding even for trained pilots, it seemed very unlikely in the early 1980s that external funding could be obtained for developing a machine vision system capable of handling it autonomously. Therefore, the first round of basic research was done on internal funding, using the Hardware-In-the-Loop (HIL) simulation facility described in H.4.2 HIL-Sim AirVehGuidance (1982 – 1987).

After solid foundations based on the 4-D approach to dynamic machine vision, including video demonstrations, had been achieved, the second round of development towards practically useful systems was funded by the German Science Foundation (DFG, 1987 – 1992). For flight guidance in the landing approach, there is no way around the full six-degrees-of-freedom formulation with second-order dynamics in each of them; this means that 12 state variables are needed to describe the process. Aircraft have four control inputs: throttle, elevator, aileron and rudder; the first two control motion in the plane of symmetry (two translations and one rotation). Ailerons are designed to generate rotation around the longitudinal axis, but usually they also generate some motion around the vertical axis. Similarly, the rudder is designed to generate torque around the vertical axis, but it also has some effect on rotation around the longitudinal axis and on lateral acceleration. In the landing approach, several kinds of flaps may additionally be set to generate more lift and to allow a slower touchdown speed. Control laws for automatic landing with microwave systems, or with GPS signals combined with inertial sensor data, are well known. By combining visual feedback, as exploited by human pilots, with conventional control techniques for automatic landing, autonomous landing by machine vision was considered to be within reach. Using the knowledge captured in dynamic models for simulation and flight analysis is mandatory for success; the 4-D approach integrates all these components right from the beginning.
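
To make the state count concrete, the sketch below lays out a hypothetical 12-element state vector (one position-like and one rate-like state per degree of freedom) driven by the four control inputs. It is a deliberately crude assumption: each degree of freedom is treated as a double integrator, and the control-effectiveness matrix `B` is made up; only its pattern of off-diagonal entries mimics the cross-couplings described above (aileron also producing some yaw, rudder also producing some roll and lateral acceleration). Aerodynamics, gravity and coordinate rotations are ignored, so this is not the model used in the original work.

```python
# Hypothetical 12-state sketch: 6 degrees of freedom x second-order dynamics.
DOF = ["x", "y", "z", "roll", "pitch", "yaw"]          # position-like states
CONTROLS = ["throttle", "elevator", "aileron", "rudder"]

# Made-up control effectiveness (rows: DOF accelerations, cols: controls).
# Only the pattern reflects the text: throttle/elevator act in the plane of
# symmetry (x, z, pitch); aileron mainly rolls with some yaw; rudder mainly
# yaws with some roll and some lateral acceleration.
B = [
    # thr   elev  ail   rud
    [1.0,  0.0,  0.0,  0.0],   # x     (longitudinal)
    [0.0,  0.0,  0.0,  0.2],   # y     (lateral)
    [0.1,  0.3,  0.0,  0.0],   # z     (vertical)
    [0.0,  0.0,  1.0,  0.1],   # roll
    [0.0,  1.0,  0.0,  0.0],   # pitch
    [0.0,  0.0,  0.1,  1.0],   # yaw
]


def step(state, u, dt):
    """Advance the state [6 positions/angles, 6 rates] by dt.

    Each DOF is a double integrator (semi-implicit Euler); u holds the
    four control deflections in the order given by CONTROLS.
    """
    assert len(state) == 2 * len(DOF) and len(u) == len(CONTROLS)
    q, v = state[:6], state[6:]
    acc = [sum(B[i][j] * u[j] for j in range(len(CONTROLS))) for i in range(6)]
    v_new = [v[i] + acc[i] * dt for i in range(6)]
    q_new = [q[i] + v_new[i] * dt for i in range(6)]
    return q_new + v_new
```

Calling `step([0.0] * 12, [0.0, 0.0, 1.0, 0.0], 0.1)` with a pure aileron input makes the roll rate grow most, gives the yaw rate a smaller share through the coupling term, and leaves the pitch rate at zero.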

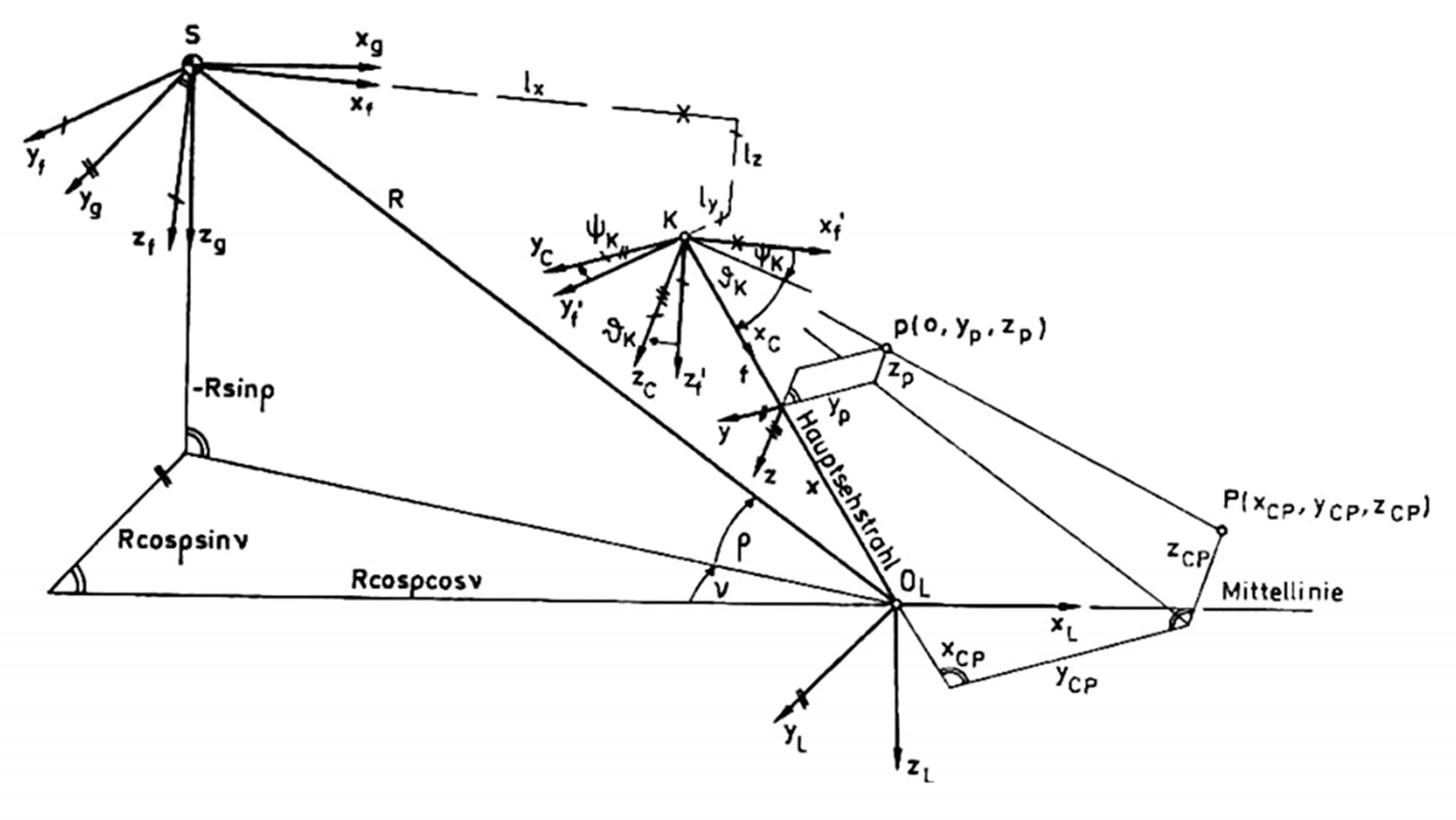

G. Eberl developed the first formulation of the 4-D approach for aircraft landing by computer vision (1982 – 1987), based on the standardized terminology for flight applications [LN 9300]:

Easily scalable coordinates, including transformations by 4×4 Homogeneous coordinate Transformation Matrices (HTM) developed in the field of computer graphics, were used right from the beginning.
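
A minimal sketch of how such 4×4 HTMs chain together, assuming flight-mechanics-style axes (x forward, y right, z down), a camera aligned with the body axes at the center of gravity, and a simple pinhole projection with focal length `f` along +x. The helper names and the numbers are hypothetical; only a heading rotation is shown, but pitch, roll and a gaze-platform transform would simply be further factors in the same matrix product.

```python
import math


def mat_mul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    """HTM that shifts points by (tx, ty, tz)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def heading_rotation(psi):
    """HTM expressing runway-frame vectors in axes yawed by psi (rad)."""
    c, s = math.cos(psi), math.sin(psi)
    return [[c, s, 0.0, 0.0],
            [-s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    """Apply HTM T to a 3-D point via homogeneous coordinates (x, y, z, 1)."""
    h = [p[0], p[1], p[2], 1.0]
    out = [sum(T[i][k] * h[k] for k in range(4)) for i in range(4)]
    return [out[0] / out[3], out[1] / out[3], out[2] / out[3]]


def project(p_cam, f=1.0):
    """Pinhole projection with the optical axis along +x (assumed convention)."""
    x, y, z = p_cam
    return f * y / x, f * z / x


# Aircraft 1000 m before the threshold, 50 m above ground (z down), heading 0.
pos = (-1000.0, 0.0, -50.0)
# Shift the runway origin to the aircraft first, then rotate into aircraft axes.
runway_to_cam = mat_mul(heading_rotation(0.0),
                        translation(-pos[0], -pos[1], -pos[2]))
corner = transform_point(runway_to_cam, (0.0, 15.0, 0.0))  # a threshold corner
print(corner, project(corner))
```

In this example the threshold corner ends up 1000 m ahead, 15 m to the right and 50 m below the camera, so it projects slightly right of and below the image center. Because every step is a 4×4 matrix, adding a further frame only means multiplying one more matrix into the chain.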

HIL simulation was a big help in setting up and testing the basic closed-loop vision system with real edge-feature extraction (without inertial sensing), running on the hardware described in H.1.1 FirstGenerationBVVx.

A gaze-controllable (active) camera fixated the runway, counteracting aircraft motion in pitch and yaw (see upper part of figure).
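
How a pan/tilt platform can keep the runway fixated is sketched below under simple assumptions: body axes x forward, y right, z down; the target point already known in level, heading-aligned coordinates; and an ideal platform that can realize any pan and tilt angle. The function names are hypothetical. The usage shows the counteracting effect: when the aircraft pitches nose-up by θ, the tilt-down angle needed to keep a target in the plane of symmetry centered grows by exactly θ.

```python
import math


def pitch_to_body(p_level, theta):
    """Express a point given in level, heading-aligned axes (x fwd, y right,
    z down) in body axes after a nose-up pitch angle theta (rad)."""
    x, y, z = p_level
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * z, y, s * x + c * z)


def gaze_angles(p_body):
    """Pan (positive right) and tilt-down angles (rad) that put p_body
    on the optical axis of a pan-then-tilt platform."""
    x, y, z = p_body
    pan = math.atan2(y, x)
    tilt_down = math.atan2(z, math.hypot(x, y))
    return pan, tilt_down


aim_point = (1000.0, 0.0, 50.0)   # runway aim point, 1 km ahead, 50 m below
_, before = gaze_angles(aim_point)
_, after = gaze_angles(pitch_to_body(aim_point, 0.1))
print(after - before)             # extra tilt-down needed after a 0.1 rad pitch-up
```

A real gaze controller would also have to cope with platform dynamics and measurement delays; the sketch only shows the geometry of the compensation.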

The block diagram (left) shows the very simple beginnings in 1982, with four evaluation windows placed on the edges of the landing strip and on the horizon.
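
What happens inside one such evaluation window can be illustrated with a generic gradient-based edge locator: gray values are averaged along one direction to suppress noise, the strongest intensity change across the other direction is found, and a parabola through the three gradient magnitudes around the peak yields a sub-pixel position. This assumes the edge runs roughly along the window's columns; the function name and the method are illustrative and do not reproduce the feature extraction of the original BVV hardware.

```python
def edge_position(window):
    """Sub-pixel column of the strongest edge in a small gray-value window.

    window: list of rows (lists of gray values), at least 3 columns wide.
    Assumes the edge runs roughly along the columns (hypothetical helper).
    """
    rows, cols = len(window), len(window[0])
    # Average each column over all rows to reduce noise.
    profile = [sum(r[j] for r in window) / rows for j in range(cols)]
    # Central-difference gradient magnitude (borders left at zero).
    grad = [0.0] * cols
    for j in range(1, cols - 1):
        grad[j] = abs(profile[j + 1] - profile[j - 1])
    # Strongest response; ties resolve to the first (leftmost) maximum.
    k = max(range(1, cols - 1), key=lambda j: grad[j])
    # Parabolic interpolation through the peak and its two neighbors.
    a, b, c = grad[k - 1], grad[k], grad[k + 1]
    denom = a - 2.0 * b + c
    offset = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return k + offset


window = [[10, 10, 10, 10, 50, 50, 50, 50]] * 3   # dark-to-bright step
print(edge_position(window))
```

For the step between columns 3 and 4 in the usage example, the estimate falls halfway between them, which is where the gray-value transition actually lies.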

A solution became possible only with the ‘4-D approach’, which introduced physically correct spatio-temporal models.
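
The benefit of recursive estimation with a temporal model, on which the 4-D approach builds, can be illustrated with a minimal linear Kalman filter for a single feature coordinate. This is a generic textbook sketch, not the estimator used by Eberl or Dickmanns: the constant-rate model, the noise values and the function name `kf_step` are assumptions. It shows how a dynamic model lets the filter predict the next measurement and infer a quantity (here the rate) that is never measured directly.

```python
def kf_step(x, P, z, dt, q, r):
    """One predict/correct cycle of a linear Kalman filter.

    State x = [offset, rate] follows a constant-rate model; z measures the
    offset only (e.g. a feature position). P is the 2x2 covariance, q the
    process-noise variance added to the rate, r the measurement-noise variance.
    """
    # Predict with F = [[1, dt], [0, 1]]: x' = F x, P' = F P F^T + diag(0, q).
    xp = [x[0] + dt * x[1], x[1]]
    p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1] + q
    # Correct with H = [1, 0]: compare the predicted offset with the measurement.
    innovation = z - xp[0]
    s = p00 + r
    k0, k1 = p00 / s, p10 / s
    x_new = [xp[0] + k0 * innovation, xp[1] + k1 * innovation]
    P_new = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x_new, P_new


# Usage: a feature drifting at 2 units/s, observed without noise every 0.1 s.
x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
dt = 0.1
for k in range(1, 51):
    x, P = kf_step(x, P, z=2.0 * k * dt, dt=dt, q=1e-4, r=1e-2)
print(x)
```

After 50 steps the estimated offset is close to 10 and the estimated rate close to 2, although only offsets were ever measured.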

References

Eberl G (1987). Automatischer Landeanflug durch Rechnersehen. Dissertation, UniBwM / LRT.

Dickmanns ED (1988). Computer Vision for Flight Vehicles. Zeitschrift für Flugwissenschaft und Weltraumforschung (ZFW), Vol. 12 (88), pp 71-79.

First HIL-simulation results for landing approach

- calligraphic grayscale computer image generation;

- initially, a single camera was used;

- all hardware for real-time visual perception was included in the closed simulation loop;

- the figure shows a sequence of snapshots (1a, b, c, 2a, b, c, 3a, b, c) during the approach until touchdown.

This setup could not handle larger simulated disturbances such as crosswinds or gusts.

Combined visual-inertial sensing

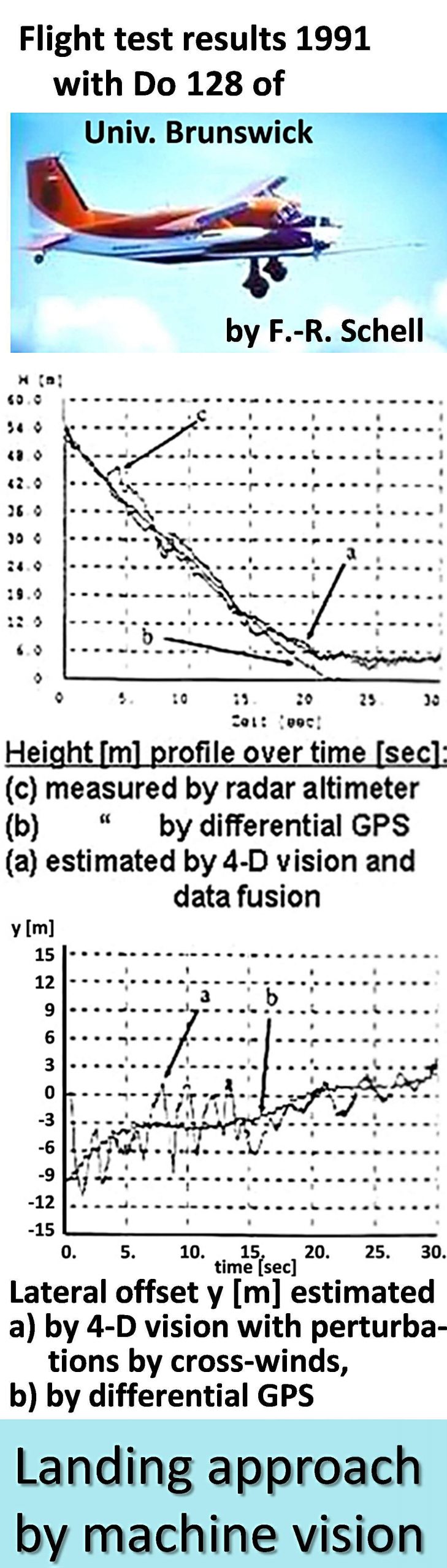

F.-R. Schell (1987 – 1992) introduced real inertial sensors into the simulation loop. This was an essential step, since inertial data have very short delay times (a few milliseconds) compared to visual perception (a few hundred milliseconds), similar to the vestibular/ocular interaction that evolved in vertebrates. He developed visual/inertial data fusion in a hybrid approach. Real inertial sensors allow

- picking up the relatively high-frequency perturbations acting on the vehicle body (and on the cameras, with the motion of their pointing platform added), with their typical noise characteristics, and integrating them into angular pose;

- using the body and camera states thus obtained for visual interpretation (taking the different delay times into account);

- compensating low-frequency inertial drift by visual feedback from feature sets on sufficiently distant objects (for example, the horizon line).
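
A much simplified, single-axis version of this division of labor can be sketched as a fixed-gain complementary filter: gyro rates are integrated at every step, and a visual angle measurement (for instance, horizon inclination) that refers to a moment `delay_steps` in the past is compared with the estimate stored for that moment. The class name, the gains and the way the delay is handled are assumptions chosen for illustration; Schell's hybrid visual/inertial fusion is not reproduced here.

```python
from collections import deque


class DelayedVisualInertialFilter:
    """Hypothetical single-axis angle estimator with delayed visual correction."""

    def __init__(self, delay_steps, gain_angle=0.1, gain_bias=0.01):
        self.angle = 0.0          # current angle estimate (rad)
        self.bias = 0.0           # estimated gyro bias (rad/s)
        self.gain_angle = gain_angle
        self.gain_bias = gain_bias
        # Past estimates; once full, history[0] is the estimate delay_steps ago.
        self.history = deque(maxlen=delay_steps + 1)

    def inertial_update(self, gyro_rate, dt):
        """Fast path: integrate the bias-corrected gyro rate every step."""
        self.angle += (gyro_rate - self.bias) * dt
        self.history.append(self.angle)

    def visual_update(self, delayed_angle):
        """Slow path: compare a delayed visual angle with the stored past estimate."""
        if len(self.history) < self.history.maxlen:
            return  # not enough history yet to align the delayed measurement
        error = delayed_angle - self.history[0]
        correction = self.gain_angle * error
        self.angle += correction
        # Shift stored past estimates too, so the same error is not corrected twice.
        self.history = deque((h + correction for h in self.history),
                             maxlen=self.history.maxlen)
        # A persistent error indicates gyro drift: adjust the bias estimate slowly.
        self.bias -= self.gain_bias * error


# Constant true angle 0.2 rad, gyro bias 0.05 rad/s,
# 10 ms inertial steps and a 200 ms visual delay.
true_rate, gyro_bias = 0.0, 0.05
f = DelayedVisualInertialFilter(delay_steps=20)
for _ in range(20000):
    f.inertial_update(gyro_rate=true_rate + gyro_bias, dt=0.01)
    f.visual_update(delayed_angle=0.2)
print(round(f.angle, 3), round(f.bias, 3))
```

Pure gyro integration would drift by 0.05 rad every second here; with the delayed visual correction, the angle estimate settles near 0.2 rad and the bias estimate near 0.05 rad/s. The small bias gain keeps the slow correction loop well behaved despite the visual delay.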

The hybrid solution was verified not only in simulation, including perturbations by gusts and crosswinds with full control applied until touchdown, but also in real flight experiments. For safety reasons, however, the real flights in 1991 with a Do 128 twin-propeller aircraft of the University of Brunswick were performed without closing the control loop, i.e. with the perception part only, and without final touchdown; these flight experiments were funded by the German Science Foundation (DFG) (see photo and graphs on the left).

Video 1982-92 Aircraft Landing Approach.mp4

Unlike satellite navigation systems such as GPS, which are available as additional devices nowadays, the autonomous sense of vision also allows detecting obstacles on the runway and does not depend on GPS availability [Fürst et al. 1997]. It can also serve as an additional aid for taxiing at airports. In addition, landmark navigation and fixation of objects of special interest (other aircraft in the air or important structures on the ground) may provide valuable inputs for safety and mission performance. These topics were investigated in the framework of helicopter guidance by machine vision (next subsection).

References

LN 9300 (1970). Luftfahrtnorm 9300 zur Flugmechanik. Anhang, Beuth-Vertrieb GmbH, Koeln.

Schell FR (1992). Bordautonomer automatischer Landeanflug aufgrund bildhafter und inertialer Meßdatenauswertung. Dissertation, UniBwM / LRT.

Schell FR (1992). Computer Vision for Autonomous Flight Guidance and Landing. IFAC Symposium Aerospace Control ’92, Ottobrunn.

Schell FR, Dickmanns ED (1992). Autonomous Landing of Airplanes by Dynamic Machine Vision. Proc. IEEE Workshop on Applications of Computer Vision, Palm Springs.

Fürst S, Werner S, Dickmanns D, Dickmanns ED (1997). Landmark navigation and autonomous landing approach with obstacle detection for aircraft. AeroSense ’97, SPIE Proc. Vol. 3088, Orlando FL, April 20-25, pp 94-105.

Fürst S, Werner S, Dickmanns ED (1997). Autonomous Landmark Navigation and Landing Approach with Obstacle Detection for Aircraft. 10th European Aerospace Conference ‘Free Flight’, Amsterdam, NL, pp 36.1 – 36.11.

Fürst S, Werner S, Dickmanns D, Dickmanns ED (1997). Landmark Navigation and Autonomous Landing Approach with Obstacle Detection for Aircraft. AGARD MSP Symp. on System Design Considerations for Unmanned Tactical Aircraft (UTA), Athens, Greece, October 7-9, pp 20-1 – 20-11.