H.4.2a Landing approaches with vector graphics

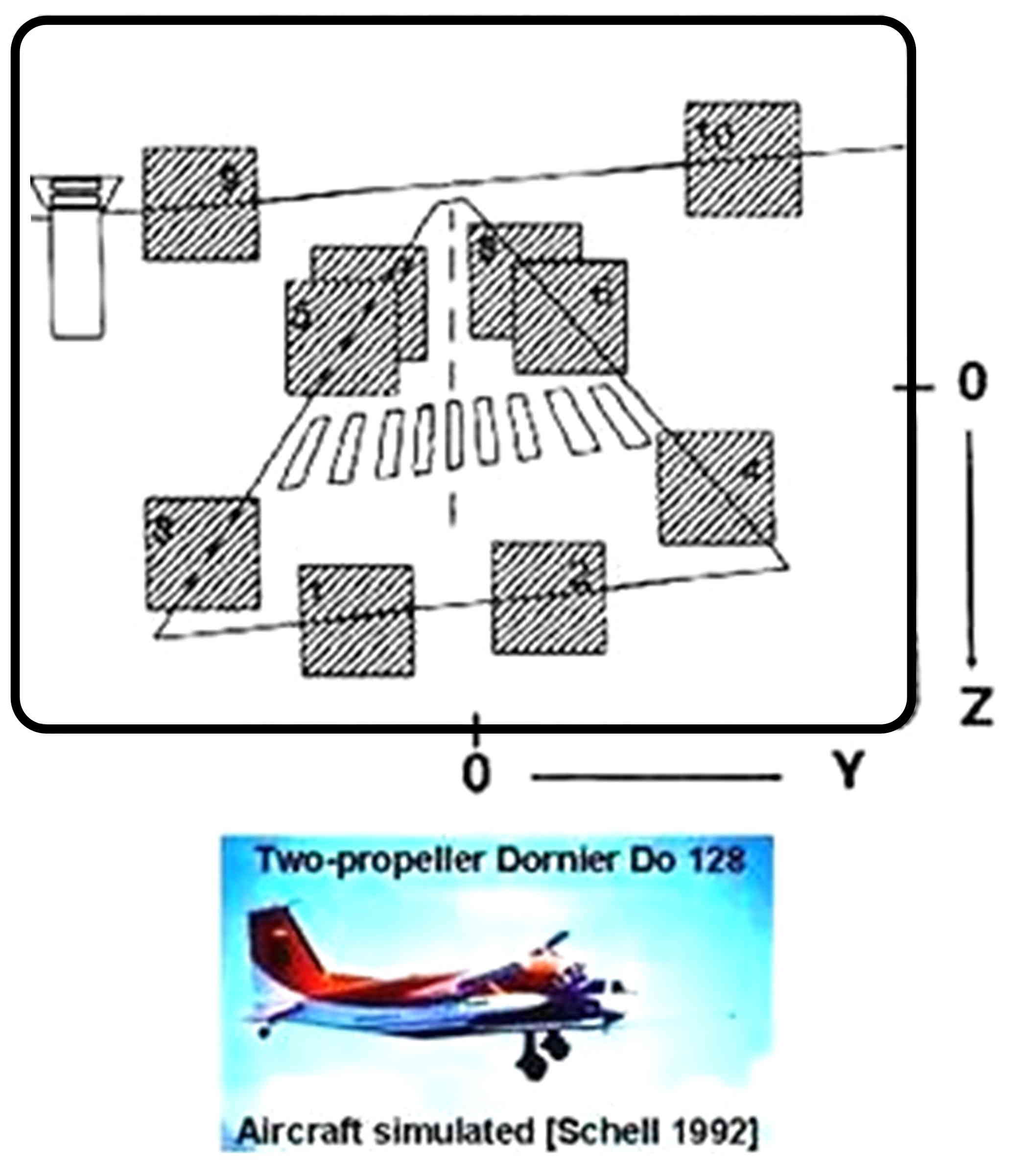

With computing power per microprocessor increasing by about one order of magnitude every 4 to 5 years, image analysis with the BVV2 could be improved considerably towards the end of the 1980s. The figure shows 10 evaluation windows, which allowed each border line of the landing strip to be determined independently, without assuming a strictly rectangular shape in the real world.

- More features on the landing strip could be introduced.

- All of this allowed investigating curved landing approaches with a bifocal camera set (standard and tele-lens) on the gaze control platform.

- Inertial sensors were additionally mounted on the DBS platform, allowing fast reactions to perturbations from crosswinds and gusts without taking visual data into account.
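The idea of determining each border line of the landing strip on its own, from edge positions found in separate evaluation windows, can be illustrated with a small sketch. This is not the BVV2 implementation; it assumes one edge position per window, uses a plain least-squares line fit, and the names `fit_border_line`, `left_border` and `right_border` as well as all pixel values are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (row, column) in image coordinates


def fit_border_line(points: List[Point]) -> Tuple[float, float]:
    """Least-squares fit of column = slope * row + offset to edge points."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two edge points")
    mean_r = sum(r for r, _ in points) / n
    mean_c = sum(c for _, c in points) / n
    var_r = sum((r - mean_r) ** 2 for r, _ in points)
    if var_r == 0.0:
        raise ValueError("edge points must span more than one image row")
    cov = sum((r - mean_r) * (c - mean_c) for r, c in points)
    slope = cov / var_r
    return slope, mean_c - slope * mean_r


# Hypothetical edge positions (row, column), one per evaluation window.
left_border = [(100.0, 310.0), (140.0, 290.0), (180.0, 270.0)]
right_border = [(100.0, 330.0), (140.0, 352.0), (180.0, 374.0)]

# Each border is fitted separately, so no parallel or rectangular
# relation between the two lines is imposed.
left_line = fit_border_line(left_border)
right_line = fit_border_line(right_border)
```

Because the two fits share no parameters, a strip whose borders are not exactly parallel is still described correctly by its two separate lines.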

This improved system was tuned to the twin-propeller aircraft Dornier Do 128 and fully tested in hardware-in-the-loop (HIL) simulation. As a result, real flight experiments could start in 1991 within one week of gaining access to the aircraft in Brunswick.

Video 1982-92 Aircraft Landing Approach.mp4

H.4.2b Landing approaches with Computer Generated Images (CGI), 1992 – 1998

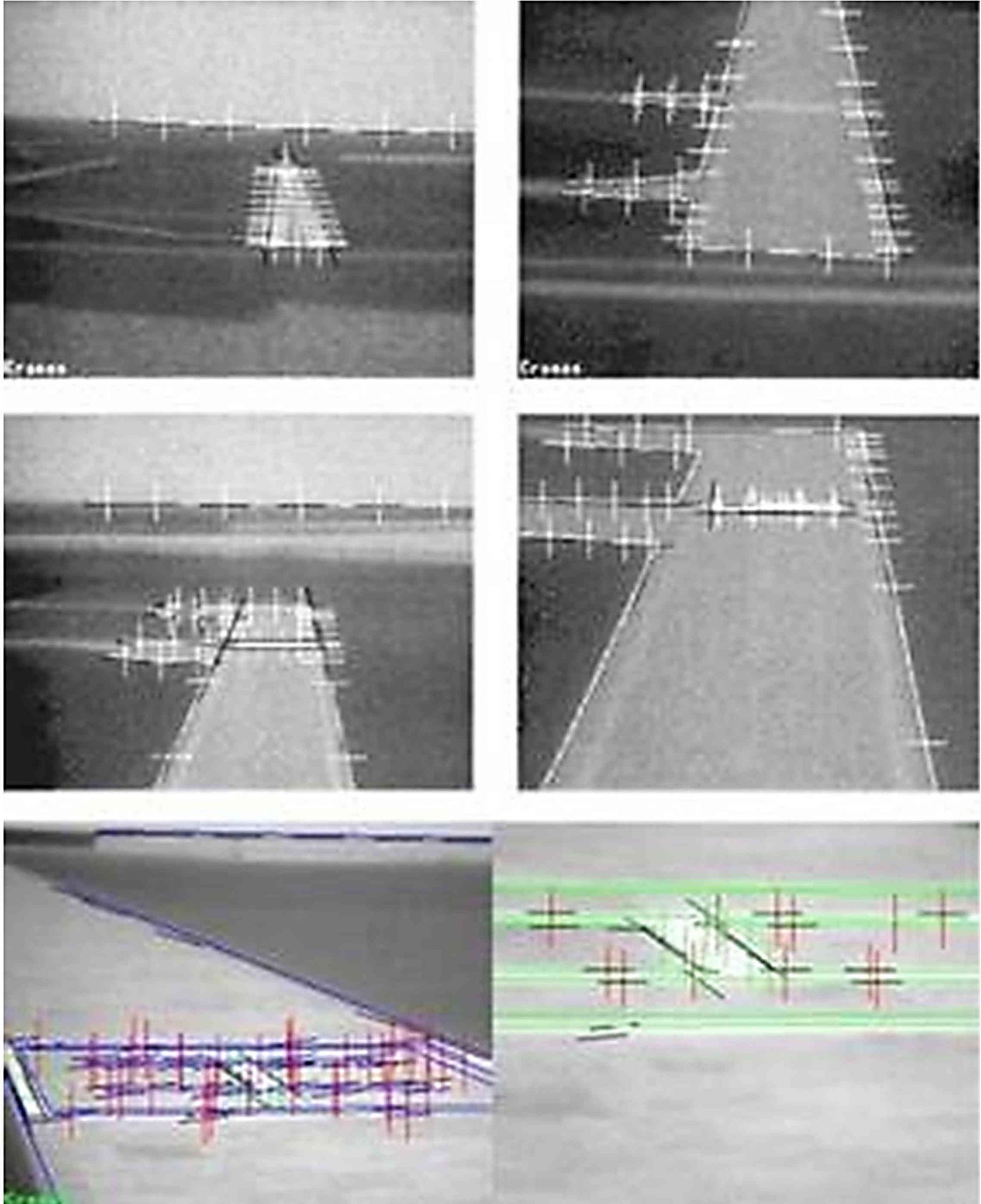

These CGI systems produce much more natural-looking images than vector graphics, so the image evaluation task is much closer to what is needed for real flight scenes.

Landmarks like connecting taxiways can be recognized by edge- and area-based features; rectangular shapes marking the touch-down zone are more easily detected by their white color than by edges.
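To illustrate why white markings lend themselves to detection by color rather than by edges, the following sketch scans one image row for runs of bright pixels. It is only an illustration under stated assumptions: the image is taken as 8-bit grayscale, and the function name `find_bright_runs`, the threshold of 200 and the minimum width of 3 pixels are hypothetical choices, not values from the original system.

```python
from typing import List, Tuple


def find_bright_runs(
    row: List[int], threshold: int = 200, min_width: int = 3
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, of bright pixel runs."""
    runs = []
    start = None
    for i, value in enumerate(row):
        if value >= threshold:
            if start is None:
                start = i  # a bright run begins here
        elif start is not None:
            if i - start >= min_width:
                runs.append((start, i))
            start = None  # run ended, too-short runs are dropped
    # Close a run that reaches the end of the row.
    if start is not None and len(row) - start >= min_width:
        runs.append((start, len(row)))
    return runs


# Hypothetical scan line: dark surface (about 60) with bright markings.
scan_line = [60, 62, 61, 240, 245, 250, 248, 63, 60, 255, 61, 238, 241, 244]
markings = find_bright_runs(scan_line)
```

The single bright pixel at index 9 is rejected by the minimum-width check, which is one simple way to suppress isolated highlights that an edge detector would still report.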

In parallel with the transition on the graphics simulation side, feature extraction was moved to transputer systems.

The increased computing power made it possible to tackle the challenge of obstacle detection on the runway.

For helicopter navigation, road junctions and the capital letter H marking the preferred touch-down point were simulated and visually detected by bifocal vision (see bottom of figure).

Left: Imaged with standard lens; right: imaged with tele-lens; search paths (red) and extracted edge features (black) are superimposed.

Video – HelicopterMissionBsHIL-Sim 1997
