1977

Formulation of the long-term research goal ‘machine vision’ at UniBw Munich / LRT; development of a concept for the ‘Hardware-In-the-Loop’ (HIL) simulation facility for machine vision. Start of procurement of components for the new building.

1978

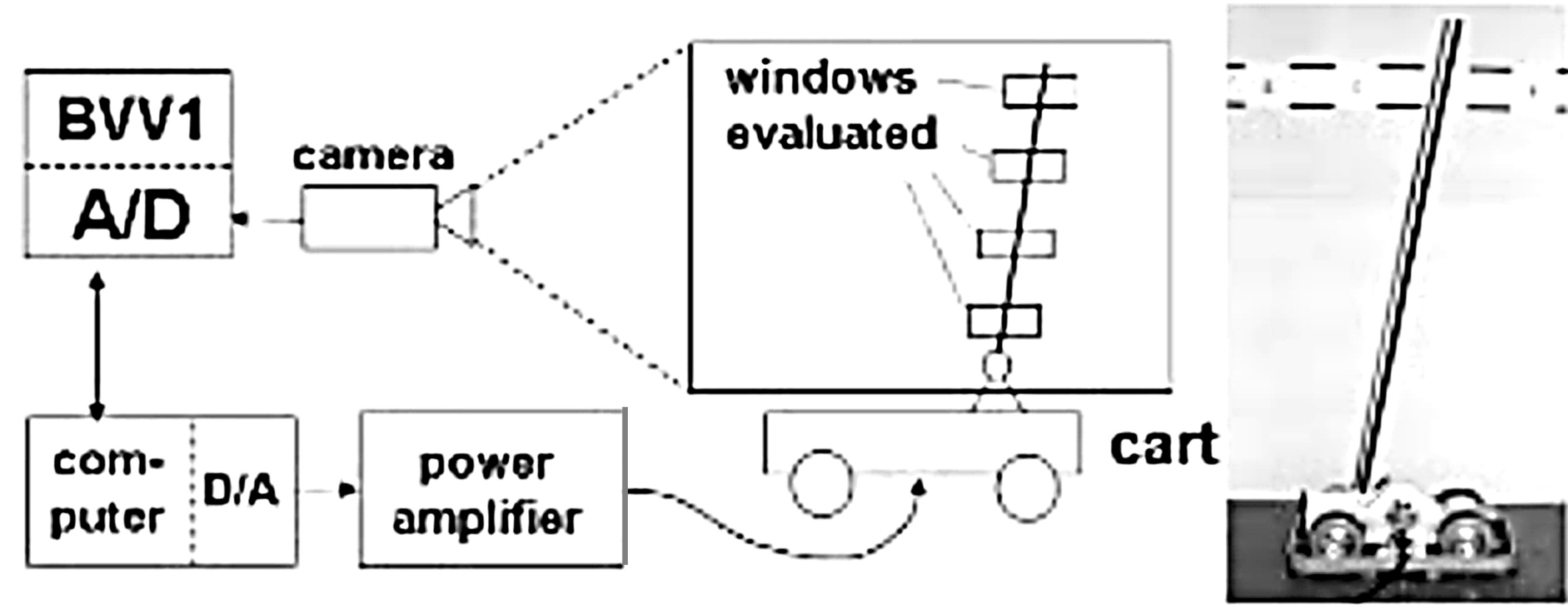

- Development of the window concept for real-time image sequence processing and understanding. Screening of the industrial market for a realization yielded a negative result. Colleague V. Graefe was convinced to participate: he created a custom-designed system based on industrial microprocessors, the 8-bit system BVV1 with Intel 8085.

- Selection of the experimental system ‘pole balancing’ (Stab-Wagen-System SWS) as the initial application of computer vision based on edge features (see cover top left). The Luenberger observer was chosen as the method for processing measurement data from the imaging process by perspective projection [3, D1].

1979

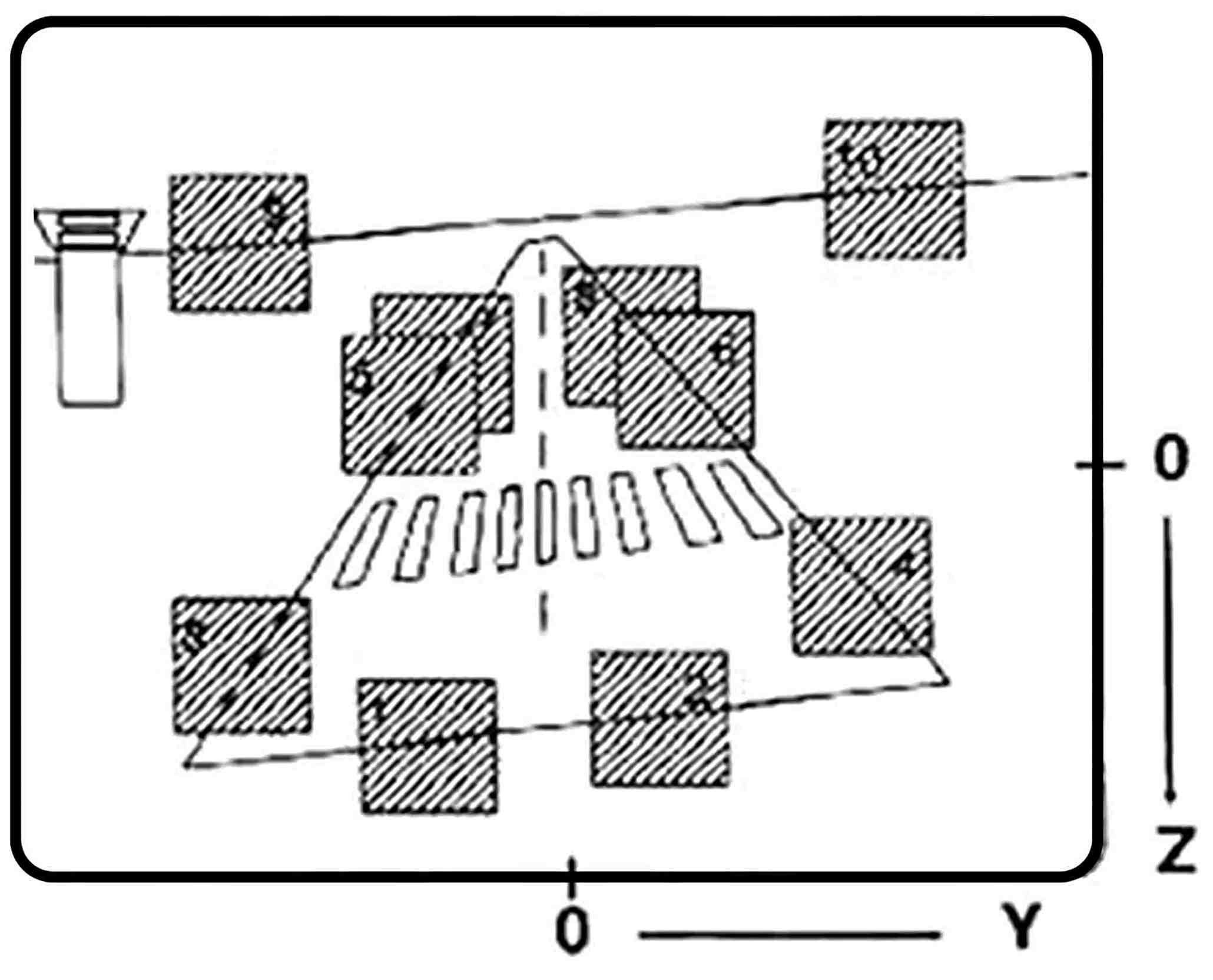

- Concept of the Satellite-Model-Plant with an ‘air-cushion vehicle’ hovering on a horizontal plate (of ~ 2 x 3 m) as second step towards machine vision based on corner features (see cover top right). [1 pdf, 59]

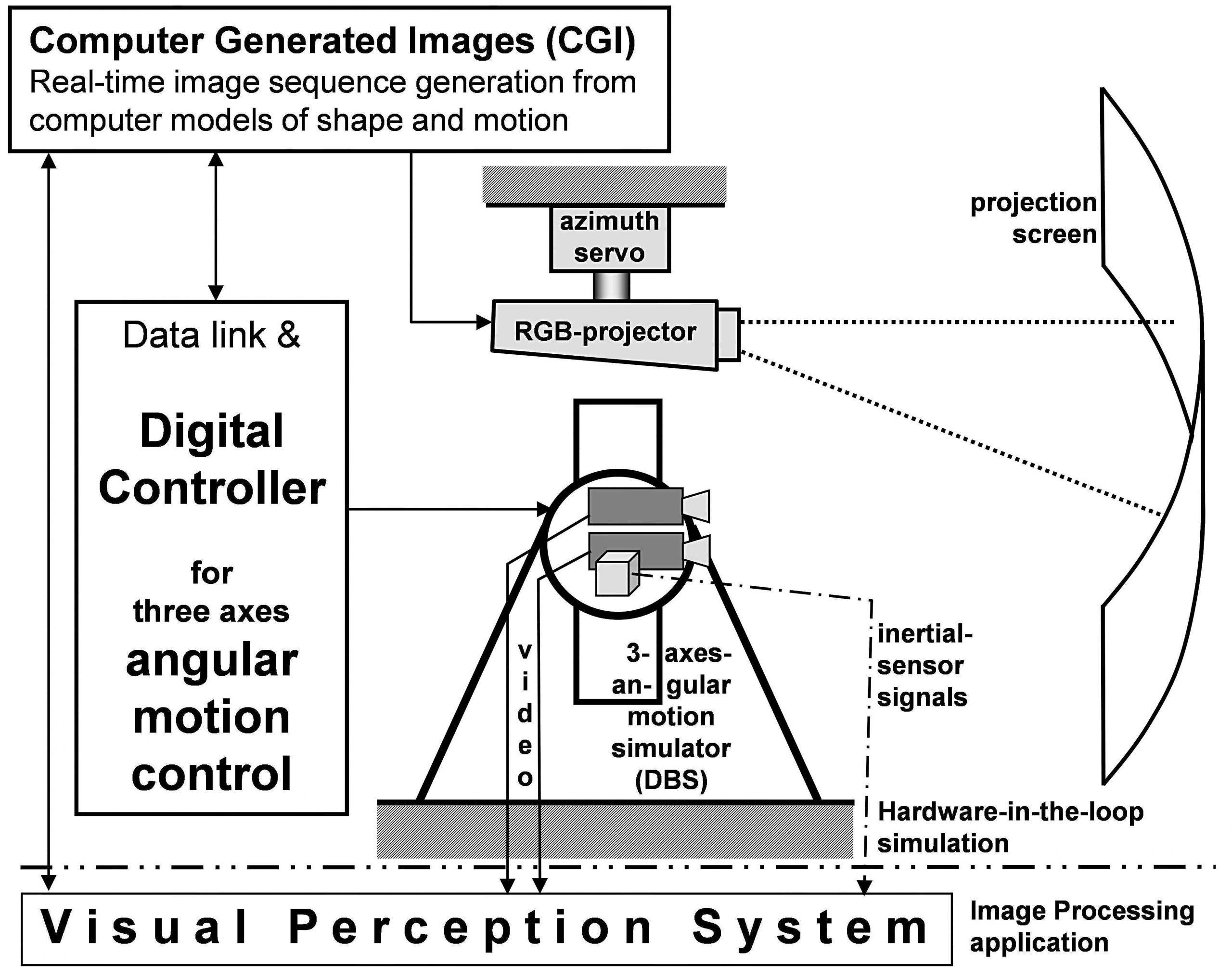

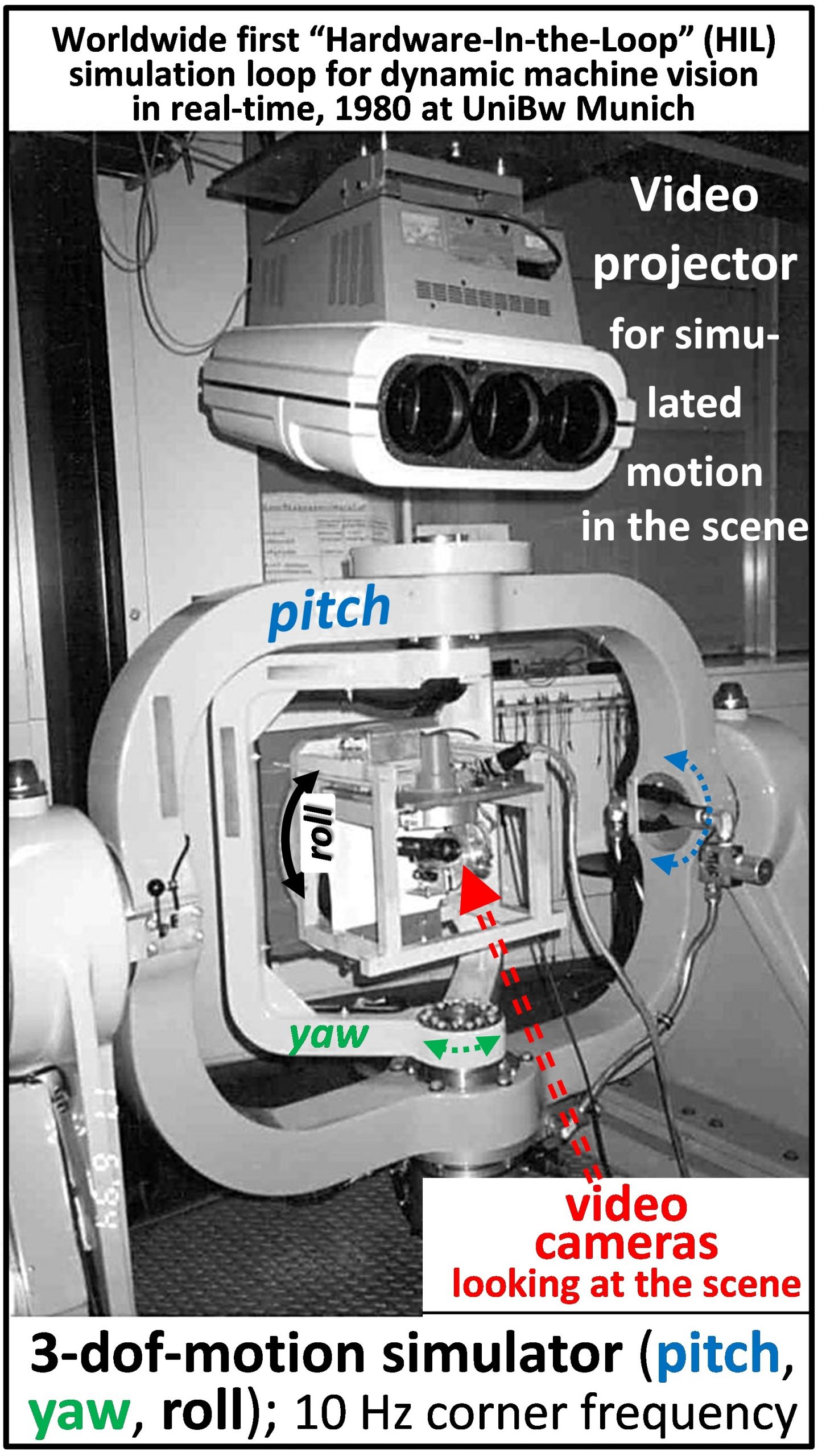

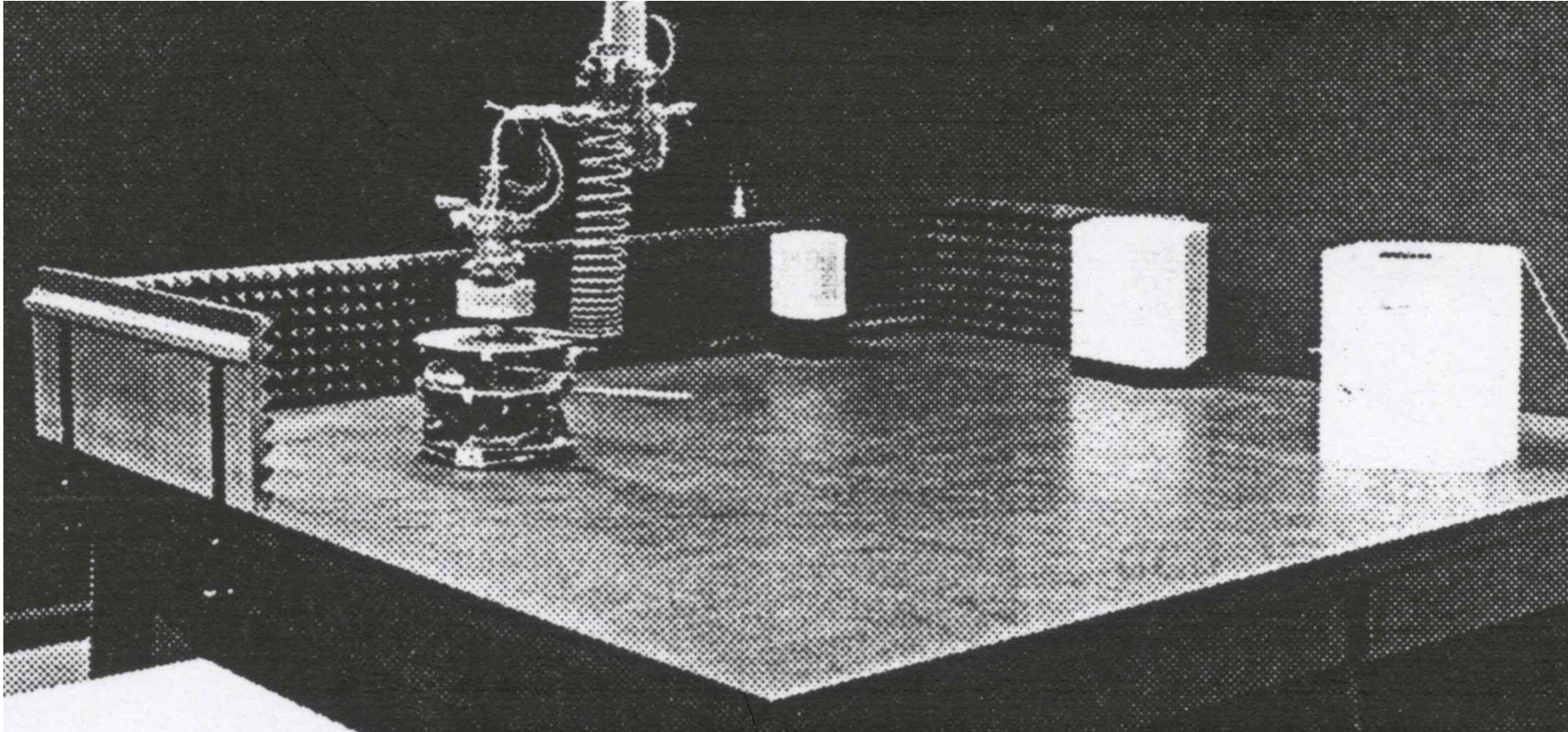

- Realization of the “Hardware-in-the-Loop” (HIL) simulation facility with

  - three-axes motion simulator for instruments and cameras,

  - calligraphic vision simulation including projection onto a cylindrical screen,

  - hybrid computer for real-time simulation of multi-body motion.

1980

Definition of a work plan towards real time machine vision [1 pdf].

1982

- First results with real-world components ‘BVV1 and pole balancing’ presented at external conferences: 1. Karlsruhe, and 2. NATO Advanced Study Institute (ASI), Braunlage [3, 12 Excerpts pdf , 13].

- First dissertation treating visual road vehicle guidance with HIL-simulation [D1 Kurzfassg].

- First external funding (BMFT Information – Technology) of two researchers in the field of ‘machine vision’. This allowed allocating money from the basic funds of the Institute to

  - start the investigation of aircraft landing approach by machine vision [D2 Kurzfassung];

  - initiate the ‘satellite model plant’ with air-cushion vehicle (cover right) [59, D3].

1984

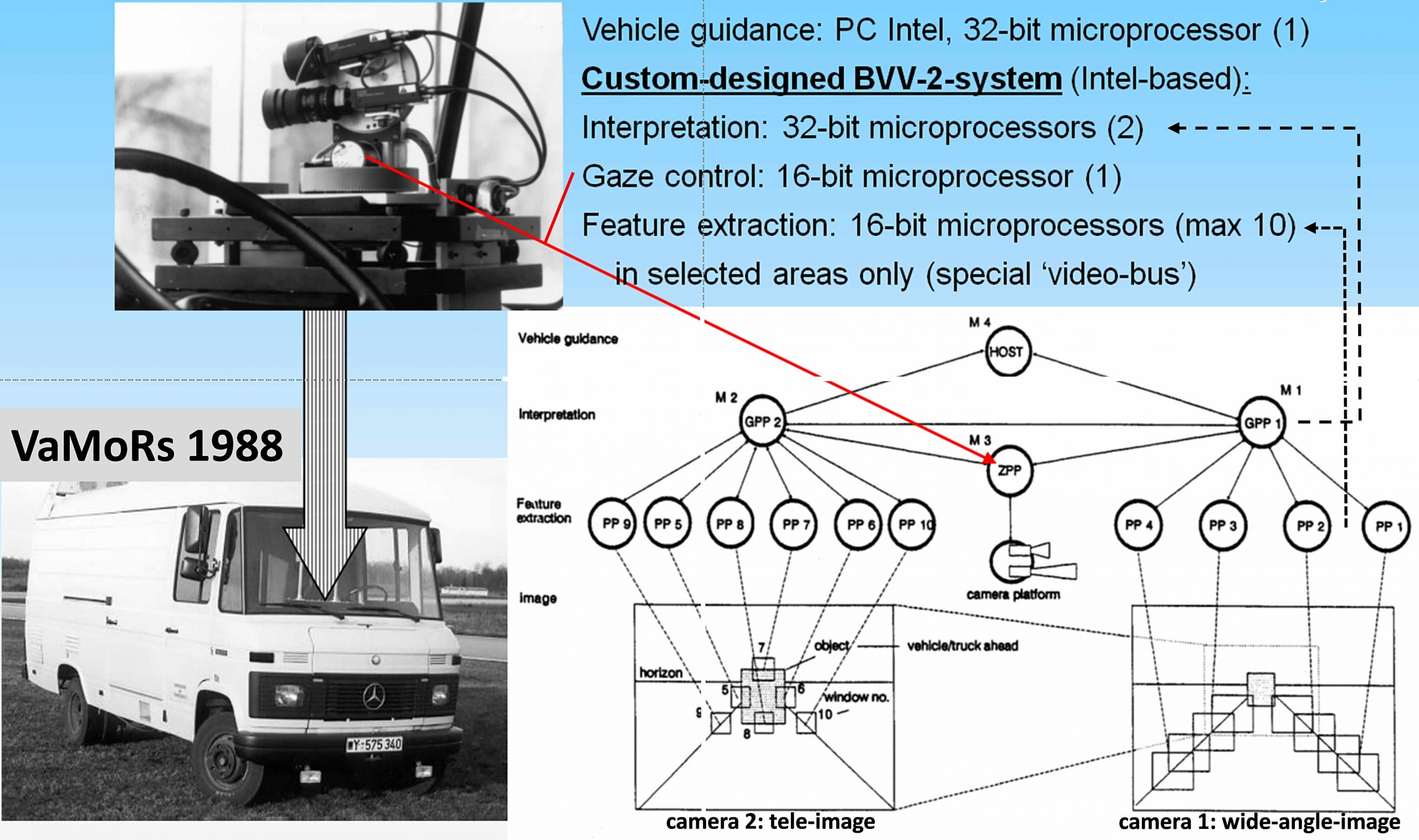

Positive results in visual road vehicle guidance with HIL-simulation led to the purchase of a 5-ton van Mercedes D-508, to be equipped as the test vehicle for autonomous mobility and computer vision ‘VaMoRs’ with a 220 V electrical power generator, actuators for steering, throttle and brakes, as well as a standard twin industrial 19-inch electronics rack.

1986

- First publications of results in visual guidance of road vehicles at higher speeds using differential-geometry-curvature models for road representation [4], and for simulated satellite rendezvous and docking [59].

- First presentation of computer vision in road vehicles, to an international audience from the fields of ‘automotive engineering’ and ‘human – machine interface’ [5 pdf].

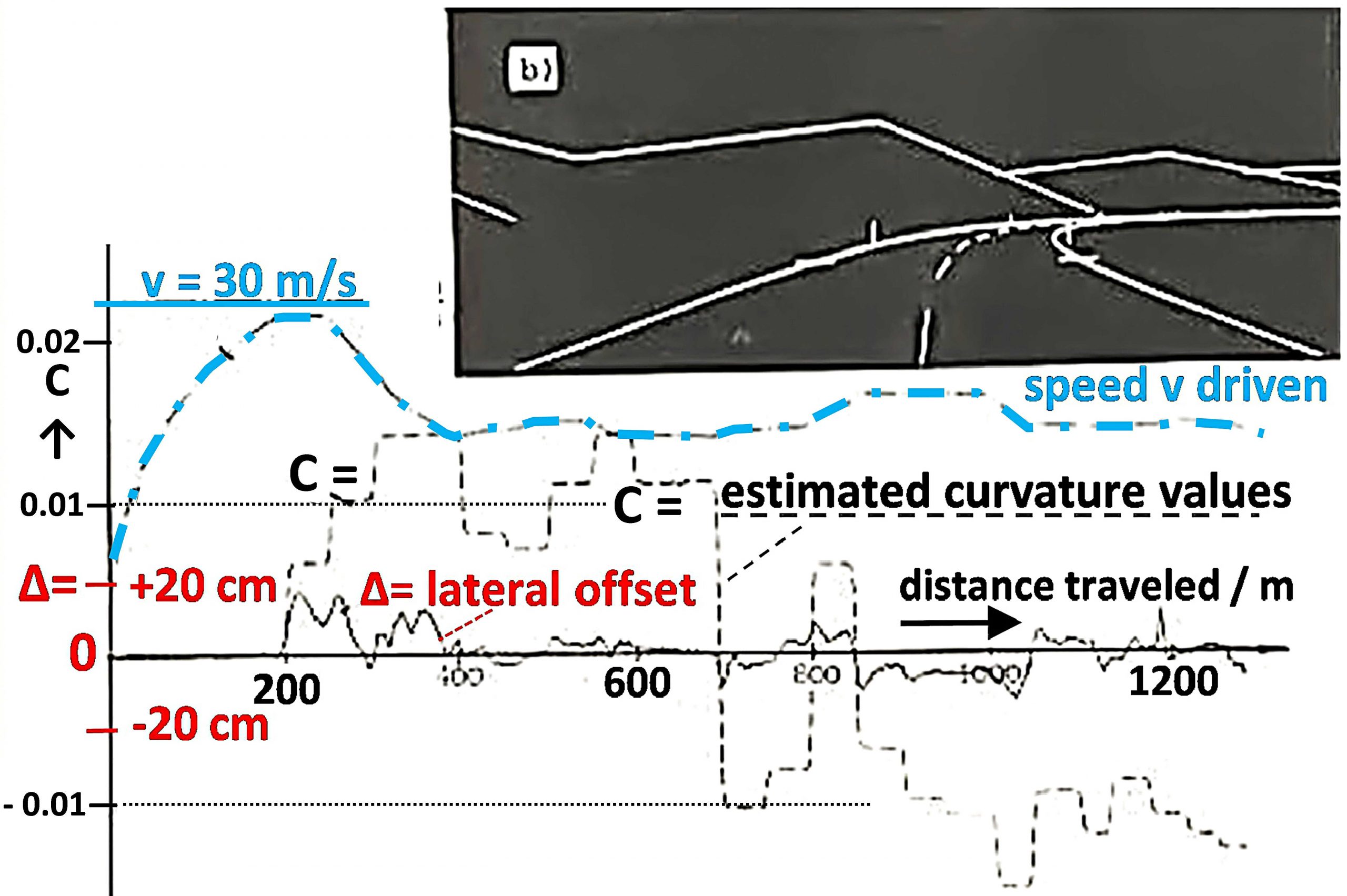

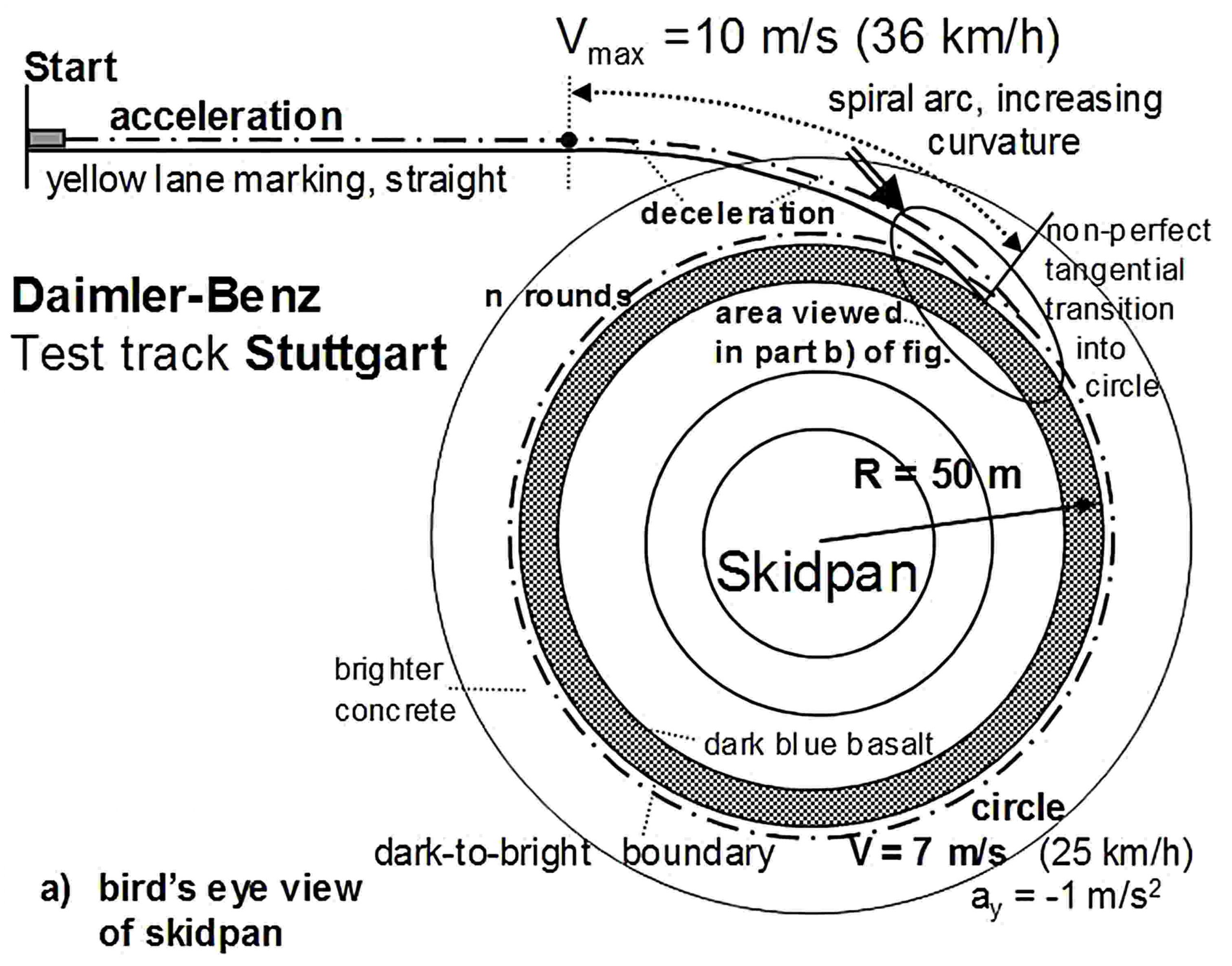

- Demo of VaMoRs in skid-pan of Daimler-Benz AG (DBAG), Stuttgart: Vmax = 10 m/s, lateral guidance by vision; longitudinal control by lateral acceleration [D4 Kurzfassg; 79 Content, page 214].

1987

Year of several breakthroughs (see also [88a]) in real-time vision for guidance of (road) vehicles

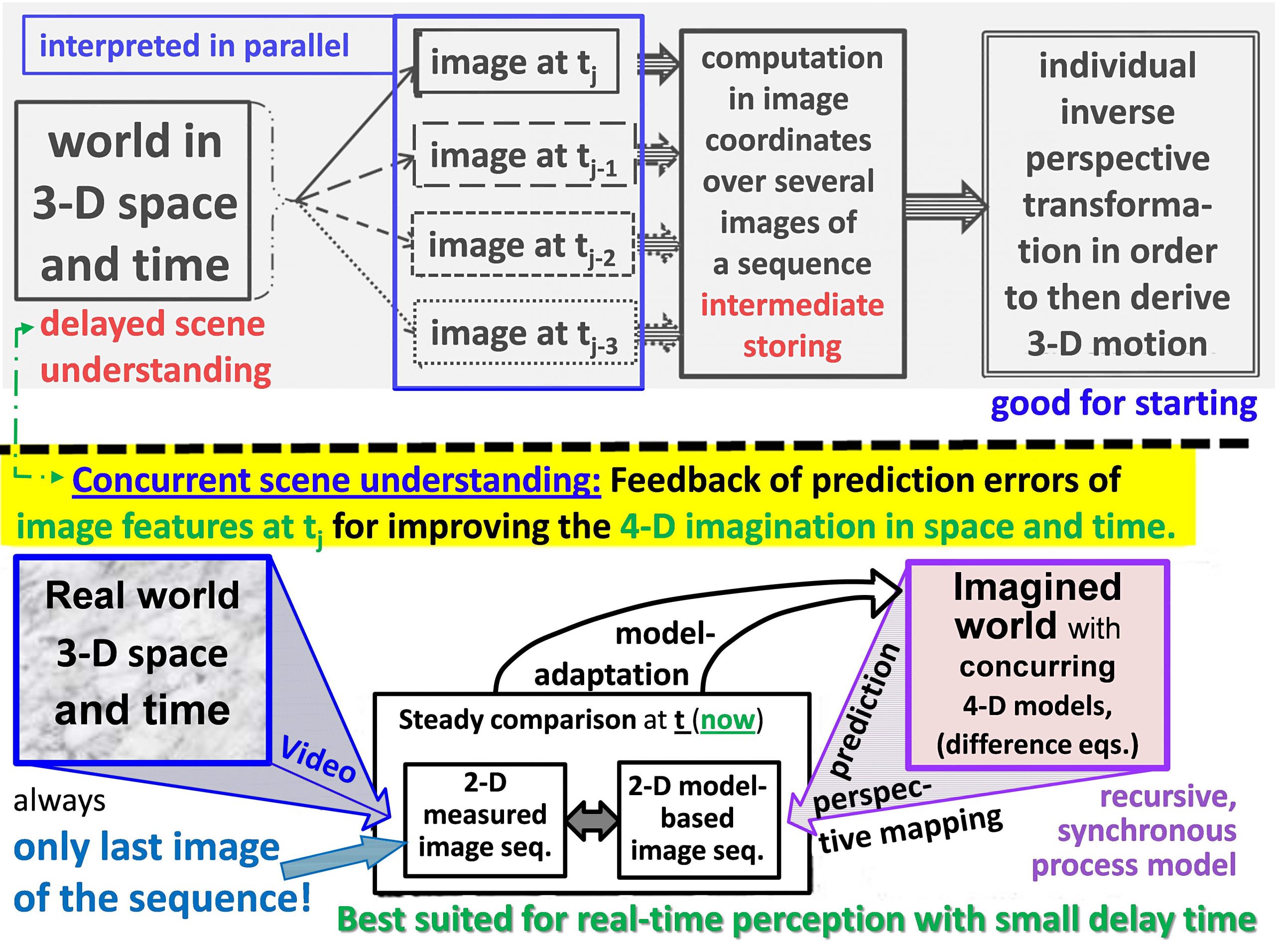

Formulation of the 4-D approach with spatiotemporal world models: The objects to be perceived are those in the real outside world; sensor signals are used to install and improve an internal representation (model) in the perceiving agent (dubbed ‘subject’ here, which may be either biological or robotic). [4; 7 pdf]

Video Autonomous driving in rain

Perception is achieved by feedback of prediction errors for objects in the vicinity hypothesized for the point ‘Here & Now’. Subjects generate the internal representations according to a fusion of both sensor signals received (as well as features derived therefrom) and generic (parameterized) models available in their knowledge base [7, 8 pdf, 9].

Hypotheses are accepted as perceptions of real-world objects (and of course other subjects) if the sum of all quadratic prediction errors over several video frames remains small. This approach allows dealing with the last video image only (no storage of images required!); the information from all previous images is captured in the best estimated state variables and parameters of the hypotheses accepted. This was a tremendous practical advantage in the 1980s [12 Excerpts pdf].
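The acceptance rule described above can be illustrated with a minimal sketch. It is not the original implementation: it assumes a 1-D constant-velocity object hypothesis, a fixed-gain predictor-corrector as a simple stand-in for the recursive estimator, and a hypothetical acceptance threshold. The names `track_hypothesis`, `gain_pos`, `gain_vel` and `threshold` are illustrative assumptions; the cycle time of 0.04 s corresponds to a 25 Hz evaluation rate.

```python
def track_hypothesis(measurements, dt=0.04, gain_pos=0.5, gain_vel=2.0,
                     threshold=0.1):
    """Test a 1-D constant-velocity hypothesis against a measurement sequence.

    Only the newest measurement is used in each cycle; everything learned
    from earlier frames lives in the state estimate (pos, vel).
    All gains and the threshold are illustrative assumptions.
    """
    pos, vel = measurements[0], 0.0   # initial state of the hypothesis
    sum_sq_err = 0.0                  # accumulated squared prediction error

    for z in measurements[1:]:
        # 1. Predict the state for 'Here & Now' from the dynamic model.
        pos_pred = pos + vel * dt
        # 2. Compare the prediction with the newest measurement.
        err = z - pos_pred
        sum_sq_err += err * err
        # 3. Feed the prediction error back to improve the state estimate.
        pos = pos_pred + gain_pos * err
        vel = vel + gain_vel * err

    # The hypothesis is accepted if the summed squared error stays small.
    accepted = sum_sq_err < threshold
    return pos, vel, sum_sq_err, accepted


if __name__ == "__main__":
    # Object moving at 1 unit/s, sampled at 25 Hz: fits the hypothesis.
    consistent = [1.0 * k * 0.04 for k in range(100)]
    print(track_hypothesis(consistent))
    # Measurements jumping back and forth: do not fit the hypothesis.
    inconsistent = [(-1.0) ** k for k in range(100)]
    print(track_hypothesis(inconsistent))
```

Note that the loop never stores past measurements, which mirrors the point made above that no image storage is required; a real system would estimate many state variables per object and weight the errors by their expected variances.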

1987

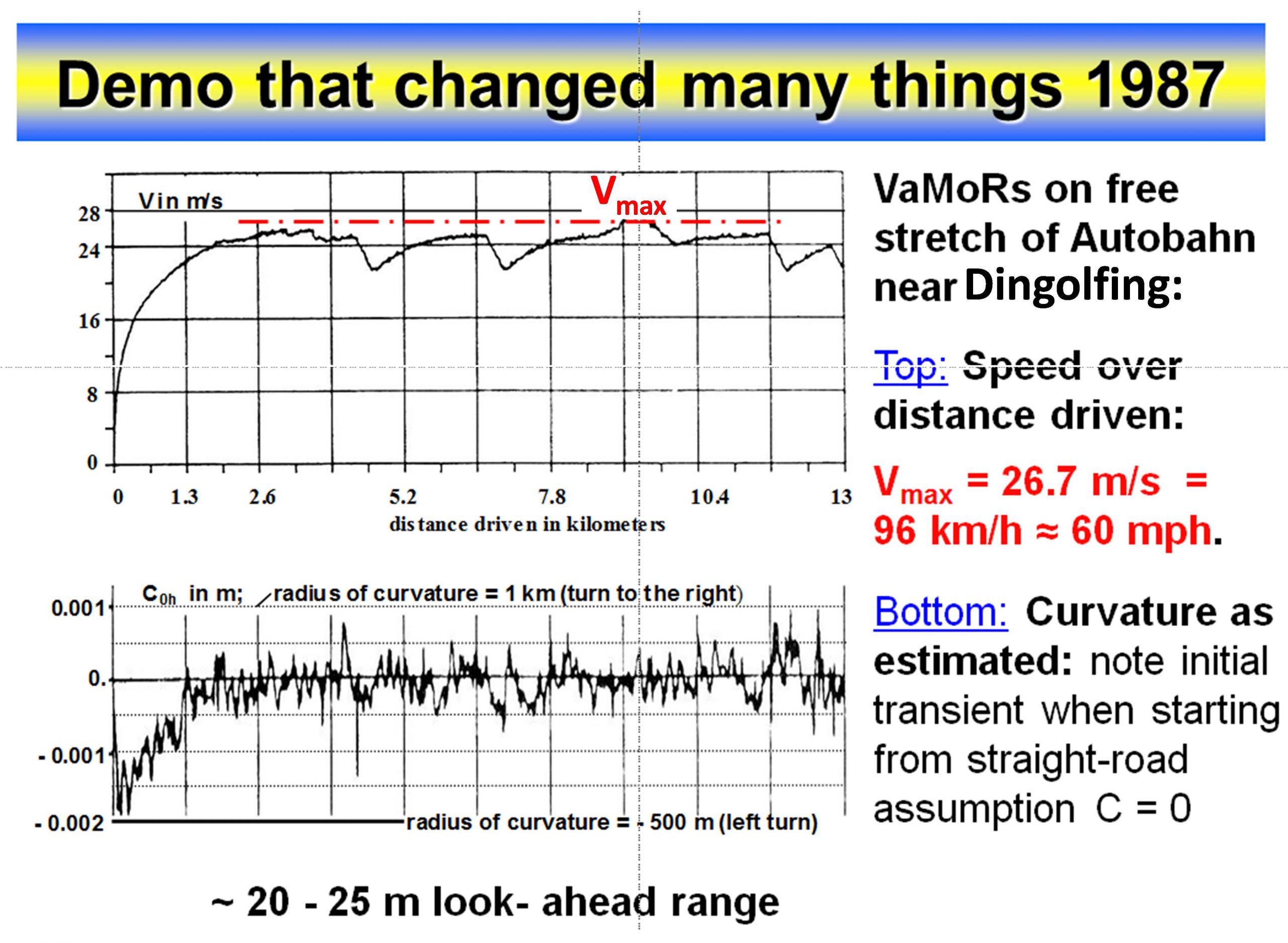

Successful tests in high-speed driving on a free stretch of Autobahn over more than 20 km, at speeds up to the maximum speed of VaMoRs of 96 km/h (60 mph), were achieved in the summer. [D4 Kurzfassung; 79 Content, page 216]

Video VaMoRs on Autobahn 1987 no obstacles

1987 / 1988

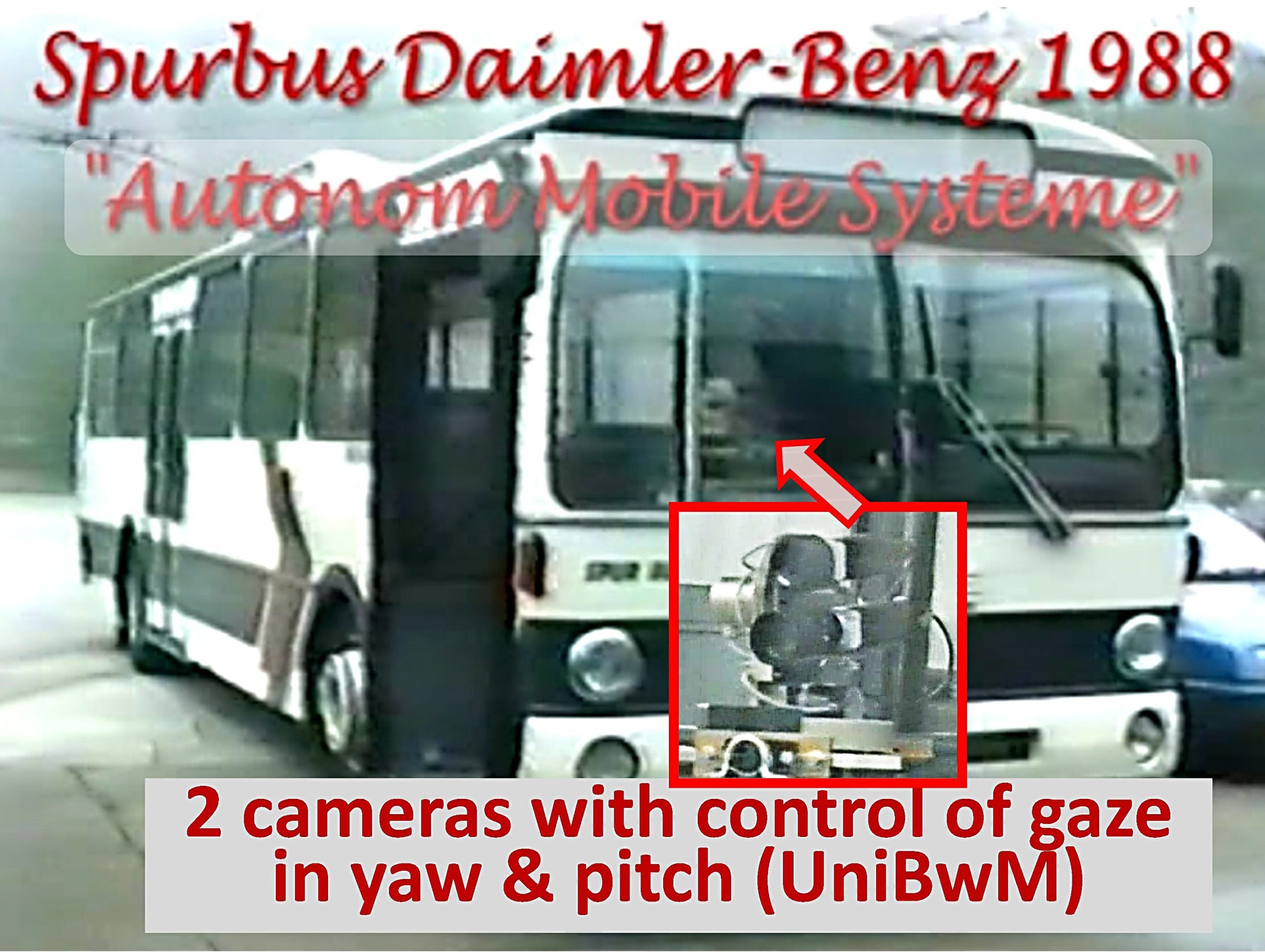

1987/88 first BMFT-project with Daimler-Benz AG (DBAG) starts: ‘Autonom Mobile Systeme’ (AMS).

Machine vision becomes part of the 7-year PROMETHEUS-project: Pro-Art [6, 10 pdf]. Many European automotive companies and universities (~ 60) join the project.

1988

After the successful funding of the first project on ‘Road vehicles with a sense of vision’, the Federal Minister for Research and Technology of the Federal Republic of Germany, Dr. Riesenhuber, came to Neubiberg to experience a test ride with VaMoRs himself and to give a press conference on this new achievement.

Time line 1988 till 1992

(First-generation vision systems BVV2)

1988 / 1989

- (First internat. TV-report BBC-Tomorrow’s World: ‘Self drive van’ video with BBC)

Video 1988 BBC Self Drive Van.mp4

- First summarizing publication on dynamic machine vision [12 pdf exc.]

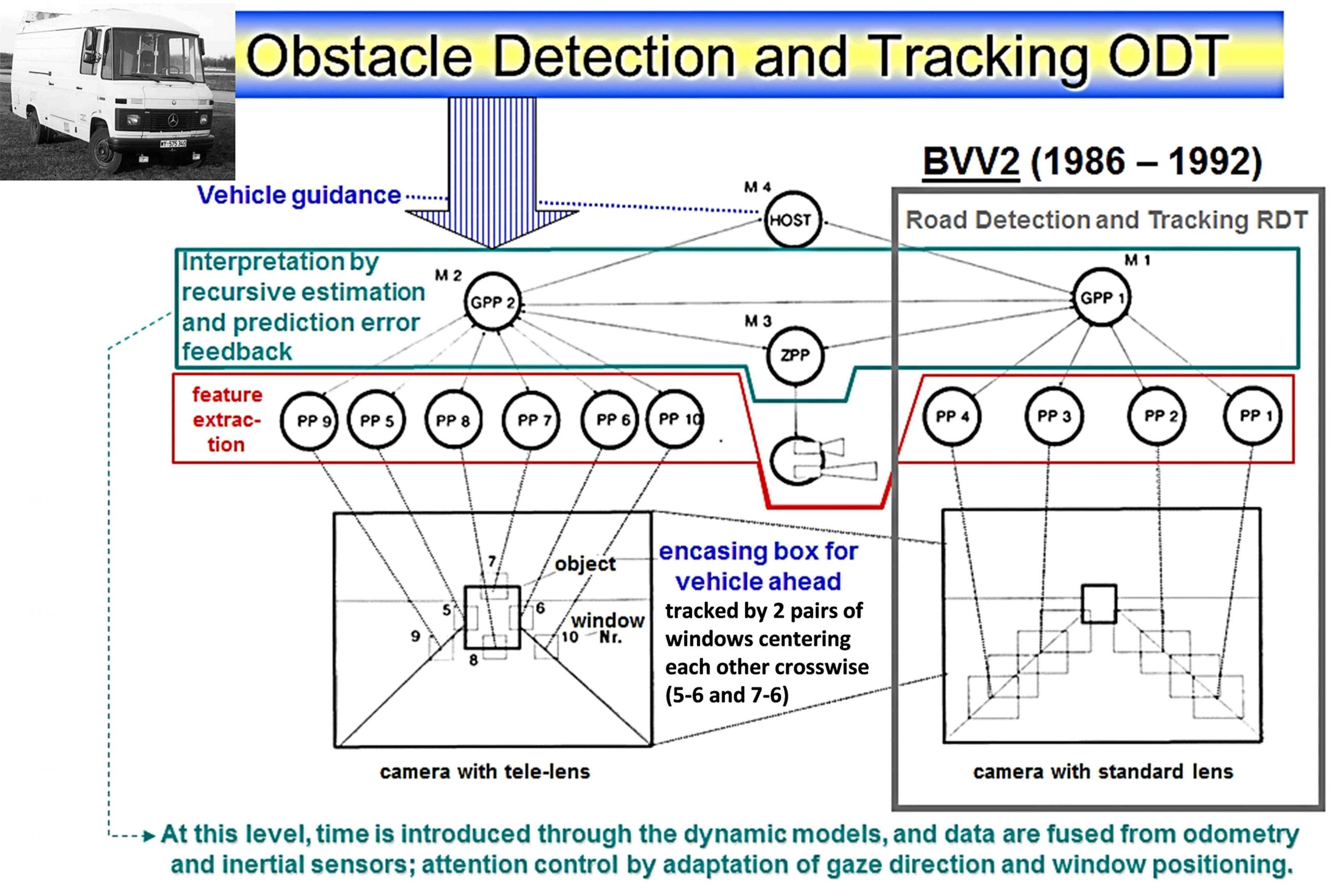

- Stopping in front of a stationary obstacle with VaMoRs / Spurbus (DBAG) from speeds up to 40 km/h; final demo of AMS-project [14 pdf].

Video 1990 DissMy.mp4 (initial part)

Video 1988 DB-Spurbus RastattGazeCurature.mp4

Video 1988 VaMoRs-Rastatt_ODT-AMS_Demo.mp4

- Invited Keynote on “The 4-D approach to real-time vision” at the Int. Joint Conf. on Artificial Intelligence (IJCAI) in Detroit [13] (video IJCAI).

- Definition of term “subject” for general objects with senses & actuators [15 pdf].

- Concept for simultaneous estimation of shape and motion parameters of ground vehicles [17 pdf; D10 Kurzfassg]; derivation of control time histories for a car visually observed.

1990

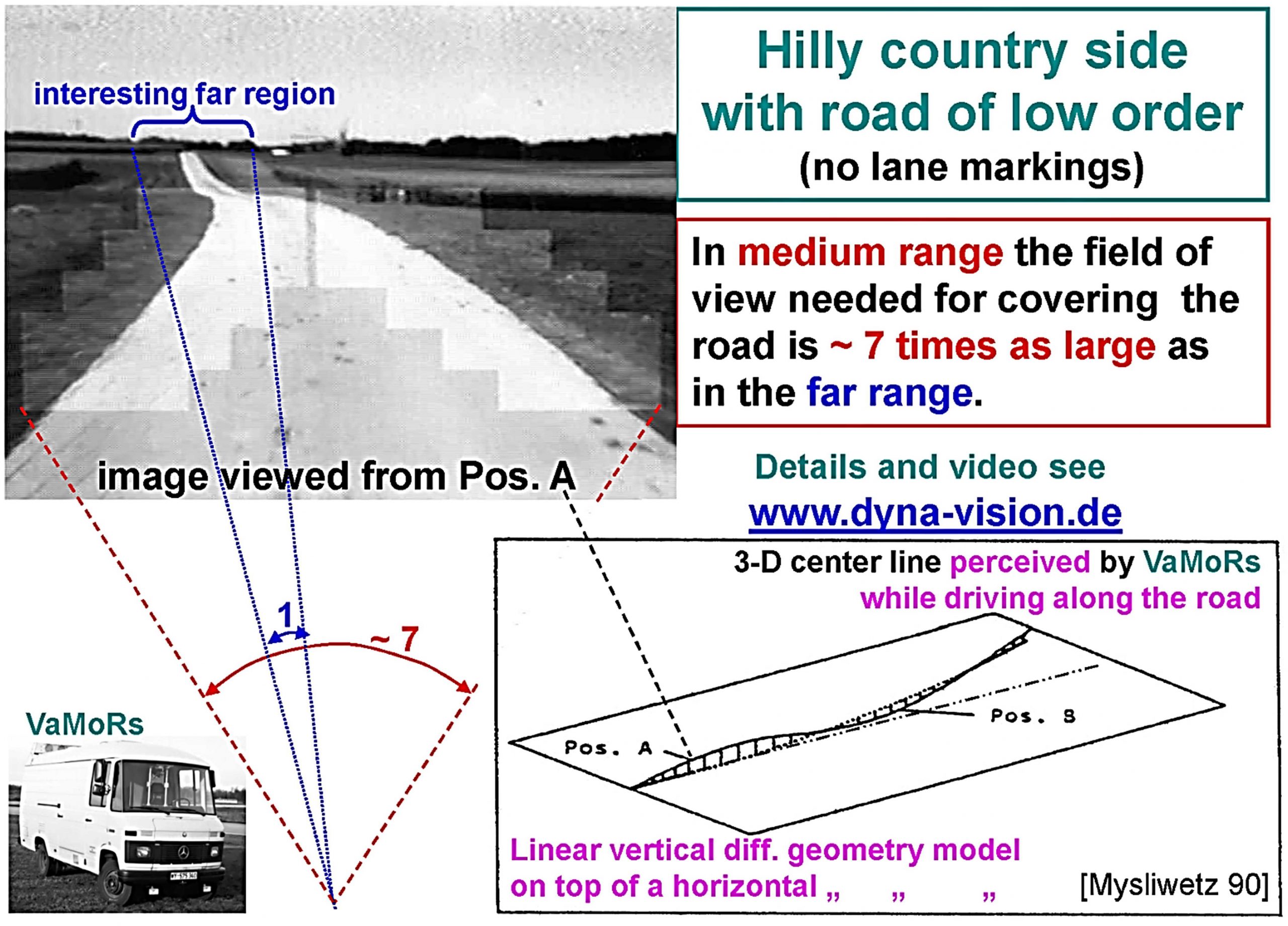

- Development of the superimposed linear curvature models for roads with horizontal & vertical curvatures in hilly terrain [D5 Kurzfassg; 79, section 9.2, Content];

Video 1990 DissMy.mp4 (final part)
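As a rough illustration of what a superimposed linear curvature model expresses, the sketch below assumes that horizontal and vertical curvature each vary linearly with arc length, C(l) = C0 + C1·l, and that heading and slope changes are small, so that integrating twice gives the offsets directly. The function name `road_offsets` and its parameters are hypothetical and not taken from the cited work.

```python
def road_offsets(l, c0h, c1h, c0v, c1v):
    """Lateral and vertical offset of the road centerline at look-ahead l.

    Assumes curvature C(l) = C0 + C1 * l in each plane (linear curvature
    model) and small angles, so that integrating the curvature twice
    over the arc length yields C0 * l**2 / 2 + C1 * l**3 / 6.
    Units: l in m, c0* in 1/m, c1* in 1/m**2.
    """
    lateral = c0h * l**2 / 2 + c1h * l**3 / 6    # horizontal plane
    vertical = c0v * l**2 / 2 + c1v * l**3 / 6   # vertical plane
    return lateral, vertical


if __name__ == "__main__":
    # Constant horizontal curvature of 0.01 1/m (radius 100 m), flat road,
    # evaluated at 20 m look-ahead distance.
    print(road_offsets(20.0, 0.01, 0.0, 0.0, 0.0))
```

Treating the two planes independently and adding their effects is what makes the model cheap to evaluate per frame; the small-angle assumption limits it to moderate look-ahead ranges.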

1991

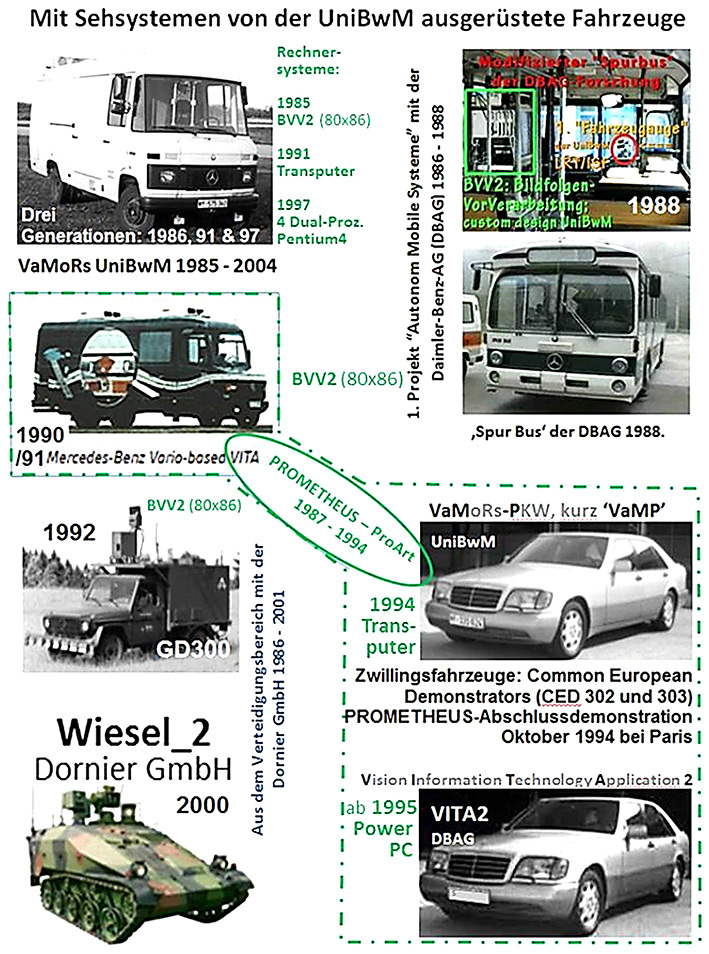

- UniBwM equips the 2nd vehicle of DBAG capable of vision, dubbed ‘Vision Information Technology Application’ (VITA, later VITA1), with a replica of Prof. Graefe’s BVV2 and with the improved software systems for perception & control.

Video 1991 Turin VisionBasedStop BehindCar.mp4

- Visual autonomous ‘Convoy-driving’ at the Prometheus midterm-demo in Torino, Italy, with ‘VITA’ (see left-hand side) at speeds between 0 and 60 km/h [16].

- (Limited) Handling of crossroads at night with headlights only.

The higher management levels of the automotive industry start accepting computer vision as a valid goal in the Prometheus project.

1992

- Decision for the ‘Common European Demonstrators’ (CED) of DBAG for the final demo of Prometheus in 1994 to be large sedans capable of driving in standard French Autoroute traffic with guests on board.

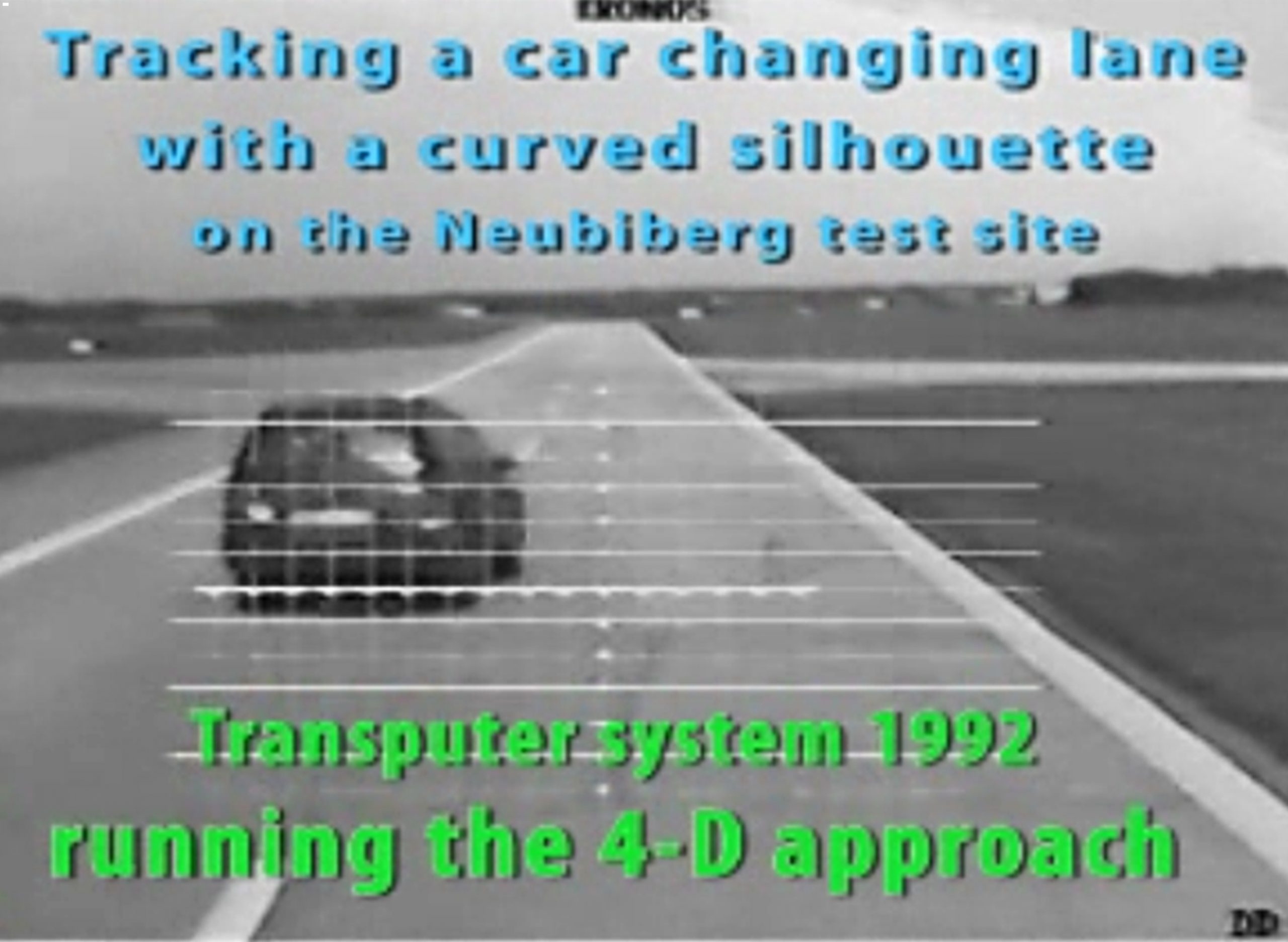

- Switch to ‘Transputers’ as processors for image sequence understanding.

Video 1992 Van Car-Tracking with Transputer.mp4

- Invited contribution to the special issue of IEEE-PAMI ‘Interpretation of 3-D scenes’ (1992): Recursive 3-D Road and Relative Ego-State Recognition [21 Abstr+introd].

1993 – 1995

Together with the defense industry, a cross-country vehicle GD 300 (see image at left) is equipped with the sense of vision for handling unsealed roads.

Video 1992 Truck-Tracking-Lane-Changes.mp4

Figure Braking gaze stabilization

Results in gaze stabilization by direct negative feedback of the sensed inertial pitch rate are shown in the figure at the left side.

Time line 1993 till 1997

(Second-generation vision systems: Transputers)

1993

DBAG and UniBwM acquire a Mercedes 500 SEL each for transformation into the CED for 1994: DBAG performs the mechanical works needed (conventional sensors added, 24 V additional power supply etc.) for both vehicles, while UniBwM is in charge of the bifocal vision systems for the front and the rear hemisphere: CED 302 = VITA-2 (DBAG) with additional cameras looking sideways, room for 3 guests; CED 303 = VaMoRs-PKW (short VaMP of UniBwM), 1 guest, for easier testing.

1994

Final demo PROMETHEUS & Int. Symp. on Intelligent Vehicles in Oct. Paris [23 a) pdf, 23b) Abstract]; performance shown by machine vision (without Radar and Lidar) in public three-lane traffic on Autoroute-1 near Paris with guests on board:

- Free lane running up to the maximally allowed speed in France of 130 km/h.

- Transition to convoy-driving behind an arbitrary vehicle in front with distance depending on speed driven;

- Detection and tracking of up to six vehicles by bifocal vision both in the front and the rear hemisphere in the own lane and the directly neighboring ones.

- Autonomous decision for lane change and autonomous realization, after the safety driver has agreed to the maneuver by setting the blink light.

Video 1994 Twofold LaneChange Paris.mp4

In total more than 1000 km have been driven by the two vehicles without accident on the three-lane Autoroutes around Paris.

1995

Our toughest international competitor CMU (under T. Kanade) had performed a long-distance demonstration in the USA during the summer of 1995, driving from the East Coast to the West Coast with lateral control performed autonomously over multiple intervals (see figure above); this received much publicity.

Since the next generation of transputers failed to appear in Europe, UniBwM with DBAG switched from transputers to Motorola Power-PC with more than 10-fold computing performance; this allowed reducing the number of processors in their vision systems by a factor of five, while doubling the evaluation rate to 25 Hz.

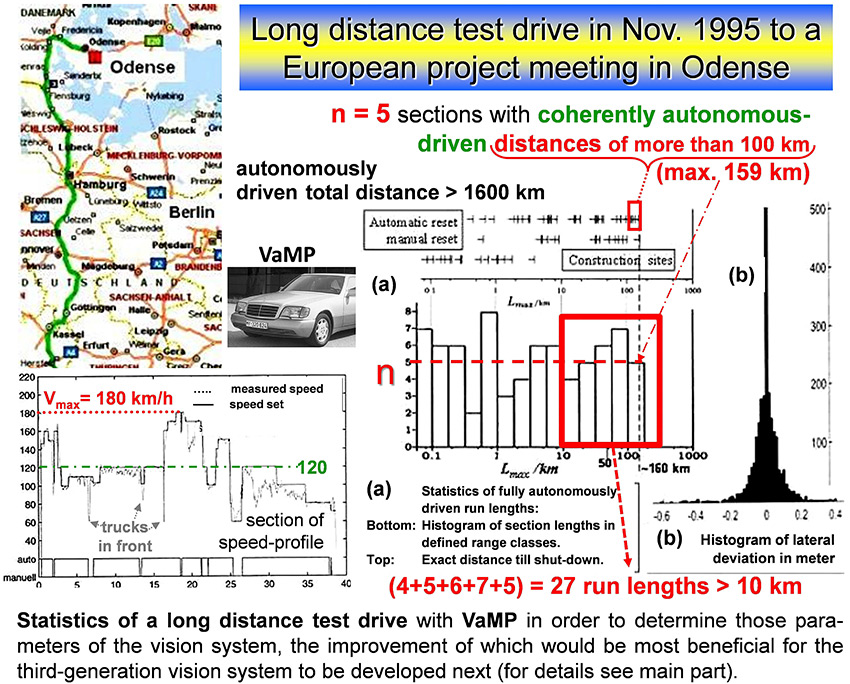

In response to the US-demonstration (above), VaMP (UniBwM) performed a long-distance test drive from Munich to a project meeting in Odense (Denmark) with many fully autonomous sections (both lateral and longitudinal guidance with binocular black-and-white vision relying on flexible edge extraction). About 95% of the distance tried has been driven autonomously, yielding > 1600 km in total. [D18 Kurzfassung]

The goal was finding those regions and components of the task promising best progress with the 3rd-generation vision system to be started next; in the northern plains, Vmax,auton ~ 180 km/h has been achieved [79, Section 9.4.2.5 Content].

Conclusions drawn:

- Increase look-ahead range to about 200 m for lane recognition;

- Introduce at least one color camera for differentiating between white and yellow lane markings at construction sites and for reading traffic signs.

- Add a) region-based features for better object recognition, and b) precise gaze control for object tracking and inertial gaze stabilization.

- Recognition of crossroads without in-advance-knowledge of parameters, determination of the angle of intersection and road width.

Autonomous turn-offs (VaMoRs, in the defense realm) [24 pdf]. For details see: [88b pdf]

1996

Decision to end the cooperation with DBAG; development of a longer-term concept for a high-level dynamic vision system: “Expectation-based, Multi-focal, Saccadic” (EMS-) Vision in a joint German – US American cooperation.

As an example, see Video 1994 GazeControl TrafficSign Saccade.mp4, showing the capabilities of gaze fixation on a moving object and saccadic perception of a traffic sign while moving by.

Time line 1997 till 2004

(Third-generation vision systems: COTS)

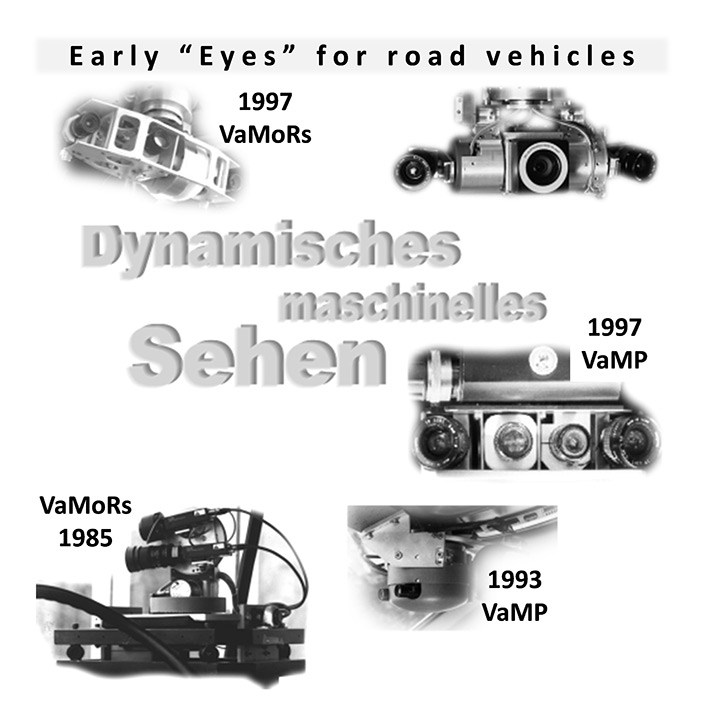

1997

Development of the 3rd-generation dynamic vision system with Commercial-Off-The-Shelf (COTS) components: EMS-vision with all capabilities (developed separately at UniBwM over the last two decades) integrated together with the first ‘real-time/full-frame stereo vision’, contributed by an American partner (former Sarnoff Research Center, SRI and Pyramid Vision Technology (PVT), Princeton, NJ) [25, 26]. The goal was flexible high-level vision without the need for much in-advance information (dubbed here: ‘Scout-type vision’). Video 1996 Turn-off taxiway Nbb-taxiway.mp4. On the higher system levels, two approaches were pursued in parallel:

- On the American side, the so-called 4D/RCS-approach of Jim Albus, National Inst. of Standards and Technology (NIST), Gaithersburg, MD [27, 28] was used; system integration went up to the level of military command & control, implemented both in the HMMWV of NIST and in the test vehicles of the US industrial partner General Dynamics, the eXperimental Unmanned Vehicle (XUV).

- On the German side, EMS-vision with specific capabilities for a) perception, b) generation of object/subject hypotheses, c) situation assessment and decision making, and d) control computation and output. It has been implemented on a COTS-system with 4 x Dual Pentium_4 together with ‘Scalable Coherent Interface’ and 2 blocks of transputers as interface to real-time gaze- and vehicle control. After development of the system with VaMoRs it has been transferred by the German industrial partner Dornier GmbH to the tracked test vehicle Wiesel_2 (see left-hand side).

1998 / 2000

Transformation of separate capabilities into the EMS-framework and integration of “Expectation-based, Multi-focal, Saccadic” (EMS) vision. Video 2000 VaMoRs NegObstacles SeriesEMS-Vision.mp4

Simplified version in VaMP for bifocal “Hybrid Adaptive Cruise Control” [D30 Kurzfassung; 33e) pdf]

A more detailed elaboration of this page may be found under link [88c) pdf].

See below for details in Mission performance

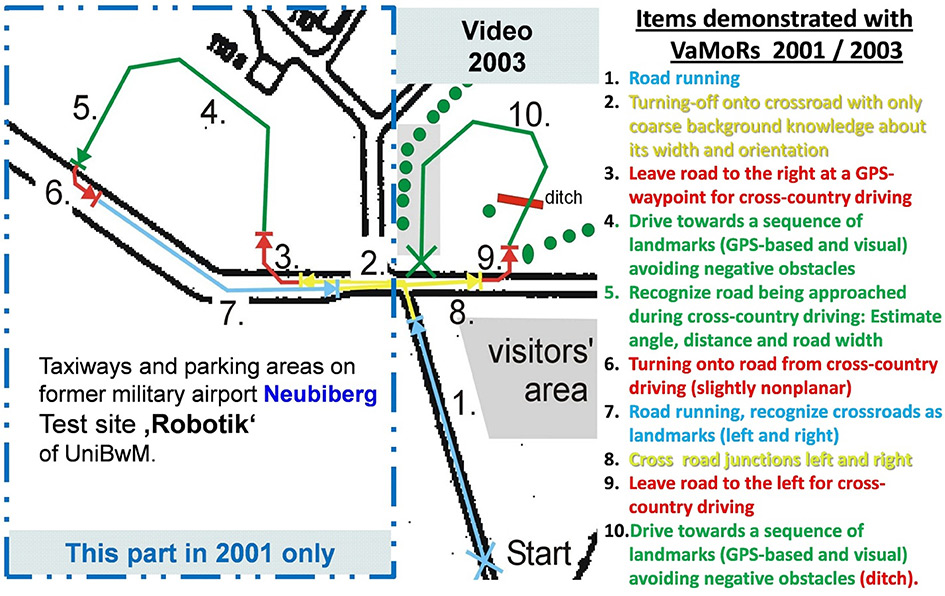

2001

Final demo with VaMoRs in Germany 2001 showed ‘Mission performance’ with 10 mission elements on the test track in Neubiberg (see below) [29 – 46, D25 – D30; Kurzfassg ‘Komponenten’, Kurzfassg ‘Fähigkeiten’, Kurzfassg ‘Fahrbahnerkennung’, Kurzfassg ‘Verhaltensentscheidung’]. The separate Sarnoff-PVT stereo system in 2001 had a volume of about 30 liters.

Video 2001 EMS-VaMoRs Trinoc. Turn left.mp4.

USA: Congress defines as a goal for 2015 that 1/3 of ground combat vehicles shall be capable of driving autonomously. {This triggered the Grand and Urban Challenges of DARPA in the years 2004 to 2007.}

Video 2003 EMS-On-OffroadDriving VaMoRs.mp4. “Scout-type” vision on network of minor roads.

2003

In total, over two decades UniBwM/LRT/ISF, till 1991 in cooperation with the ‘Institut für Messtechnik’ (Prof. V. Graefe), has equipped seven road vehicles with three generations of real-time vision systems for autonomous driving as shown in the following figure:

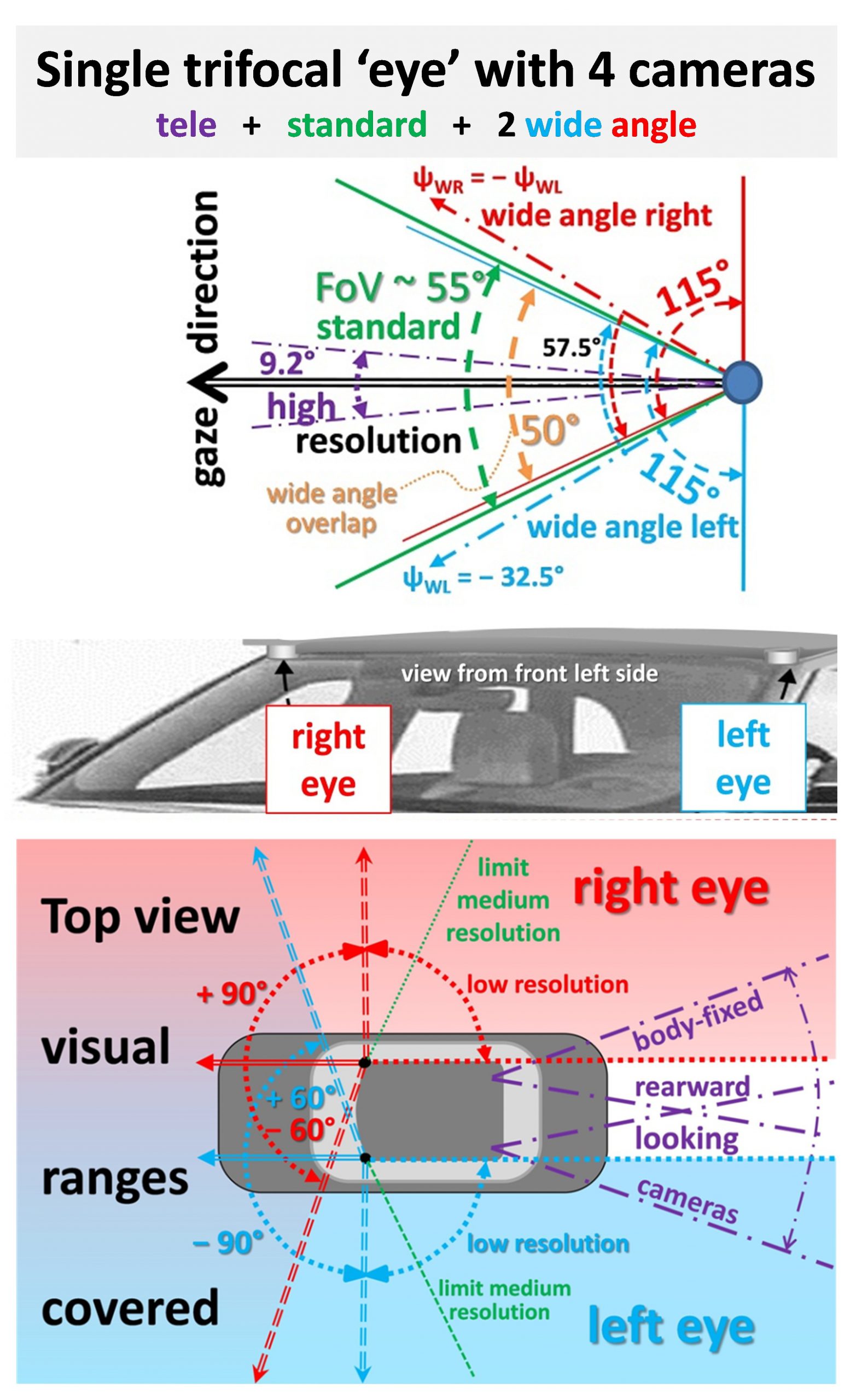

The result on efficient extraction of several kinds of image features in road scenes with a single method is described in [D 2012]. A survey of the developments in the field of a sense of vision for road vehicles may be found in [D 2017] (in English) and in [D 2019] (in German). Publications in this field have been concluded by [D 2020].

That paper raises the question why the predominant solution in biological vision systems, namely pairs of eyes (very often multi-focal and gaze-controllable), has not been found in technical systems up to now, though it may be a useful or even optimal solution for vehicles too. Two potential candidates with perception capabilities closer to the human sense of vision are discussed in some detail: one with all cameras mounted in a fixed way onto the body of the vehicle, and one with a multi-focal gaze-controllable set of cameras. Such compact systems are considered advantageous for many types of vehicles if a human level of performance in dynamic real-time vision and detailed scene understanding is the goal. Developing increasingly general realizations of these types of vision systems may take all of the 21st century; the concluding figure shows just one potential candidate system.

References

[D 2012] Dickmanns E. D.: Detailed Visual Recognition of Road Scenes for Guiding Autonomous Vehicles. pp. 225-244, in Chakraborty S. and Eberspächer J. (eds): Advances in Real-Time Systems, Springer, (355 pp)

[D 2017] Dickmanns E. D.: Developing the Sense of Vision for Autonomous Road Vehicles at the UniBwM. IEEE-Computer, Dec. 2017. Vol. 50, no. 12. Special Issue: SELF-DRIVING CARS, pp. 24 – 31

[D 2019] Dickmanns E. D.: Fahrzeuge lernen Sehen – Der 4-D Ansatz brachte 1987 den Durchbruch. Naturwissenschaftliche Rundschau. Heft 2 (Febr.), 2019; NR 848, Seiten 51-60

[D 2020] Dickmanns E. D.: May a pair of ‘Eyes’ be optimal for vehicles too? Electronics 2020, 9(5), 759; https://doi.org/10.3390/electronics9050759 (This article belongs to the Special Issue ‘Autonomous Vehicles Technology’).